Generation Cybersecurity: What You Should Know, and Be Doing About It

A professional engineer specializing in the cybersecurity of industrial control systems explains cybersecurity controls that should be present at every generation plant and why they are needed for basic risk reduction from everyday cybersecurity threats.

Cybersecurity has become a topic of interest over the past year in generation, owing to new developments in North American Electric Reliability Corp. Critical Infrastructure Protection (NERC CIP) regulations, awareness of the vulnerability of generation control systems, and several incidents that have caused downtime and production issues for generation owners.

The intent of this article is to help generation owners and operators understand what cybersecurity vulnerability is and to outline some basic first steps in reducing the risk to production from cybersecurity vulnerabilities.

Vulnerabilities and Exploits

Cybersecurity issues are not like issues normally seen in a control system. Excepting human performance issues, control system events are natural—they evolve from the physical characteristics of the system under control. Engineers have studied these problems and created mechanisms that respond before the conditions affect production. But these problems involve physical laws and forces that are deterministic and (to an extent) predictable.

Cybersecurity issues are different; they involve a human intelligence. Though the forces of nature are impressive, they are not clever, nor resourceful, nor cunning. Exploits are created, and vulnerabilities are used, to defy and change the rules of the systems they target (see sidebar “How the Rules Have Changed”).

| How the Rules Have Changed

The best real-world example of how the rules can change from a cybersecurity event is Stuxnet. Stuxnet has been extensively studied from a control systems perspective by Ralph Langner, who first identified that the virus was targeting Siemens controllers. In Langner’s final paper on Stuxnet (“To Kill a Centrifuge”), he states that the entire Natanz control system down to the controller level was altered to wreck the centrifuges used for the Iranian nuclear enrichment program. The malicious code “decoupled” the legitimate code during the preprogrammed attack on the centrifuges. The malicious code replayed 21 seconds of history and masked the actual values from the legitimate logic. Langner even suggests that certain pressure probes that would have monitored overpressure, and tripped the system as protection, were de-calibrated by the attack code to mask the need for pressure venting as long as possible. In an environment where the control system has the final say on what is wrong within a process, the creators of Stuxnet used the system against the very engineers who were entrusted to fix it. |

The Lack of Security in Control Systems

Most commercial software now is built to enforce good security principles. For instance, when navigating to a banking website to make a transaction, there are multiple layers of security so that the bank has a reasonable belief that a user is authorized to make a transaction.

Industrial control systems don’t possess these mechanisms. An entity that can communicate with a control system can make changes that should be reserved for operators and engineers. The only limiting factor is how steep the learning curve is for communicating with the control system. Lack of security was built in during original development, and it hasn’t changed for most control products.

For example, Project Basecamp was a Digital Bond project in 2011–2012 to evaluate and catalog the security vulnerabilities present in several programmable logic controller (PLC)–based platforms. What was found was expected: The systems under test had numerous issues that an attacker with communications access could exploit to alter a process.

Digital Bond researchers found that device configurations could be changed or device firmware altered. Many systems had undocumented features and accounts that gave new privileges when accessed, and several systems could be easily crashed with a single command.

The conclusion was clear: Control system development has not kept pace with modern cybersecurity issues.

Compounding the lack of security in control systems is the tendency for those systems to fail when exposed to network traffic and data that is common on corporate networks and the Internet but that exceeds the original design.

Experience points to a single conclusion: Malicious programs or people who can communicate with a control system are able to change, operate, or entirely crash it.

Establish a Perimeter

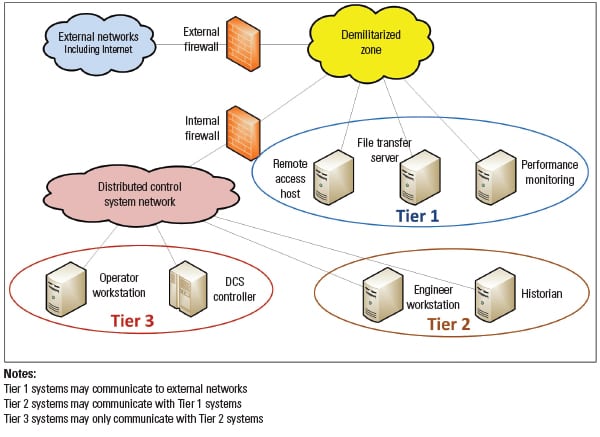

Because of the vulnerability of control system software and hardware as it stands today, a strong network perimeter is vital (Figure 1). There are three goals when establishing a perimeter:

- Prevent external entities from accessing the control system networks and devices (see sidebar “Example of Excessive Automation Capability and Risk Reduction”).

- Prevent control system networks and devices from accessing external entities.

- Limit access, in cases where access to or from control systems networks and devices is required to meet a valid business need, to the minimum capability necessary to get the job done.
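Taken together, the three goals above amount to a default-deny policy with a short list of justified exceptions. The following is a minimal Python sketch of that idea, not a firewall configuration: the host names, the port, and the `Flow` type are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One direction of traffic crossing the control system perimeter."""
    source: str       # hypothetical host or zone name
    destination: str  # hypothetical host or zone name
    port: int         # TCP port of the service being reached


# Hypothetical allowlist: each entry exists only because of a documented
# business need, and grants only the minimum capability for that need.
ALLOWED_FLOWS = {
    # The plant historian pushes data outward to a corporate reporting server.
    Flow("plant-historian", "corp-reporting", 443),
}


def is_permitted(flow: Flow) -> bool:
    """Default deny: any flow not explicitly listed is blocked, in either direction."""
    return flow in ALLOWED_FLOWS
```

Note that permitting the historian to reach the reporting server does not permit the reverse direction; each direction would need its own justified entry.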

| Example of Excessive Automation Capability and Risk Reduction

An engineer at a coal mine-mouth plant has identified that her distributed control system (DCS) has a Modbus connection to a coal-handling facility run by a separate mining subsidiary. This is an external connection, because the mining subsidiary’s responsibility and accountability are not directed toward the reliable production of power. The connection feeds necessary operational data into the DCS regarding coal volume, and it can’t be removed. When evaluating the Modbus connection, the engineer discovers that while the handling facility uses only a small subset of points, the connection allows access to many more points that the handling facility staff have no need to access and that could cause operational issues. Because the mining facility has very little responsibility and accountability for power plant operations, she recognizes that the extra points are a risk to her operation. Using the engineering application, she limits the points available via Modbus to only those necessary, and removes control points entirely. |
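The review in the sidebar example can be pictured as a simple filter over the points reachable through the connection. The sketch below is illustrative only: the point names and the "data"/"control" labels are hypothetical, and in practice the restriction would be configured in the DCS engineering application rather than in Python.

```python
# Hypothetical DCS points reachable over the Modbus link before the review.
# The value is "data" for monitoring points and "control" for points that
# can change the process.
ALL_POINTS = {
    "coal_volume_silo_a": "data",
    "coal_volume_silo_b": "data",
    "feeder_speed_setpoint": "control",
    "boiler_drum_level": "data",
}

# Hypothetical subset the coal-handling facility actually uses.
NEEDED_BY_HANDLING_FACILITY = {"coal_volume_silo_a", "coal_volume_silo_b"}


def exposed_points(all_points: dict, needed: set) -> set:
    """Keep only the needed points, and never expose a control point."""
    return {
        name
        for name, kind in all_points.items()
        if name in needed and kind != "control"
    }
```

Even if a control point were mistakenly added to the "needed" set, the filter would still leave it out, which mirrors the decision to remove control points entirely.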

Though there is a tendency to focus on IT connections, don’t ignore automation connections. Automation connections are often a direct route to the internal workings of your system, and they are often configured for a lot more capability than is required for functionality. External automation connections are often done with protocols that are well-known to engineers and security professionals. Additionally, the methods used for these automation connections have changed over the years, with pure IP, Ethernet to serial converters, and serial servers becoming good options for reliable communication but coming with an additional risk as well.

Prioritize Patching for Exposed Systems

In my experience, generation engineers are concerned about the risk of patching systems. The reasoning is that patches can often introduce unknown elements to what is a functioning system. This view is supported by anecdotes of patches that have negatively affected operations. Additionally, patching comes with additional manpower and testing costs, ones that should be evaluated before undertaking a patch.

Not patching carries a risk similar to that of not fixing a piece of equipment with a known problem. For instance, if a pump has a tendency to overheat, the decision might be to limit usage and to watch closely for problems.

However, a “watch and see” approach in cybersecurity is based on a faulty assumption that tools and personnel are available who can recognize a cybersecurity compromise at the same level as they can diagnose and troubleshoot an overheating pump. Even many security professionals can have difficulty recognizing malicious code in the wild without considerable experience and investment. This level of capability simply does not exist at most generation plants, and it is not a core function of making electric power.

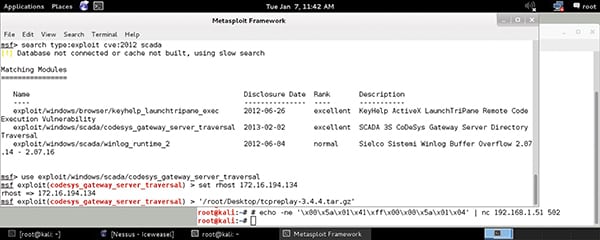

Unpatched systems are vulnerable to simple exploits, many of which have point-and-click usage. Without periodic patching, systems would remain vulnerable forever, awaiting a motive to compromise. Patching every time a vulnerability is discovered is a worthy goal but is not appropriate for a generation environment. What is needed is a more intelligent process that patches based on risk and that limits risk to the control system.

Prioritized patching is an approach that ensures that systems with the most exposure to the outside world are patched first, and other systems follow on a set schedule. No matter what, control system engineers should set the patch schedule for their control systems and never patch a system when it can affect operations. Digital Bond has used three tiers:

- Tier 1: Patch systems with external connections as soon as possible after testing.

- Tier 2: Patch systems that can communicate with Tier 1 systems during biannual outages.

- Tier 3: Patch everything else at least annually.

Ideally, Tier 1 systems are those systems that would not have an impact on operation. Specifically, these would be things like external-facing historians, performance-monitoring systems, remote access, and human-machine interfaces (HMIs) not used by operators. Tier 2 systems would be interface systems, those responsible for passing data to and from Tier 1 systems. They would ideally not have a major control function, and should be patched on a scheduled basis. Tier 3 consists of critical devices and systems that, if compromised, could affect the production of electric power.
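The tier assignment described above can be sketched as a small classification over a system inventory. This is one possible reading of the tiers, not Digital Bond’s tooling: the `System` fields and the inventory entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    has_external_connection: bool  # communicates with anything outside the perimeter
    talks_to_tier1: bool           # passes data to or from a Tier 1 system


def patch_tier(system: System) -> int:
    """Return the patching tier (1, 2, or 3) for a system."""
    if system.has_external_connection:
        return 1  # patch as soon as possible after testing
    if system.talks_to_tier1:
        return 2  # patch during scheduled outages
    return 3      # patch at least annually


# Hypothetical inventory, for illustration only.
inventory = [
    System("turbine-controller", False, False),
    System("external-historian", True, False),
    System("data-interface-server", False, True),
]

# Most exposed systems first.
schedule = sorted(inventory, key=patch_tier)
```

Whatever order such a script produces, the control system engineers still set the actual patch dates so that patching never affects operations.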

The risk of not patching everything in an IT environment would be that viruses could spread throughout the environment quickly. However, control systems are already so vulnerable that simply being on the same network as a control system would allow an attacker much more capability than using an exploit.

Say No to Indiscriminate Use of Technician Laptops

Laptops are the newest tools at a generation site. Turbine tuning is routinely done with a laptop and a set of sensors, and protection relays are configured with laptops as well. Most major control system vendors have standard software, and this software is installed on laptops for ease of use.

There is nothing inherently wrong with using a laptop to complete vital work, but laptops are not wrenches and screwdrivers. They are multipurpose, and they have the capability to affect operations in ways that exceed the scope of work. Laptops in industrial environments tend to migrate between control system tasks and web browsing, email, and basic file storage. Switching between the outside world and the control system increases the risk of an outside influence on the process, and does so without any intent from the user.

Restricting laptops becomes an even more important policy when the technician laptop is owned by an outside contractor. That laptop has likely been to several other industrial sites. It may even have been connected to various Wi-Fi access points for Internet usage, and it probably isn’t getting the patches and anti-virus updates it should. Logically, allowing a previously Internet-connected laptop to plug in to a control system network is not a good risk-prevention strategy.

Laptops for control system use should be segregated from normal IT laptops and never used for IT functions like email and general web browsing. They should receive updates to anti-virus and patching. These laptops should be checked out for usage and have appropriate maintenance and training procedures, just like a tool that can have a detrimental effect on operations if used incorrectly. Laptops not owned and managed by the plant should never be allowed to connect without inspection, just like other specialized tools a contractor would bring on site.

Restrict Other Removable Media

CDs and DVDs have been the usual way that control system software is distributed to users, but use of USB drives for simple file transfers has exploded over the past several years in automation. All of these means of moving files from system to system are grouped under the heading of “removable media.” Backups of control systems are conducted using large removable media, vendors often bring a set of tools and scripts on USB removable media, and the configurations for many automation devices can be loaded directly from removable media.

The use case of removable media makes it very attractive for reliably infecting systems. Removable media is almost exclusively used to swiftly transfer a program or file that is immediately needed. Once on the system, it is run by user action. If that file/program is a virus or other malicious code, it is now resident on your control system, regardless of the individual’s intent. To modify a familiar safety quotation: That which must be done fast is never done securely (see sidebar “Incidents Involving Removable Media at Generation Sites”).

| Incidents Involving Removable Media at Generation Sites

From a historical perspective, USB drives hold the record for the most publicly reported impact on generation operations. In October 2012, ICS-CERT Monitor (published by the U.S. Department of Homeland Security’s Industrial Control Systems Cyber Emergency Response Team, ICS-CERT, http://ics-cert.us-cert.gov) called out two specific instances of virus infection at generation plants involving USB drives. In the first case, a third-party technician infected a turbine control system with the Mariposa virus via a USB drive. The Monitor states that the Mariposa infection and cleanup delayed the restart of the plant by three weeks. The Mariposa virus is a botnet virus discovered in December 2008, which allows victim systems to be rented for use in conventional hacker attacks on the Internet. The primary tactic is conducting denial of service attacks, where significant network traffic is directed at a target from multiple infected systems, crashing the target. Effective detection and prevention (via anti-virus) has been available for Mariposa since early 2009. If the Monitor article is accurate about the three-week delay being a result of the virus, this is three weeks of lost revenue due to a fully detectable and preventable issue. The second incident involved an employee who was backing up two engineering workstations and inadvertently used an infected USB drive. ICS-CERT does not call out the malware found, but once again, an updated anti-virus scan run by the company’s IT department found it. Here too, regardless of individual intent, the control system was compromised by an easily detectable and preventable virus. |

Removable media should never be directly connected to control systems without a rigorous inspection process. The inspection process should make use of anti-virus and should include a means to verify that vendor software is unaltered. Vendor software on removable media should come directly from the manufacturer, with no potential for added files. Removable media used for control systems should be reserved for control system use and never used on noncontrol systems.
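One common way to verify that vendor software is unaltered is to compare a cryptographic hash of the file against a value the vendor publishes. The Python sketch below assumes the vendor provides a SHA-256 hash through a separate, trusted channel; that is an assumption, not something every vendor does, and the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large installers do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unaltered(path: Path, published_sha256: str) -> bool:
    """Compare the file's hash with one obtained from the vendor (assumed available)."""
    return sha256_of(path) == published_sha256.strip().lower()
```

A matching hash shows only that the file is the one the vendor published; it complements the anti-virus scan in the inspection process and does not replace it.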

Use Anti-Virus

In generation, we make extensive use of protective relaying systems to detect known fault conditions and respond to those fault conditions by taking prescribed action before damage to equipment occurs. Protective relays are used everywhere because of regulations, and because the calculus of risk is simple: A single event has the potential to harm a significant capital investment and lose revenue, and the cost of the prevention via protective relay is far less than the consequence of not preventing it. Protective relaying isn’t perfect, but it is significantly better to operate with it than without it.

Anti-virus is the protective relay concept applied to computer systems and processes. Anti-virus continuously monitors for known cybersecurity conditions and halts the activity before it can damage the system. Anti-virus will not protect you from new or unknown conditions, but it does provide good protection against what has already been found and classified. Not all control systems can use anti-virus, due to age of the operating system, but all should investigate its usage as a good control.

There has been discussion about anti-virus among generation professionals over the past decade. Many see it as a drain on computing resources, as the protection requires a certain level of processing power and memory to be effective. Additionally, there is a risk of a false positive, where the anti-virus flags a valid process as a threat and shuts it down. Because the flagged process could be a vital control system process, this risk must be considered during the testing of new signatures and during updates of programs.

However—to reuse the protective relay example—relays may also experience false positives, and the consequences of a relay misoperating can be equally nasty. Fundamentally, the risk of misoperation was determined to be less than the risk of operating without protection, and procedures were put in place to test settings. The same type of procedure is necessary to minimize the negative aspects of using anti-virus.

Major DCS and control system vendors in electric power have endorsed the use of anti-virus (though they generally require that you use the anti-virus product they have tested with their software). Consult your vendor about which anti-virus products can be used on your system and what the common activities are, and make sure you cover your corner cases (such as OPC servers or other software that is not part of the vendor testing requirements).

Get a Third-Party Cybersecurity Risk Assessment

Once the basics above have been covered, it’s time for a more in-depth assessment of your cybersecurity risk. A third party should be consulted to see what other measures may be appropriate to your environment and the level of risk they would remove. The best time to conduct an assessment like this is during an outage, coordinated with all the other work that will be conducted on site.

There will be findings in this third-party assessment, but the key is to verify that your initial controls have been configured appropriately and to look for other risks that should be addressed. And, as noted above, access to the control system network should not be given to the third party without a plan to ensure that systems and scripts will not cause production issues. The same tools and techniques used by security assessors can crash a system just as easily as a malicious attacker can.

Numerous suppliers exist to provide cybersecurity services, but there are fewer that specialize in control systems and generation in particular. Control system vendors also offer this service, but many also sell products to “solve” the same cybersecurity problem. Try to get a team that has some experience in industrial environments, as they are most aware of risks, and discuss the risks and rewards carefully.

The report should include a list of specific cybersecurity findings from the assessment, an appropriate rating of the risk each poses to operation, and general steps to fix the problem.

Security Work Is Never Done

Most cybersecurity incidents aren’t Stuxnet; they are mundane in nature. Numerous companies around the world conduct ongoing research on threats. Having a good cybersecurity program that limits normal risk creates the opportunity to find the more malicious, targeted viruses that may be aimed at generation systems.

For this article, I was asked to provide good practices for cybersecurity in generation, irrespective of NERC CIP compliance concerns. If you are a NERC CIP plant, these practices will likely aid your compliance program, but they will not make you compliant on their own. The intent of these recommendations is to provide efficient risk reduction from cybersecurity events, answering the question “Where should I put my next dollar in order to get the biggest cybersecurity improvement?”

There will undoubtedly be discussions of controls that I’ve “missed,” or other prudent controls that should take precedence before the ones discussed in this article. These discussions are valuable, because they provide different perspectives on the problem of securing generation control systems. The practices in this article are not meant to be the final say, but they should provide a place for concerned operators of generation facilities to start. ■

— Michael Toecker, PE ([email protected]) is a cybersecurity consultant, engineer, and White Hat Hacker for Digital Bond Inc. (www.digitalbond.com) specializing in the cybersecurity of industrial control systems, predominantly those used in power generation.