Ensuring the Cybersecurity of Plant Industrial Control Systems

Industrial control systems (ICSs) manage global industrial infrastructures, including electric power systems, by measuring, controlling, and providing a view of control processes that once were visible to the operator but now are not. Frequently, ICSs are not viewed as computers that must operate in a secure environment, nor are they often considered susceptible to cybersecurity threats. However, recent cybersecurity failures have proven these assumptions wrong.

Industrial control systems (ICSs) are commonly used throughout global industrial infrastructures. The fundamental reason for securing ICSs is to maintain the mission of the systems, be it to produce or deliver electricity, make or distribute gasoline, provide clean water, or enable other vital societal functions. We may not be able to fully electronically secure ICSs from every possible vulnerability. However, we can make them much more secure than they are today.

Secure ICSs will minimize the possibility of unintentional incidents like those that have already cost hundreds of millions of dollars in damages and taken a number of lives. The three case studies discussed later in this article demonstrate the difficulties of designing a cyber secure environment for ICSs.

What Are ICSs?

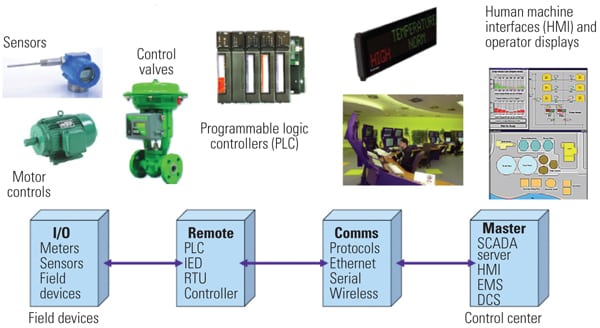

ICS networks and workstations, including the human-machine interface (HMI), are generally similar to information technology (IT) systems and may be susceptible to standard IT vulnerabilities and threats. Consequently, IT security technologies and traditional IT education and training processes apply. But that’s not all. Field instrumentation and controllers generally do not use commercial off-the-shelf operating systems and are computer resource constrained. They often use proprietary, real-time operating systems or embedded processors. These systems have different operating requirements and can be impacted by cyber vulnerabilities typical of IT systems as well as cyber vulnerabilities unique to ICSs (Figure 1).

1. Control system basics. This diagram shows the different aspects of an ICS using a Windows-based workstation with secure field elements but with generally little to no security in the overall control system. Source: Applied Control Solutions

Infrastructure owners continue to upgrade their ICSs with advanced communication capabilities and network connections to improve process efficiency, productivity, regulatory compliance, and safety. This networking can be within a facility or even between facilities that are continents apart. Regardless, with increased system complexity comes greater system vulnerability, and few qualified experts have a holistic view of the complete system. When an ICS does not operate properly, the results can range from inconsequential to catastrophic.

Securing ICS and IT Systems

A properly secured industrial system requires one part physical security, one part IT security, and one part ICS security to properly function. Physical security is generally well-understood and is often addressed by experts coming from the military or law enforcement. IT security typically deals with commercial off-the-shelf hardware and software and connections to the Internet, with experts coming from IT and the military. ICS security, in contrast, is an engineering problem requiring engineering solutions. Resilience and robustness are the critical factors in the survivability of compromised ICSs.

ICS security requires a balanced approach to technology design; product development and testing; development and application of appropriate ICS policies and procedures; analysis of intentional and unintentional security threats; and proactive management of communications across view, command and control, monitoring, and safety. It entails a lifecycle process, beginning with conceptual design and ending with retirement of the systems. In other words, ICS security is not only more difficult to design than physical and IT security, but it also requires an added level of expertise not usually held by physical and IT security experts.

The triad of confidentiality, integrity, and availability (CIA) effectively defines the attributes needed for securing systems. In the IT domain, cyber attacks often focus on the acquisition of proprietary information. Consequently, confidentiality is the most important attribute, which usually dictates that encryption is required.

However, in the ICS domain, cyber attacks tend to focus on the destabilization of assets. Moreover, most ICS cyber incidents are unintentional and often occur because of a lack of effective message integrity and/or appropriate ICS security policies. Consequently, integrity and availability are much more important than confidentiality in ICSs, which lessens the importance of encryption and significantly raises the importance of authentication and message integrity. That is why ICS security research and education should focus on technologies that address integrity and availability over confidentiality.
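To make the distinction concrete, here is a minimal sketch of message authentication without encryption, using Python's standard `hmac` module. The command text, the key, and the function names are hypothetical and chosen only for illustration; they do not represent any vendor's protocol. The payload travels in the clear, but the receiver can tell whether it was altered in transit.

```python
import hashlib
import hmac

# Hypothetical shared key for illustration only. A real deployment would
# provision, store, and rotate keys through a managed process.
SHARED_KEY = b"example-key-for-illustration-only"


def sign_command(payload: bytes, key: bytes = SHARED_KEY) -> bytes:
    """Return an HMAC-SHA256 tag for a plaintext command payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify_command(payload: bytes, tag: bytes, key: bytes = SHARED_KEY) -> bool:
    """Accept the command only if the tag matches (constant-time compare)."""
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


# Hypothetical command format; the payload itself is not encrypted.
command = b"SETPOINT unit=1 load_mw=250"
tag = sign_command(command)

print(verify_command(command, tag))                       # message as sent
print(verify_command(b"SETPOINT unit=1 load_mw=0", tag))  # message altered in transit
```

This sketch covers integrity and origin authentication only. It does not address replayed messages, which would need something extra such as a sequence number or timestamp inside the signed payload.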

Differences Between IT and ICS Systems

Cybersecurity in the U.S. is generally viewed in the context of traditional business IT systems and Department of Defense systems. IT systems are “best effort” in that they complete the task when they get the task completed. Unlike IT systems, ICSs are not general-purpose systems and components but are designed for specific applications. The ICS design criteria are performance and safety, not security. ICS systems are “deterministic” in that they must do their jobs immediately; they cannot wait, because later is too late.

Legacy ICSs were not designed to be secured or easily updated. Nor were they designed to enable efficient security troubleshooting, self-diagnostics, and network logging. A security—not a design or safety—flaw was exploited by the Stuxnet virus. (Enter “Stuxnet” in the search box at https://www.powermag.com for POWERnews coverage of related events.) The same flaw exploited by Stuxnet is inherent in all of the programmable logic controller (PLC) designs and cannot be easily fixed. It was never a problem because it did not affect performance or safety. This leads to a double negative conundrum for the IT security practitioner: When it comes to security, control systems don’t do what they weren’t designed to do.

The table compares key characteristics of business IT systems and ICSs. These differences can have very dramatic impacts on ICS operation and education.

Comparison of typical IT system and ICS characteristics. Source: Applied Control Solutions

Unfortunately, the distinctions between IT systems and ICSs are not recognized by regulators and politicians, and the consequences of this are grave. The smart grid initiative is already providing real case histories of what happens when those without an understanding of the operational domain try to set the rules for systems they do not understand.

The smart grid today is actually two domains “bolted together.” The first is the “smart home,” including smart meters and (in some cases) home area networks. This is where the majority of smart grid security efforts are being focused by industry and the National Institute of Standards and Technology smart grid program. The second piece is the electric grid and power plants. There is significantly less research and investment focused on these critical facilities.

The Need for ICS Experts

Figure 2 characterizes the relationship of the different types of special technical skills and certifications needed for ICS cybersecurity expertise and the relative quantities of each at work in the industry today. Most people now becoming involved with ICS cybersecurity come from a mainstream IT security background rather than an ICS background. This trend is certainly being accelerated by the smart grid initiatives, where the apparent lines between IT and ICS are blurring. Many of the entities responsible for control system cybersecurity, including industry, equipment suppliers, and government personnel, do not fully appreciate the difficulties created by this trend.

As can be seen in Figure 2, IT encompasses a large realm, but it does not include control system processes. The arrows indicate that most people coming into the ICS security domain (from academia and the workforce) are coming from an IT background. This needs to change. It does not take “rocket science” to compromise an ICS; however, it does require multiple, specialized skills to adequately protect an ICS while ensuring that it can perform its functions—which is what an ICS security expert does. Arguably, there are fewer than several hundred people worldwide who fit into the tiny dot labeled ICS security experts.

2. Why so few experts? The number of people with ICS cybersecurity expertise is much smaller than the number of IT experts because the level of expertise required is much higher. Source: Applied Control Solutions

There are many explanations for this imbalance. First, there are simply more trained IT security personnel than ICS security personnel. However, now that some money is available for securing critical infrastructure, that may soon change.

Also, recall the old adage: “To a carpenter, everything looks like a nail.” As ICS systems get more of an IT look, IT people view them as IT systems and believe they are qualified to work on ICSs.

Finally, there is often little funding for training ICS personnel in cybersecurity, as plant operations managers often do not view this area as within their purview.

Together, the result is many more IT-trained security personnel than ICS-trained ones. The timing is ripe for the academic community to address the need to educate more ICS technologists, researchers, and real experts.

Recognizing the specialized nature of ICS cybersecurity is also necessary to foster needed research and development in the field. The ICS community understands what is required to perform a root-cause analysis of an incident and has developed detailed forensics for physical parameters such as temperature, pressure, level, flow, motor speed, current, and voltage. However, the legacy/field device portions of an ICS have minimal to no cyber forensic capability. This area is ripe for research and development to determine what specific types of forensics are needed and how they would be performed in the most non-invasive manner.

ICS Cybersecurity Incidents Have Happened

To date, I have been able to document more than 225 actual control system cyber incidents globally in electric power, water/wastewater, chemicals, pipelines, manufacturing, transportation, and other major infrastructures. Even though most of these incidents were not malicious, they caused considerable problems. Moreover, most of the unintentional events could easily have been instigated maliciously. The impacts of these events range from trivial effects to significant environmental discharges, significant equipment damage, major electric outages, and deaths.

The following three case studies describe ICS cyber incidents that could just as easily have been caused by a system cyber attack (see the sidebar “Tip of the Iceberg”).

Fossil Plant Cycling Event. A paper was presented at the 2006 ISA Power Industry Conference in San Jose, Calif., entitled, “Optimizing Turbine Life Cycle Usage and Maximizing Ramp Rate.” Its purpose was to demonstrate how the Lower Colorado River Authority and its distributed control system (DCS) supplier were able to increase the ramp rate at the Sim Gideon gas-fired power plant to meet dispatch requirements (Figure 3). (See “Old Plant, New Mission,” in the June 2007 issue of POWER or in the archives at https://www.powermag.com.)

3. High-stress job. Lower Colorado River Authority’s Sim Gideon power plant overstressed a steam turbine when an unexpected external signal cycled the steam turbine multiple times. Courtesy: LCRA

This project was motivated by a cyber incident. According to the paper, in April 2004, incorrect dispatching instructions were sent to the unit, and that unit alone carried out all the utility’s dispatch obligations for over 3 hours. The unit was ramped back and forth across its full load range at the maximum ramp rate.

The DCS effectively maintained the control variables within constraints. However, the utility’s engineering analysis of turbine metal temperatures determined that the rotor was subjected to significant stress. The GE SALI curves indicated that the cyclic life curve corresponding to 1,000 cycles was exceeded three times in that 3-hour period.

The event prompted strong concern about the impact of the fast dispatch on the turbine rotor cyclic life expenditure, so the maximum ramp rate was lowered to 18 MW/min (from 40 MW/min).

The event also uncovered the need for a first line of defense against erroneous dispatch instructions sent electronically to plant control systems that call for the unit to operate out of the design specifications of plant equipment. The reduction in ramp rate impacted the dispatch status of the unit and reduced revenue generation from the ancillary services market. The consequences of this event threatened to permanently damage the steam turbine and the unit’s viability to compete in the marketplace in the future with a less-than-competitive unit ramp rate.
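One way to picture such a first line of defense is a plant-side check that screens each incoming dispatch instruction before it reaches the unit controls. The sketch below is illustrative only and is not the approach LCRA or its DCS supplier took. The 18 MW/min ramp limit comes from the event described above; the load range, the reversal limit, the 180-minute window, and all class and function names are assumptions.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitLimits:
    min_load_mw: float          # assumed value, not from the article
    max_load_mw: float          # assumed value, not from the article
    max_ramp_mw_per_min: float  # 18 MW/min is the post-event limit cited above
    max_reversals: int          # assumed cap on load-direction changes
    window_min: float           # assumed rolling window for counting reversals


class DispatchGuard:
    """Screens dispatch instructions against static and cycling limits."""

    def __init__(self, limits: UnitLimits) -> None:
        self.limits = limits
        self._reversals = deque()  # times (minutes) of accepted direction changes
        self._last_direction = 0   # +1 ramping up, -1 ramping down, 0 unknown

    def check(self, now_min, current_mw, target_mw, minutes_allowed):
        """Return (accepted, reason) for one proposed load change."""
        lim = self.limits
        if not lim.min_load_mw <= target_mw <= lim.max_load_mw:
            return False, "target outside load range"
        if minutes_allowed <= 0:
            return False, "no time allowed for the move"
        if abs(target_mw - current_mw) / minutes_allowed > lim.max_ramp_mw_per_min:
            return False, "ramp rate exceeds limit"

        direction = (target_mw > current_mw) - (target_mw < current_mw)
        # Forget reversals that have aged out of the rolling window.
        while self._reversals and now_min - self._reversals[0] > lim.window_min:
            self._reversals.popleft()
        is_reversal = (
            direction != 0
            and self._last_direction != 0
            and direction != self._last_direction
        )
        if is_reversal and len(self._reversals) >= lim.max_reversals:
            return False, "too many load reversals in window"
        if is_reversal:
            self._reversals.append(now_min)
        if direction != 0:
            self._last_direction = direction
        return True, "ok"


limits = UnitLimits(min_load_mw=100, max_load_mw=340, max_ramp_mw_per_min=18,
                    max_reversals=2, window_min=180)
guard = DispatchGuard(limits)
print(guard.check(0, 150, 330, 5))    # asks for 36 MW/min
print(guard.check(0, 150, 330, 10))   # asks for 18 MW/min
```

The reversal counter is included because, in the event described, the unit was cycled repeatedly across its load range; a ramp-rate check alone would pass each individual instruction that stayed within the allowed rate.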

This unintentional cyber incident could have affected all plants in the region that were being dispatched by the system operator. There were no alarms, as the boiler controls were kept within limits and the steam turbine was not instrumented to alarm when operated outside of its performance envelope.

In this case, the SCADA system was the threat to the plant and could have caused catastrophic failures with potential loss of life.

Browns Ferry Nuclear Plant Broadcast Storm. On August 19, 2006, operators at Tennessee Valley Authority’s (TVA) Browns Ferry Unit 3 manually scrammed (shut down) the nuclear power plant following a loss of both main reactor recirculation pumps (Figure 4). The initial investigation into the dual pump trip found that the recirculation pump variable frequency drive (VFD) controllers were nonresponsive. TVA determined the root cause of the event was a malfunction of the VFD controllers because of excessive traffic on the plant’s integrated computer systems network, an event known as a “broadcast storm.”

4. Storm warning. TVA’s Browns Ferry Nuclear Plant experienced a “broadcast storm” of too much traffic on its integrated computer systems network that caused a malfunction of the reactor recirculation pumps and, consequently, a Unit 3 shutdown. Courtesy: TVA

The Browns Ferry Unit 3 broadcast storm event was documented in Nuclear Regulatory Commission (NRC) Information Notice 2007-15, “Effects of the Ethernet-Based Non-Safety Related Controls on the Safe and Continued Operation of Nuclear Power Stations,” April 17, 2007.

The Browns Ferry Unit 3 broadcast storm is representative of many unintentional ICS cyber incidents that occur because of inadequate ICS design, inadequate ICS cybersecurity policies and procedures, and/or inappropriate testing. In this case, a bad electronic card, scanning of the ICS network, or communication problems due to the design could have caused the incident. This incident did not violate any IT cybersecurity policies, but the lack of ICS forensic capability made identification of the root cause of the failure impossible.
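As a rough illustration of the kind of network logging that could support root-cause analysis of such an event, the sketch below counts frame arrivals in a sliding time window and flags when the count exceeds a threshold. It is not a description of what TVA installed. The class name, the threshold of 5 frames, and the 1-second window are assumptions chosen to keep the example small.

```python
from collections import deque


class TrafficRateMonitor:
    """Flags when frames seen in a sliding time window exceed a threshold."""

    def __init__(self, max_frames: int, window_s: float) -> None:
        self.max_frames = max_frames
        self.window_s = window_s
        self._arrivals = deque()  # arrival times (seconds) inside the window

    def record(self, timestamp_s: float) -> bool:
        """Record one frame arrival; return True if the limit is exceeded."""
        self._arrivals.append(timestamp_s)
        # Drop arrivals that have aged out of the window.
        while self._arrivals and timestamp_s - self._arrivals[0] >= self.window_s:
            self._arrivals.popleft()
        return len(self._arrivals) > self.max_frames


# Assumed limits for illustration: more than 5 frames in any 1-second window.
monitor = TrafficRateMonitor(max_frames=5, window_s=1.0)

# Normal traffic: one frame every 0.5 s.
normal_flags = [monitor.record(i * 0.5) for i in range(10)]

# Storm: ten frames arriving 10 ms apart.
storm_flags = [monitor.record(10.0 + i * 0.01) for i in range(10)]

print(any(normal_flags), any(storm_flags))
```

A monitor like this only detects and timestamps abnormal traffic. Protecting a controller from the traffic itself would be a separate design measure, such as rate limiting at the switch or network segmentation.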

It should be noted that many non-nuclear facilities have experienced similar broadcast storms that have impacted the operation of power plants, refineries, and even energy management systems. However, there is no legal obligation to report these events to regulators, and few voluntarily report them.

Hatch Nuclear Plant Software Glitch. Southern Nuclear Operating Co.’s Hatch Unit 2 was operating at approximately 100% power on March 7, 2008, when all seven Unit 2 condensate demineralizers’ outlet valves closed at approximately the same time, resulting in a temporary loss of normal feedwater injection and automatic reactor shutdown (Figure 5).

5. Complex connections. A remote user made changes to software on the company’s business local area network that automatically updated Southern Co.’s Hatch Nuclear Plant’s operating software, causing Unit 2 to trip offline. Source: NRC

The reactor shutdown was later determined to be the result of an engineer testing a software change on the plant’s chemistry data acquisition system (CDAS) server from a computer attached to the business local area network (LAN). The vendor software automatically synchronized changed data tag values between the server and the local control system vendor software. The test application sent data to a dedicated application server on the business LAN. This server was used in the CDAS to feed view-only data to users’ workstations on the business LAN. However, the CDAS server used vendor software that communicated with the same vendor software running on the condensate demineralizers’ control PC. The CDAS server and local control computer were separated by a firewall.

The engineer did not realize that the vendor software automatically synchronizes data tags between connected computers running the software unless the data tags are written in a specific manner. When the local tag values in this vendor software on the CDAS server were updated by his code (not read-only, as they should have been), the changes were synchronized with the partnered vendor software running on the condensate demineralizer control PC. The updated values were subsequently sent to the PLC operating the demineralizers. Because the values written were zeros, the PLC switched to manual control with 0% flow demand. This action isolated the demineralizers, which resulted in a temporary loss of feedwater flow. An automatic low reactor water level scram was the result. Reactor water level was restored by the emergency safety systems.
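The failure path described above suggests a simple safeguard: screen tag updates before they are synchronized toward the control side, rejecting writes from the business LAN to control tags and rejecting values outside a plausible range. The following sketch illustrates that idea only. The tag names, limits, zone labels, and function names are hypothetical and do not reflect the vendor software involved at Hatch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagRule:
    writable_from_business_lan: bool
    min_value: float
    max_value: float


# Hypothetical tag names and limits, for illustration only.
TAG_RULES = {
    "demin.flow_demand_pct": TagRule(False, 5.0, 100.0),
    "cdas.display_refresh_s": TagRule(True, 1.0, 60.0),
}


def filter_sync(updates, source_zone, rules=TAG_RULES):
    """Split proposed tag updates into (accepted, rejected-with-reason)."""
    accepted, rejected = {}, {}
    for tag, value in updates.items():
        rule = rules.get(tag)
        if rule is None:
            rejected[tag] = "unknown tag"
        elif source_zone == "business" and not rule.writable_from_business_lan:
            rejected[tag] = "read-only from business LAN"
        elif not rule.min_value <= value <= rule.max_value:
            rejected[tag] = "value out of range"
        else:
            accepted[tag] = value
    return accepted, rejected


# A test application on the business LAN tries to write zeros to a control tag.
accepted, rejected = filter_sync(
    {"demin.flow_demand_pct": 0.0, "cdas.display_refresh_s": 5.0},
    source_zone="business",
)
print(accepted)
print(rejected)
```

Defaulting to rejection for unknown tags is a deliberate choice in this sketch: a tag that nobody has classified is treated as not synchronizable until someone decides otherwise.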

As a result of this incident, the network connections between the condensate demineralizer control computers on both Hatch units and the CDAS server were physically removed. Other plant control systems were evaluated for similar interconnections. Only one similar interconnection was found, located between the Mark VI turbine control network and the safety parameters display system network on Unit 1. This connection was also physically removed.

The system design was also such that any rebooting that would reinitialize the system would cause the same failure, including software patch installs.

Note that the software failure did not violate an IT security policy, and the action could have been done maliciously. In fact, similar failures have occurred at another nuclear facility and at non-nuclear facilities, including one case at a nuclear plant in which the software failure impacted the safety systems.

Another similar case occurred recently in which control system logic was lost in all of a two-unit coal-fired plant’s DCS processors for approximately 3 to 4 hours. Only the presence of hard-wired analog safety systems prevented a disaster.

These and other case studies about cybersecurity breaches will be discussed at the upcoming 12th annual Industrial Control System Cyber Security Conference (see the first sidebar in this article).

Joe Weiss, PE, CISM, CRISC, ISA Fellow, and IEEE Senior Member, is the principal of Applied Control Solutions and the author of Protecting Industrial Control Systems from Electronic Threats, published by Momentum Press. Follow Weiss’ “Unfettered Blog” at community.controlglobal.com/unfettered for the inside story of the latest cybersecurity news.