Distributed Control Technology: From Progress to Possibilities

The past decade has seen an explosion of technology that has significantly altered the process control industry. The adoption of commercially available technology driven by desktop computing has allowed suppliers to focus on applications to enhance the process and deliver ever-greater value to the user.

Ten years ago at the 1998 ISA Power Industry Division symposium, several papers were presented that reviewed then-state-of-the-art developments in distributed control systems (DCS) technology. Those developments included the emerging trend to incorporate greater amounts of commercial off-the-shelf (COTS) technology into what had traditionally been highly proprietary, vendor-specific architectures. Specifically, those COTS components found in the desktop computing industry included personal computers (PCs) for DCS controllers and workstations, as well as commercially available networking technology such as Ethernet and fiber distributed data interface (FDDI).

New Designs Emerge

Although the DCS platform is sure to continue evolving to track the desktop computing industry, the significant developments will be in the ability to apply more-sophisticated applications that take advantage of the ever-increasing speed, power, and flexibility those platforms will provide.

We also have seen the emergence of control system technology that widely incorporates elements of conventional desktop computing technology. From operator workstations to process controllers, networks, and various operating system elements, the process control industry has embraced standard desktop computing and adapted its technologies to the unique needs of industrial control applications. DCS technologies will continue to expand in capability through the incorporation of "open system" technologies.

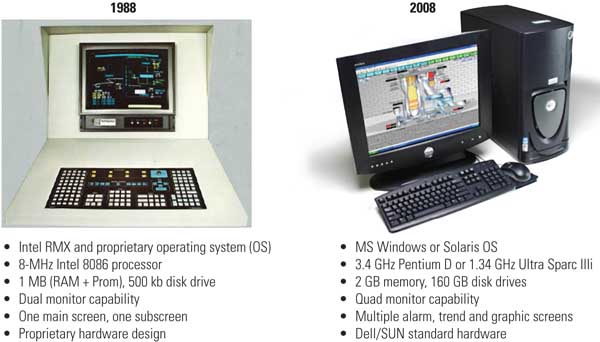

The first move in this trend came in the early 1990s with the gradual incorporation of UNIX workstations and, to a lesser degree, PCs for human-machine interface (HMI) functions. Though some users were initially leery of applying these COTS technologies in mission-critical control applications, they gradually gained acceptance (Figure 1).

Figure 1. Pushing the limits. The processor speeds of human-machine interfaces have increased by a factor of 425, and memory has increased by more than a factor of 1,000, over the past 20 years. Source: Emerson Power & Water Solutions

Through the 1990s, as computing power, speed, and reliability in both UNIX and PC technology increased at geometric rates, users increasingly embraced COTS desktop devices for HMI functions instead of proprietary vendor-specific HMIs. Whereas a decade ago the UNIX workstation was the most common choice, primarily due to the perception that it had a more robust operating system, today the vast majority of users are opting for the more familiar Windows PC for HMI applications.
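To put "geometric rates" in concrete terms, the 20-year factors quoted in the Figure 1 caption can be converted into an equivalent yearly growth rate. The short Python sketch below assumes smooth, constant compounding between the two endpoints, which is a simplification because the source gives only the start and end values. The function names are illustrative.

```python
import math


def annual_growth_rate(total_factor: float, years: float) -> float:
    """Constant yearly growth rate that compounds to total_factor over years."""
    return total_factor ** (1.0 / years) - 1.0


def doubling_time(total_factor: float, years: float) -> float:
    """Years needed to double at that same constant rate."""
    return years * math.log(2.0) / math.log(total_factor)


# Figures from the Figure 1 caption: 20 years, x425 speed, x1,000 memory.
# Constant compounding is an assumption, not something the source states.
for label, factor in (("processor speed", 425.0), ("memory", 1000.0)):
    rate = annual_growth_rate(factor, 20.0)
    period = doubling_time(factor, 20.0)
    print(f"{label}: {rate:.1%} per year, doubling every {period:.1f} years")
```

Under that assumption, processor speed grew by roughly 35% per year and memory by at least 41% per year, which corresponds to a doubling about every two years.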

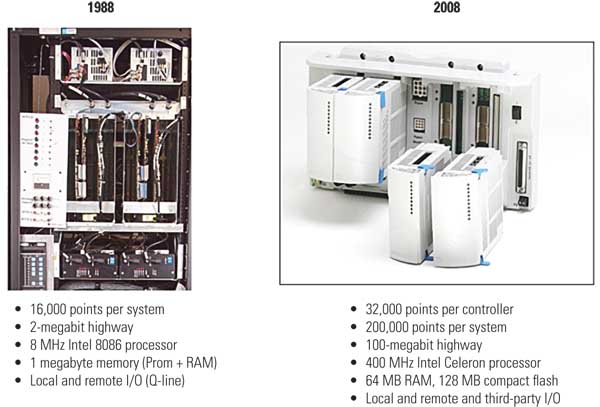

Also in the 1990s, the rapid growth in desktop computers’ microprocessor power and speed led to the next logical evolution in control technology. Control system suppliers adopted these developments and moved away from highly proprietary "unique" controllers and architectures. They began incorporating controllers utilizing PC architecture, albeit an architecture adapted to the redundancy, fail-safe operation, and environmental hardness demands of industrial control applications.

Although they are not strictly using COTS boards for controllers, DCS providers do use standard commercially available components and architectures — but on custom-designed boards to meet the demands of the industrial control environment. Since they were first introduced in the late 1990s, these "PC-based" controllers have been able to seamlessly track the more than tenfold increase in processor speed, offering system designers and users significantly more options than in the past.

The DCS network, or data highway, is the third area where commercially available technology has forever changed the process control industry. A decade ago, DCS data highways were highly proprietary architectures designed to facilitate communications only between DCS components from one specific vendor. There were no standard architectures; some highways were completely vendor-designed, while others were loosely based on standards, but those standards were unique to their particular system. Communications outside the highway were difficult and required custom data links to be developed, often at great expense.

Again from the desktop computer industry, two de facto communications standards arose: FDDI and Fast Ethernet. Both operated at 100 Mbps, 10 to 50 times faster than the proprietary DCS networks of the day. And though neither offered the deterministic features that most DCS networks provided, their speed and overall high level of reliability made them more than adequate for industrial control applications (Figure 2). They also opened the system more readily, making interconnection with third-party devices and corporate information networks far more practical than with a proprietary DCS network architecture. Over the past decade, the Fast Ethernet architecture has gained market dominance and has been joined by an even faster Gigabit Ethernet standard that has great applicability in multiple-network DCS architectures.

Figure 2. Much-improved performance. The processing speed of logic controllers has increased by a factor of 50 since 1988. Source: Emerson Power & Water Solutions

Many Possibilities

Today’s DCS technology not only performs its primary regulatory control function as well as or more reliably than its proprietary predecessor, but by incorporating commercially available technology, it also enables far greater flexibility.

Simulation is one example of this flexibility. In the past, if a user wanted a simulator as a training tool for operators, the only option was to acquire controllers and workstations identical to those employed in the plant's own system. Over time, with hardware upgrades or system expansions, the only way to keep the simulation realistic was to invest in duplicate hardware for the simulator. With the adoption of PC architecture for DCS controllers, it is now possible to create a virtual simulator, where the actual DCS application software can reside on a desktop PC and one PC can emulate up to 20 DCS controllers. This makes the simulator easier and less expensive to maintain, resulting in a far more flexible and valuable asset.

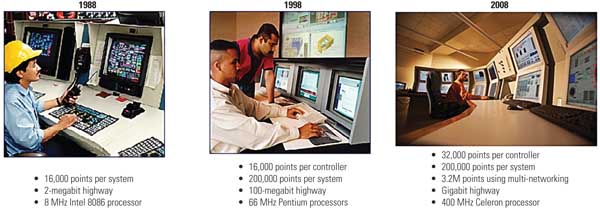

Along with the inherent flexibility of the modern DCS platform comes the vastly increased computing power of current computer technology, which offers a host of enhancements that have altered the nature and expectations of plant operations. Traditional functions such as process trending, alarming, logging, and historical data collection have become not only easier to accomplish but also easier to share beyond the control room, making the DCS an integral part of the corporate IT infrastructure. With enhanced data collection, management, and analysis capabilities inherent in a more-powerful platform, opportunities for process improvement within a unit, a plant, and even a fleet become easier to identify and to implement (Figure 3).

Figure 3. More zeros over time. The evolution of the DCS. Source: Emerson Power & Water Solutions

Smart Computing

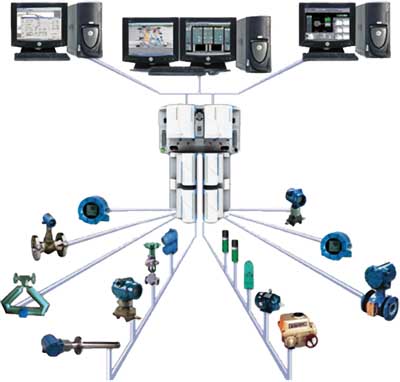

The DCS platform is not alone in capitalizing on the advancements driven by the desktop computing industry. Over the past decade, low-cost, yet powerful, microchips have become fully integrated components in field devices such as transmitters and actuators. Among other features, these "smart" devices can measure and report more than one variable from the process while also providing that data at much higher resolution than is possible with conventional field devices. In addition, they constantly perform self-diagnostics and report on their health, alerting operators to emerging problems before they affect the process.

These smart devices can exist on conventional 4-20 mA twisted pair, or on a fieldbus network that allows multiple devices to reside on a single digital communication bus, as opposed to the older home-run concept of "one device, one wire." Fieldbus architecture enables significant savings in wiring costs for new plants or new control areas over the high cost of traditional device wiring.
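As a rough illustration of the two wiring approaches, the Python sketch below converts a conventional 4-20 mA loop current into an engineering value using the standard linear scaling (4 mA is the bottom of the range, 20 mA the top) and compares cable-run counts for home-run and bus wiring. The function names, the 0-200 psi range, the 48-device count, and the 12-devices-per-segment figure are hypothetical values chosen for the example, not numbers from any particular fieldbus specification.

```python
import math


def loop_current_to_value(current_ma: float, low: float, high: float) -> float:
    """Map a 4-20 mA loop current linearly onto the range [low, high]."""
    if not 4.0 <= current_ma <= 20.0:
        raise ValueError(f"{current_ma} mA is outside the 4-20 mA signal span")
    fraction = (current_ma - 4.0) / 16.0  # 0.0 at 4 mA, 1.0 at 20 mA
    return low + fraction * (high - low)


def cable_runs(device_count: int, devices_per_segment: int = 1) -> int:
    """Cable runs back to the control system.

    devices_per_segment=1 models home-run wiring; larger values model a
    shared digital bus. The segment size is an assumed, illustrative figure.
    """
    return math.ceil(device_count / devices_per_segment)


# Hypothetical pressure transmitter ranged 0-200 psi, reading midscale.
print(loop_current_to_value(12.0, 0.0, 200.0))  # 100.0

# Hypothetical control area with 48 devices.
print(cable_runs(48), cable_runs(48, devices_per_segment=12))  # 48 4
```

The count alone understates the difference, since each home-run cable also needs its own termination and I/O channel, but it shows where the wiring savings come from.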

Smart devices can also be wireless. In the past several years, wireless field devices have proven themselves in a number of applications, where they enable the gathering of direct process measurements from remote locations without the expense of wiring.

A similar revolution is taking place in diagnostics technology, which was once reserved for major capital equipment. Today, the cost of diagnostic and monitoring devices such as heat or vibration monitors has decreased significantly, making it cost-effective to install them to closely monitor the performance and health of critical plant equipment and to identify negative trends before they affect operations.
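One simple way to "identify negative trends" in monitoring data is to fit a straight line to recent readings and flag the equipment when the slope exceeds a limit. The Python sketch below does this with an ordinary least-squares fit. It is a minimal illustration, not a description of any vendor's diagnostic algorithm, and the readings and the slope limit are made-up values.

```python
def trend_slope(readings: list[float]) -> float:
    """Least-squares slope of evenly spaced readings, in units per sample."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings")
    mean_x = (n - 1) / 2.0
    mean_y = sum(readings) / n
    numerator = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(readings))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def is_degrading(readings: list[float], slope_limit: float) -> bool:
    """True when the fitted trend rises faster than slope_limit per sample."""
    return trend_slope(readings) > slope_limit


# Hypothetical daily vibration readings (mm/s) from a pump bearing.
vibration = [2.0, 2.1, 2.2, 2.3, 2.4]
print(is_degrading(vibration, slope_limit=0.05))  # True
```

Fitting a trend rather than alarming on a single high reading is what allows a problem to be flagged while every individual value is still within its normal limit.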

The additional wealth of data from field sensors, actuators, and diagnostic equipment leads to another significant development: plantwide asset management systems as an integral component of the DCS architecture. This plantwide asset management concept goes beyond the traditional DCS status alarm concept and allows for detailed and coordinated analysis of plant assets and operations, permitting a proactive, not just reactive, response to plant conditions (Figure 4).

Figure 4. Emerging trends. DCS technology will continue to evolve in response to technology advances such as integrated simulation, high-performance digital bus architecture, wireless applications, cyber-security concerns, and more-capable and robust software applications. Source: Emerson Power & Water Solutions

Another trend that has emerged in the past decade, and that will grow in importance as a rich stream of data becomes available, is intelligent process optimization. Utilizing advanced mathematical techniques such as fuzzy logic, these "smart" applications seek to continuously track actual plant operating conditions, learn as they accumulate experience, and then adjust process setpoints to optimize production based on a defined goal. Such advanced techniques have already been successfully employed in a number of areas, such as NOx optimization, where they help utilities balance emissions against limits or credits available. Currently, even more advanced mathematical models are being applied that take optimization even further, including models that mimic biological responses, such as immune system response.
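To show the flavor of a fuzzy-logic setpoint adjustment, the Python sketch below grades an emissions error (measured value minus target) as "below," "on target," or "above" using triangular membership functions, then blends three rule outputs into a single setpoint trim. It is a toy example built on assumptions: the rule set, the span, the trim size, and the sign convention (trim the setpoint down when emissions are high) are all hypothetical, and it omits the learning step that the applications described above perform.

```python
def triangle(x: float, left: float, peak: float, right: float) -> float:
    """Triangular membership: 0 at or outside left/right, 1 at peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def setpoint_trim(error: float, span: float, max_trim: float) -> float:
    """Blend three fuzzy rules into one setpoint trim (weighted average)."""
    e = max(-span, min(span, error))  # clamp so at least one rule always fires
    below = triangle(e, -2 * span, -span, 0.0)
    on_target = triangle(e, -span, 0.0, span)
    above = triangle(e, 0.0, span, 2 * span)
    # Hypothetical rules: below -> raise setpoint, on target -> hold,
    # above -> lower setpoint.
    weighted = below * max_trim + on_target * 0.0 + above * (-max_trim)
    return weighted / (below + on_target + above)


# Hypothetical case: emissions 5 units over target, span 10, max trim 0.2.
print(setpoint_trim(5.0, span=10.0, max_trim=0.2))  # -0.1
```

With only three symmetric sets, the result is equivalent to a clamped proportional response. Practical fuzzy controllers use more sets, several inputs, and rules tuned to the process.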

Along with all the benefits and increased capability of open-system technologies come increased demands for managing those systems. Most significant among those demands is the requirement for increased attention to system security. Although the North American Electric Reliability Corp. Critical Infrastructure Protection standards provide a framework for system security efforts, it is vital that users and suppliers work together in implementing security programs that guard against both intentional and unintentional threats to system integrity.

–Robert Yeager is president of the Power & Water Solutions division of Emerson Process Management.