As AI demand accelerates, the race is on to bend the power curve before it bends the grid.

The first electric refrigerators were mechanical curiosities: loud, bulky appliances that consumed staggering amounts of electricity. But they spread anyway, because the productivity gains were too great to ignore. Daily habits shifted. Food systems were reshaped. Household labor changed forever. And even as adoption soared, the machines themselves kept evolving: better insulated, more efficient, and less environmentally harmful.

The industry’s turning point came in the 1970s, when the energy crisis helped force a wave of innovation that permanently altered the trajectory of refrigerator energy use. By the time Congress moved to mandate federal efficiency standards in 1978, efficiency was no longer a nice-to-have. It was existential. Roughly a century after the first electric models appeared, modern refrigerators use a fraction of the electricity of their predecessors, proof that breakthrough technologies don’t freeze in time. They mature. They reinvent themselves.

Something similar is now happening inside the world’s data centers, particularly the ones powering today’s artificial intelligence (AI) boom.

A New Kind of Always-On Infrastructure

Data centers, like refrigerators, became essential long before they became efficient. They are always on, everywhere, and increasingly impossible to live without. Even as early facilities ballooned in size and energy draw, the demand for digital services grew faster still.

But the past decade has shown that efficiency isn’t merely possible; it can be astonishing. Between 2010 and 2018, global data center electricity use rose by roughly 6%. Over the same period, computing workloads increased more than 550%. Put differently, the electricity needed per unit of computing work fell by more than 80%. Very few sectors compress that much growth into that little energy.

Today, the push is even stronger, driven by the compute demands of large language models and the hyperscalers building the infrastructure to run them. Google says its newest model-training techniques can cut energy use by as much as 100-fold compared with practices from just five years ago. The company’s latest Tensor Processing Unit (TPU), codenamed Ironwood, is nearly 30 times more power-efficient than its first Cloud TPU. And across its facilities, Google reports that its data centers now deliver about four times as much compute per unit of electricity as they did five years ago.

For an industry under scrutiny, the message is clear: efficiency isn’t an afterthought. It’s a competitive advantage.

A Growing Load, Even with Sharper Tools

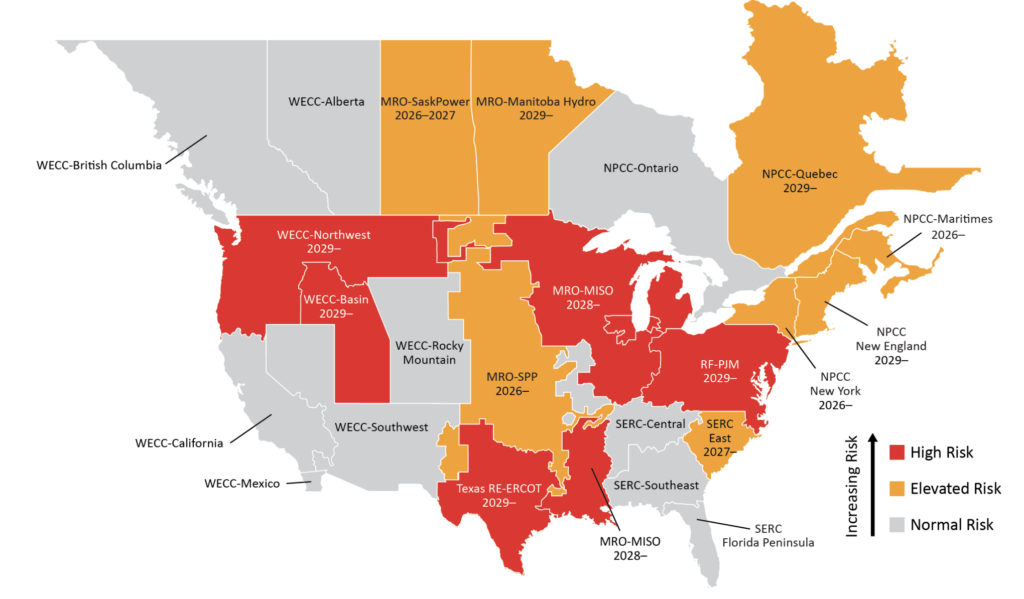

But efficiency doesn’t erase demand. Just as shrinking refrigerator wattage never slowed the spread of refrigeration, more efficient data centers won’t be enough to hold power demand steady. AI’s appetite for computation—and the value people hope to extract from it—is expanding fast. Several U.S. grid operators are now warning of demand curves that could rise faster than planned generation, forcing early conversations about grid expansion, new transmission, and reimagined interconnection processes.

For utilities, regulators, and developers, the question is no longer whether AI will reshape power systems. It’s whether the energy sector can keep pace.

This moment echoes the refrigerator’s inflection point: a technology too valuable to slow, but too energy-intensive to ignore. And once again, innovation is being reframed as a necessity, not an optional upgrade.

The Changing Role of the AI Data Center

One of the most significant emerging shifts is the realization that AI data centers need not function solely as passive energy consumers. Their computational flexibility can allow them to modulate workloads dynamically in response to real-time grid conditions. Many facilities are already equipped with on-site batteries and sophisticated control systems, assets that can deliver grid services, shift demand, and absorb excess renewable generation in ways most conventional loads cannot.

If those capabilities scale, AI data centers could function as flexible participants in the grid rather than unidirectional sinks of electricity. That flexibility could act as a pressure valve during periods of strain, or a stabilizer during periods of excess generation. It’s not a universal solution, but it’s a meaningful one.
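To make the idea of modulating workloads concrete, here is a minimal Python sketch built on loudly stated assumptions. It supposes a hypothetical hourly grid-stress signal between 0 and 1 (higher means a tighter grid) and a block of deferrable compute, such as a training job, measured in megawatt-hours. The `Hour` type and the `schedule_flexible_load` helper are illustrative names only, not any grid operator's or vendor's API. The sketch greedily places the flexible energy into the least-stressed hours, up to a per-hour power cap, which captures the basic logic of shifting demand away from periods of strain and toward periods of surplus.

```python
from dataclasses import dataclass


@dataclass
class Hour:
    """One hourly slot in a hypothetical scheduling window."""
    index: int
    grid_stress: float  # hypothetical signal: 0.0 = ample supply, 1.0 = grid under strain


def schedule_flexible_load(hours, flexible_mwh, max_mw_per_hour):
    """Greedily assign deferrable energy to the least-stressed hours.

    Each slot lasts one hour, so a power level in MW held for that slot
    consumes the same number of MWh. Returns {hour index: MW scheduled}.
    """
    plan = {h.index: 0.0 for h in hours}
    remaining = flexible_mwh
    # Visit hours from lowest to highest grid stress.
    for h in sorted(hours, key=lambda slot: slot.grid_stress):
        if remaining <= 0:
            break
        take = min(max_mw_per_hour, remaining)
        plan[h.index] = take
        remaining -= take
    if remaining > 1e-9:
        raise ValueError("window too short to place all flexible load")
    return plan


if __name__ == "__main__":
    window = [Hour(0, 0.9), Hour(1, 0.2), Hour(2, 0.5), Hour(3, 0.1)]
    # 25 MWh of deferrable compute, at most 10 MW in any hour.
    print(schedule_flexible_load(window, 25.0, 10.0))
    # -> {0: 0.0, 1: 10.0, 2: 5.0, 3: 10.0}
```

A greedy fill like this is the simplest possible policy. A real facility would also weigh job deadlines, ramp limits, the state of any on-site batteries, and the rules of whatever market or demand-response program it participates in.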

The next era of AI infrastructure may be defined as much by grid integration as by silicon innovation.

The Stakes Ahead

The refrigerator story offers a powerful reminder: efficiency gains compound. What starts as incremental engineering improvement can eventually transform an entire sector. But the analogy comes with a warning. Refrigerator adoption unfolded over decades, giving manufacturers and policymakers time to adjust. AI’s rise is happening over just a few years. The grid doesn’t have the luxury of moving slowly.

That doesn’t mean the future is bleak. It means the future depends on making the right bets today. Investments in cleaner generation, flexible loads, more efficient chips, and redesigned facilities will determine how far the grid can stretch to support the next wave of AI development.

Like refrigeration, AI compute is becoming foundational infrastructure. And as history shows, foundational technologies don’t just scale; they evolve. The challenge of this moment is to ensure they evolve fast enough to keep the grid resilient, the power affordable, and the benefits broadly shared.

The refrigerator teaches us that the arc of innovation bends toward efficiency. The question now is whether the AI industry, and the grid it depends on, can bend that arc before demand outpaces our ability to supply it.

—Sayo Folarin is a Mergers and Acquisitions Associate at The AES Corporation.