As artificial intelligence (AI) models and workloads continue to scale in size and sophistication, their hunger for processing power—and the energy that fuels it—is accelerating faster than any previous wave of digital innovation. The surge in compute demand is stretching capacity and driving up power consumption, while exposing the inefficiencies of legacy cloud and data center compute architectures. This presents one of the defining challenges of our time: how do we deliver the intelligence the world needs without outpacing the global energy transition?

COMMENTARY

Arm’s 2025 Sustainable Business report makes the case that sustainability, efficiency, and innovation are not separate goals but inseparable drivers of the next wave of compute. The path to sustainable AI is about intelligent innovation: making every cycle, every watt, and every connection count.

The conversation around AI is no longer about trade-offs between performance and energy use; it is about re-architecting compute, from cloud and data centers to energy systems and device designs.

Sustainability as a Driver of Innovation

Sustainability and technology innovation were once seen as separate ambitions, but the AI era has flipped this narrative. Efficient AI is viewed as a “catalyst for progress,” with power efficiency as the new frontier of competition that will accelerate AI growth and innovation.

There are growing opportunities to build a new generation of low-power, high-performance compute designed from the ground up. These designs do not just deliver incremental gains; they represent fundamental redesigns of how compute happens, from milliwatt-scale edge devices to megawatt-scale data centers.

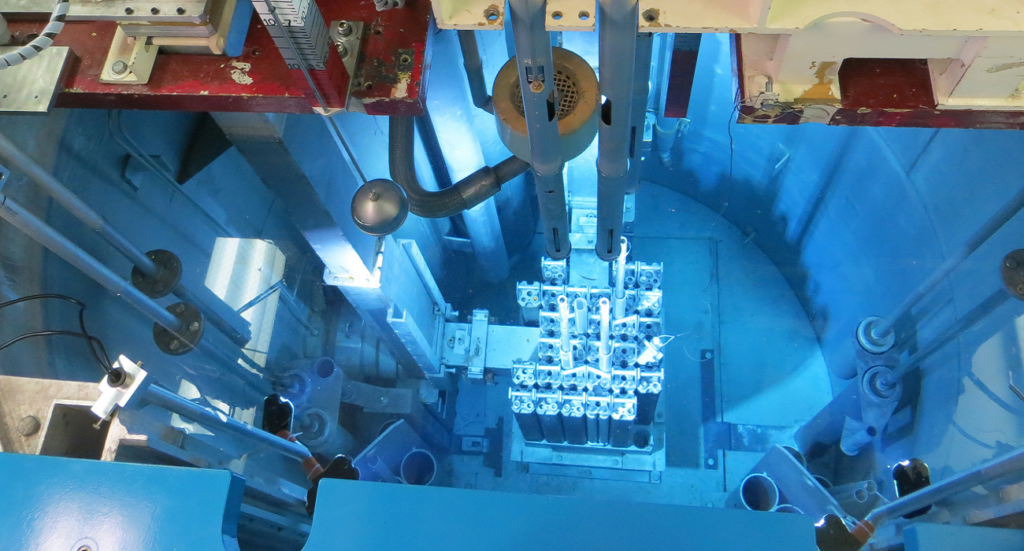

For example, at the edge, ultra-efficient processors power a variety of devices and systems, from smart cameras to industrial IoT, delivering on-device intelligence with minimal energy consumption. In the data center, meanwhile, new computing architectures are maximizing performance per watt.

Across the industry, the push to make AI more sustainable is driving breakthroughs in:

- Advanced chip design and low-power compute architectures that form the foundation of power-efficient AI processing.

- Adaptive workload management for smarter energy allocation and optimizations to reduce waste.

- System-level efficiencies across hardware, software, and data pipelines to ensure that every layer of the stack contributes to measurable energy savings.

- Circular design principles that extend hardware lifecycle and reuse value.

Such advances do not just reduce power consumption; they unlock new ways of delivering advanced intelligence.

The Strategic Role of Edge Computing

As the processing demands of the world’s data continue to grow, the need for advanced AI capabilities is not going away. Edge computing is one of the most promising ways to meet the energy requirements of AI’s growing demand. It does not replace cloud computing but complements it. While frontier models will continue to train in hyperscale data centers, inference can increasingly happen closer to where data is generated: in sensors, devices, and factories.

The shift to efficient edge computing reduces overall energy consumption compared with moving data to and from the cloud, while also providing faster response times, enhanced data privacy, and reduced network dependency. The result: more capable, more efficient AI, as well as a stronger foundation for national competitiveness and resilience.

However, no one company can meet the AI efficiency challenge alone. The scale of the challenge demands ecosystem collaboration among technology companies, governments, and research institutions. Joint approaches between industry and governments are recommended, in which initiatives such as the CHIPS and Science Act and the White House AI Action Plan support research that then feeds into industry’s development and deployment of efficient AI infrastructure.

The U.S. and other major economies are entering a new phase of competition where power efficiency equals strategic advantage. Efficient AI offers a path forward that can strengthen both innovation and energy security by potentially reducing the load on the national power grid, lowering operational costs for AI-driven industries, and enabling resilient, distributed intelligence across critical infrastructure.

Reframing AI’s Future

AI’s energy challenge is not a barrier to progress; it is the spark for a new generation of innovation. The industry is entering a new era of intelligent, efficient compute, where sustainability and competitiveness can reinforce each other to benefit business, society, and the planet.

From edge to hyperscale, and silicon to systems, the opportunity is clear: build AI that is as efficient as it is powerful. The next era of AI will not be measured only in model size or performance; it will be measured by how energy resources are used to power the future.

—Maureen McDonagh is Head of Sustainability at Arm.