A key aspect of any digitization effort is giving engineers and other experts easy access to data so they can perform advanced analytics to improve outcomes.

In the March 2018 issue of POWER, an article titled “Using Data Analytics to Improve Operations and Maintenance” notes that utility executives are “being bombarded with stories about other industries deriving value from big data.” As a result, C-level executives and plant managers are demanding and expecting more insights, faster, to drive improvements in plant operations and maintenance. The article says, “These improvements could be increased asset availability via predictive analytics, improving compliance through monitoring of key metrics, or simply greater productivity when accessing contextualized data as input into plans, models, and budgets.”

The question, though, is what this opportunity (digitization, analytics, and improved operational outcomes) actually looks like from a plant perspective. What does it mean in practical terms, and how will outcomes improve?

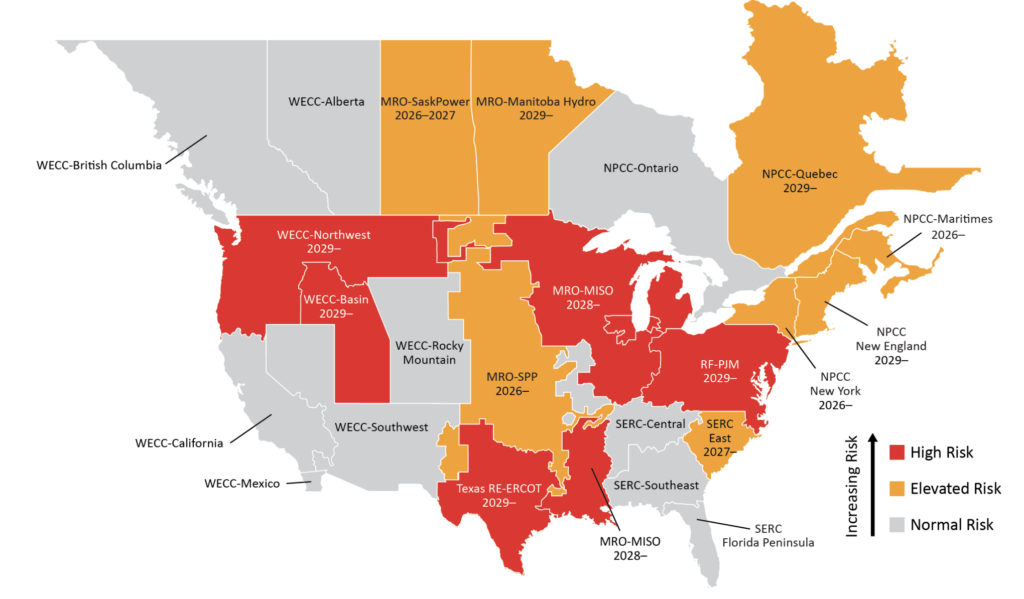

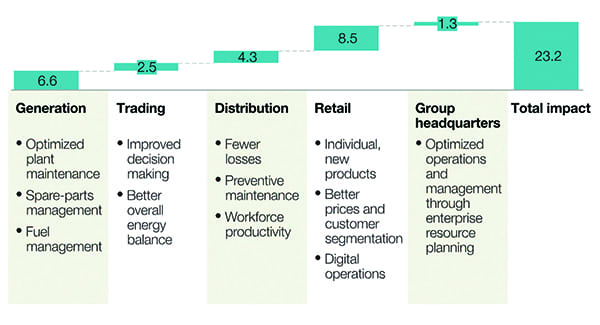

To set the stage for this article, consider a quote from a McKinsey & Company report titled The Digital Utility: New challenges, capabilities, and opportunities, published in June 2018. (McKinsey & Company is a management consulting firm with extensive analytics offerings for all types of process manufacturing industries, including power.) The report shows how digitization can positively impact electric utility earnings (Figure 1). Its introduction reads: “In 2010, the largest five companies in the world were Exxon, Apple, PetroChina, Shell, and ICBC [Industrial and Commercial Bank of China]. Today, it is Apple, Google, Microsoft, Amazon, and Facebook. There is no doubt that digital is fundamentally changing the world and reshaping how companies and society operate.”

Figure 1. Digitization has a demonstrable impact on utility earnings. This graphic shows the percentage increase in earnings before interest and taxes that researchers found during a McKinsey & Company case study. Courtesy: McKinsey & Company

One easy explanation for this shift among the most highly valued companies is the effect of the oil price shock on the valuations of large oil and gas firms. At the same time, the fact that companies born and grown digital now hold the title of most valued companies cannot be ignored. Clearly, the future is digital, and value creation will increasingly be defined by insights enabled by software.

An alternative view is that shareholders and customers, and even employees in the war for talented digital-native college graduates, will force power generation and distribution firms to embrace this transition. Either way, there will clearly be a new digital platform on which power generation and other companies will be compelled to operate their businesses.

There are many names for this new landscape (Industry 4.0, the Industrial Internet of Things [IIoT], Smart Manufacturing, Digital Factory, and others), but the common thread is digital technology, the same factor driving the valuations of the top companies. There is some irony here, because utilities were in the first wave of companies using sensors, data collection, and control systems to manage and improve their operations. There was a time when they were at the leading edge of innovation and automation technologies, which is why executives sometimes claim, “There is nothing new in IIoT; we’ve been doing it for years.” But the mantle of innovation has clearly passed from power generation to other leading industrial firms. The objective now must be to bring innovations from those industries back to power generation to improve operational excellence.

Starting Backward: End-Users in the Digital Utility

Look again at the list of most highly valued firms: they are all digital companies, but they also share a focus on end users. Their customers are consumers or office workers (businesses using Apple and Microsoft products, for example). For power generation firms, this means digitization efforts will start at the end points: the users on the front lines, that is, the engineers and experts in power generation firms.

We already see this in handheld devices, mobility support, and easier web-based solutions for engineers to work with data. Where did the wireless world, the push for ease of use in industrial settings, and web-based interfaces originate? In the consumer world, from cell phones and bring-your-own-device initiatives to the expectation that most of what consumers do on a computer is either web-based or runs as a smartphone app. Even online gaming will contribute to this future, with power plant staff using virtual and mixed-reality systems to visualize maintenance instructions as they work in the field.

These examples point to a digital utility organized from the bottom up to empower the employees closest to the assets, operations, and outcomes, informing and enabling them with a variety of consumer-led innovations that have reached mainstream use.

What’s Behind the Insights: Advanced Analytics

The second aspect of a digital utility is assistance and acceleration powered by advanced analytics innovations from consumer-minded companies such as Google and Amazon. Consumers don’t have to be programmers to use Google or data scientists to use Amazon Alexa, because these technologies are wrapped in easy-to-use experiences. Utility employees will get similar experiences with advanced analytics.

The key word is “assisted,” not replaced or automated, so an employee’s expertise and experience can be tapped and leveraged instead of ignored (Figure 2). There is a long and dismal history of attempts to use computer science innovations (neural networks, advanced process control, artificial intelligence) to automate the employee out of the analytics process. That approach hasn’t worked and won’t, because the specifics of and changes in assets, feedstocks, demand, and other factors in power plant environments require ongoing expertise from employees. The priority, therefore, must be accelerating employees’ ability to investigate, analyze, and act on digitized data.

Figure 2. Seeq is designed for self-service use by process engineers and experts, with no requirement for assistance from data scientists or other IT personnel. Courtesy: Seeq Corp.

This is the role of advanced analytics. Another McKinsey & Company article says, “Advanced analytics refers to the application of statistics and other mathematical tools to business data in order to assess and improve practices. In manufacturing, operations managers can use advanced analytics to take a deep dive into historical process data, identify patterns and relationships among discrete process steps and inputs, and then optimize the factors that prove to have the greatest effect on yield.”

Advanced analytics incorporates big data, machine learning, pattern recognition algorithms, and other software innovations to improve predictive, diagnostic (root cause), descriptive (reporting), and prescriptive (what to do) analytics. This clearly separates it from traditional “analytics,” a term so broad that it often refers simply to visualizing data sets, as on the screens operators view in a control room. Advanced analytics operates on another level, letting any engineer or expert easily analyze asset availability, yield optimization, regulatory compliance, and other key aspects of power production facilities. The result is insights found sooner and acted on more quickly to deliver improved outcomes.

Digital: Context Required

With employees and advanced analytics in place, the final step toward a digitally transformed operation is giving analytics users something to work on: the data. Today the word most often paired with “data” in many industrial settings is “silos.” The data is there, and there is a lot of it (which is why most companies are data rich and information poor), but it is spread across a variety of systems, with no clear way to standardize it or access it easily.

Asset and supervisory control and data acquisition (SCADA) data reside in the historian, maintenance data in the enterprise asset management (EAM) or computerized maintenance management system (CMMS), pricing data in other systems, and human resources records in an enterprise resource planning (ERP) system. If a plant wants to compare shift performance on key metrics, for example, someone typically has to pull data from several of these systems to see whether there is a correlation between which employees are working at any one time and outcomes.
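As a minimal sketch of the shift-comparison example, the Python below joins hypothetical historian samples (net heat rate, in Btu/kWh) with a hypothetical crew roster, as it might be exported from an HR or ERP system, and averages heat rate per crew. The data, crew names, and function names are illustrative assumptions, not any vendor’s schema or API; a real version would query the historian and roster systems instead of using in-memory lists.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical historian samples: (timestamp, net heat rate in Btu/kWh)
historian = [
    (datetime(2018, 6, 1, 2, 0), 10150.0),
    (datetime(2018, 6, 1, 8, 0), 10250.0),
    (datetime(2018, 6, 1, 14, 0), 10400.0),
    (datetime(2018, 6, 1, 20, 0), 10300.0),
]

# Hypothetical roster exported from an HR/ERP system:
# crew name -> list of (start, end) intervals on duty, end exclusive
roster = {
    "Crew A": [(datetime(2018, 6, 1, 0, 0), datetime(2018, 6, 1, 12, 0))],
    "Crew B": [(datetime(2018, 6, 1, 12, 0), datetime(2018, 6, 2, 0, 0))],
}

def crew_on_duty(ts, roster):
    """Return the crew whose shift covers timestamp `ts`, or None."""
    for crew, intervals in roster.items():
        for start, end in intervals:
            if start <= ts < end:
                return crew
    return None

def heat_rate_by_crew(historian, roster):
    """Average historian values per crew (lower heat rate is better)."""
    samples = defaultdict(list)
    for ts, value in historian:
        crew = crew_on_duty(ts, roster)
        if crew is not None:  # skip samples outside any recorded shift
            samples[crew].append(value)
    return {crew: mean(values) for crew, values in samples.items()}

if __name__ == "__main__":
    print(heat_rate_by_crew(historian, roster))
    # {'Crew A': 10200.0, 'Crew B': 10350.0}
```

A correlation found this way is only a starting point; differences in load, ambient conditions, or fuel between shifts would need to be ruled out before attributing the gap to the crews themselves.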

Each of these data sources has its own user interface, such as a trending tool for a historian, but a new level of digital performance requires the ability to see interactions among the data sources. “Data lakes,” which aggregate data of different types in one centralized repository, and “digital twins,” which allow testing and experimenting on an aggregated view of an asset, are two approaches to opening data up for investigation and analysis.

The key challenge in enabling access to data is balancing widespread, easy access against appropriate measures to ensure security and prevent cyber intrusion. The security and access controls that cyber threats demand can be an obstacle to analytics, and the details of enabling access while protecting infrastructure are well beyond the scope of this article.

Suffice it to say that an approach must be taken to give engineers and experts easy access to the subsets of data they need for analysis to improve outcomes. For example, optimizing maintenance programs for cost and asset uptime is a multi-factor problem that requires access to multiple data sets.

Predictive Maintenance Improves Performance

The following specific examples show these elements working together to improve outcomes.

A power plant created a model with Seeq, an advanced analytics application for engineers, and can now identify declining performance in a specific asset and decide when to perform maintenance by weighing the reduced asset performance against the market value of the plant’s output. This digitization effort doesn’t just optimize for a process parameter or other metric, but instead makes real-time profitability the priority outcome, in effect the setpoint for a control loop. This type of asset optimization is applicable to a wide range of equipment.
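A simplified way to picture this trade-off is to compute, for each possible start hour, the revenue forgone and pick the cheapest. The sketch below assumes an hourly price forecast, a fixed capacity loss (derate) while the asset is degraded, a fixed outage length for the repair, and that the repair must happen somewhere inside the forecast window. It is a hypothetical illustration of the reasoning, not the plant’s actual Seeq model; all names and numbers are assumptions, and the repair cost itself is treated as the same whatever the start time.

```python
# Hypothetical hourly price forecast in $/MWh for the next six hours
prices = [50, 60, 20, 15, 70, 80]

def lost_revenue(start, prices, derate_mw, capacity_mw, outage_hours):
    """Revenue forgone within the horizon if maintenance starts at hour `start`.

    Before `start`, the degraded asset loses `derate_mw` of output each hour.
    During the outage, the full `capacity_mw` is lost. Afterward the asset is
    assumed restored, so no further loss is counted.
    """
    degraded_loss = sum(prices[:start]) * derate_mw
    outage_loss = sum(prices[start:start + outage_hours]) * capacity_mw
    return degraded_loss + outage_loss

def best_maintenance_start(prices, derate_mw, capacity_mw, outage_hours):
    """Start hour that minimizes forgone revenue, assuming the repair must
    fit entirely inside the forecast horizon (deferral past it is not modeled)."""
    candidates = range(len(prices) - outage_hours + 1)
    return min(
        candidates,
        key=lambda s: lost_revenue(s, prices, derate_mw, capacity_mw, outage_hours),
    )

if __name__ == "__main__":
    start = best_maintenance_start(prices, derate_mw=10, capacity_mw=400, outage_hours=2)
    print(start, lost_revenue(start, prices, 10, 400, 2))  # 2 15100
```

With these illustrative numbers, the sketch places the two-hour outage in the two cheapest hours ($20 and $15/MWh) and accepts $1,100 of derate losses beforehand, rather than taking the full 400 MW offline while prices are high.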

In another power plant, operators knew their feedwater heaters tended to foul and lose efficiency in a predictable manner, but their efforts to quantify the process so maintenance could be optimized had never succeeded. Seeq analysis of the corresponding boiler’s heat rate data showed what effect a cleaning effort had on efficiency, down to its specific value (Figure 3). Operators now optimize cleaning frequency based on this cost/benefit relationship.
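One textbook way to turn such a cost/benefit relationship into a cleaning schedule, assuming fouling raises heat rate roughly linearly between cleanings, is the classic deterministic maintenance-interval model: if the extra fuel cost grows as a·t dollars per day and each cleaning costs C, the average daily cost over a cycle of T days is C/T + aT/2, which is minimized at T* = √(2C/a). The Python sketch below estimates the fouling slope by least squares and applies that formula. The linear-fouling assumption, the readings, the unit size, fuel price, cleaning cost, and function names are all hypothetical, and the source does not say this is how the plant’s analysis was done.

```python
import math

def fouling_slope(days, heat_rates):
    """Least-squares slope of heat rate versus days since cleaning (Btu/kWh per day)."""
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(heat_rates) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, heat_rates))
    sxx = sum((x - mean_x) ** 2 for x in days)
    return sxy / sxx

def optimal_cleaning_interval(slope, kwh_per_day, fuel_price_per_mmbtu, cleaning_cost):
    """Cleaning interval in days that minimizes average daily cost.

    Assumes the heat rate penalty grows linearly with time since cleaning,
    so the extra fuel cost on day t is a * t dollars.
    """
    # Btu/kWh per day * kWh/day * $/MMBtu / 1e6 Btu per MMBtu -> $/day per day
    a = slope * kwh_per_day * fuel_price_per_mmbtu / 1_000_000
    # Average daily cost over a T-day cycle is C/T + a*T/2, minimized at sqrt(2C/a)
    return math.sqrt(2 * cleaning_cost / a)

# Hypothetical heat rate readings (Btu/kWh) at days since the last cleaning
days = [0, 10, 20, 30]
heat_rates = [9800, 9810, 9820, 9830]

if __name__ == "__main__":
    s = fouling_slope(days, heat_rates)
    # Hypothetical 500-MW unit at full load: 12,000 MWh/day = 12,000,000 kWh/day
    interval = optimal_cleaning_interval(s, 12_000_000, 3.0, 50_000)
    print(round(s, 2), round(interval, 1))  # 1.0 52.7
```

With a fouling slope of 1 Btu/kWh per day, 12,000 MWh/day of generation, fuel at $3/MMBtu, and a $50,000 cleaning cost, the sketch suggests cleaning roughly every 53 days. If real data showed nonlinear fouling, the closed-form result would no longer apply and the interval would have to be found numerically.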

Figure 3. Seeq was used to analyze the declining performance of a feedwater heater and to make decisions about the timing of maintenance. Courtesy: Seeq Corp.

These types of efforts use return on investment (ROI) as the controlling variable and can result in delaying maintenance to continue profitable production over the coming weeks or months. In other cases, it can mean taking the asset offline immediately to perform repairs. It all depends on the ROI analysis, driven by advanced analytics software.

It Starts at the Top

A digital and software-centric approach to innovation in power generation requires more than technology; it also requires executive support to establish an analytics culture. That culture creates an environment in which empowered engineers can improve outcomes by using innovative software to access multiple data sources.

This is a non-trivial requirement and the gating element of many analytics initiatives. As the legendary management consultant Peter Drucker is famously credited with saying, “Culture eats strategy for breakfast.” In practice, this means any digitization effort will fail without sufficient support at the highest levels of management.

The McKinsey & Company report provides an example of how Pacific Gas and Electric (PG&E) addressed this issue to foster innovation. The report says, “PG&E set up a digital center of excellence, which it called Digital Catalyst. This group, for example, sent digital specialists to shadow electricity and gas field workers for hundreds of hours to uncover ways of aiding their work. That method, based on design thinking, led the Digital Catalyst team to create a mobile app to help field crews complete asset inspections more efficiently and safely by furnishing them with real-time information.”

The future for utilities is the intelligent use of digital infrastructure and advanced analytics to enable improvements in the reliable, secure, and efficient delivery of power to their customers. These two initiatives must be enthusiastically supported by upper management, allowing full-fledged implementation at all levels of the company. ■

—Michael Risse is the chief marketing officer and vice president at Seeq Corp., a company building innovative productivity applications for engineers and analysts that accelerate insights into industrial process data.