Predictive maintenance (PdM) is transforming power plant operations by leveraging artificial intelligence (AI), data analytics, and automation to prevent costly equipment failures. Learn about real-world applications, challenges in adoption, and the significant cost savings achieved through advanced monitoring and proactive maintenance strategies.

In an era where power outages can ripple through communities and industries, causing significant economic impact and disrupting essential services, the reliability of power generation equipment has never been more crucial. Yet, across the fleet of aging power plants worldwide, maintenance teams are caught in a delicate balance between preventing catastrophic failures and avoiding unnecessary downtime for inspections and repairs. This is where predictive maintenance (PdM) is revolutionizing the industry’s approach to equipment health.

Gone are the days when power plant operators relied solely on scheduled maintenance intervals and reactive repairs. Today’s PdM technologies harness the power of sensors, data analytics, and artificial intelligence (AI) to detect potential failures weeks or even months before they occur. For an industry where unplanned turbine downtime can cost hundreds of thousands of dollars in lost generation, this shift from reactive to predictive maintenance isn’t just an operational improvement; it’s a competitive necessity.

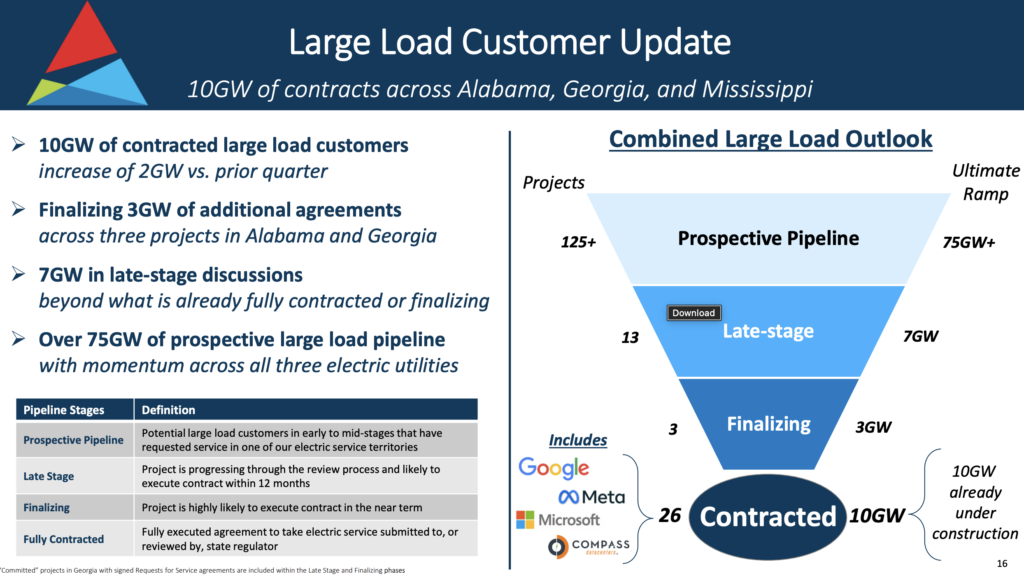

Consider this: at any given moment, a typical combined cycle power plant (Figure 1) has thousands of critical components operating under extreme temperatures and pressures. Each bearing, blade, and valve tells its own story through vibration patterns, temperature fluctuations, and performance metrics. By listening to these mechanical whispers and interpreting them through advanced analytics, maintenance teams can now predict and prevent failures with unprecedented accuracy.

1. A modern combined cycle power plant has literally thousands of sensors installed to monitor temperatures, pressures, flows, levels, vibrations, water quality, and more. Data from these sensors is vitally important to predictive maintenance programs. Source: Envato

Combining the Right Tool with the Right Expertise

Orangeville, Utah–based Taber International is an engineering and automation consulting group that offers fully customized solutions in smart automation, optimization, data visualization, and process control. It works with existing hardware and systems, and also deploys new systems from the ground up, providing end-to-end software platforms.

The company was founded in 2006, and initially focused on combustion optimization and intelligent sootblowing applications for coal-fired electric utility generators. Over the years, it expanded its scope to include ancillary systems in thermal power plants and diversified into other industries including chemicals, manufacturing, water and wastewater, oil and gas, and others.

Taber has deployed applications within the Griffin AI Toolkit, a no-code interface and companion rapid prototyping run-time engine, which provides an excellent platform for improving maintenance practices. With these tools and Taber’s help, many power plants have replaced time-interval or “gut-feel” based maintenance approaches with condition-based solutions.

“Taber’s expertise in the power industry combined with the capabilities of the Griffin AI Toolkit provide insight into a wide range of conditions,” Jake Tuttle, CEO of Taber International, told POWER. “We’ve had success in recognizing condenser and heat exchanger fouling throughout the fouling process, keying into subtle performance degradation during the early stages of formation, allowing for minor corrective actions to be taken at much lower cost.”

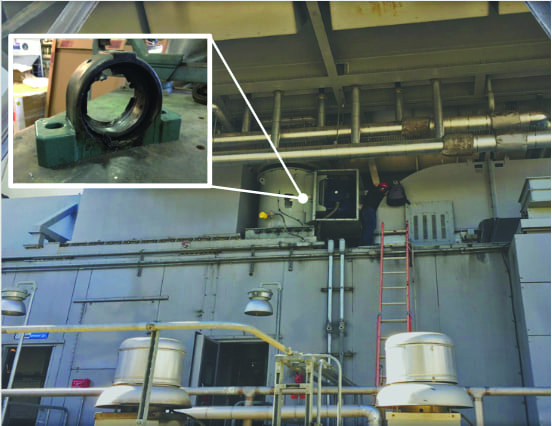

In other cases, Tuttle said the company has been able to recognize the immediate onset of tube leaks in steam power plants before acoustic monitoring or other systems detected them. One such example occurred in a fossil power plant during load ramping. During one ramp from minimum to maximum load, the system recognized a change in operating state once the unit reached full load. No other systems reported any change. Eight hours later, the tube failed massively, forcing the unit offline immediately. Other conditions the system has identified include bearing fatigue in motors, sensor calibration and measurement errors, fan performance deterioration, oil and lubricant leaks, equipment filter pluggage, general performance degradation, mechanical stress failures, and more.

Challenges to Implementation

Yet, as game-changing as Taber’s technology is, it can be difficult to get a team of power plant managers, engineers, operators, and technicians to move beyond past practices. “The biggest customer challenges—or more accurately, perceived challenges—are usually their own available resources and trust,” said Tuttle. “Most customers feel they don’t have the personnel and the time to support development of a predictive maintenance program. There’s an assumption it takes multiple people multiple months or even years to get a good, reliable program in place. Customers become daunted when they realize just how much equipment and the scale of maintenance efforts they have at a site. On the trust side, it’s hard to break tradition and rely on a new system to perform on ‘your’ system, no matter how well it’s worked elsewhere.”

Among the other obstacles customers sometimes bring up is the fear of false alarms. “Many existing programs have experienced the situation where an alarm is always coming up that isn’t real or isn’t a concern, and the alarm just gets shelved or personnel become desensitized,” Tuttle explained. “Nobody wants to add to the list of alarms, especially if that’s going to add to the desensitization problem. It’s critical to avoid alarms being activated just because a threshold is crossed, but that alarms be verified by the behavior of the current conditions of the process, which is something we take major steps to achieve through customization and specific element-by-element deployment of the PdM program.”
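One simple way to picture the difference between a bare threshold alarm and a verified one is a persistence check, where a notification is raised only when the excursion is sustained. The Python sketch below is an illustration under that assumption and is not Taber’s method; the function name `confirmed_alarms` and the `window` and `min_hits` parameters are hypothetical.

```python
from collections import deque
from typing import Iterable, List


def confirmed_alarms(values: Iterable[float], threshold: float,
                     window: int = 5, min_hits: int = 4) -> List[bool]:
    """Flag a sample only when at least `min_hits` of the last `window`
    samples exceed `threshold`, so an isolated spike raises no alarm."""
    recent = deque(maxlen=window)  # rolling record of threshold crossings
    flags = []
    for value in values:
        recent.append(value > threshold)
        flags.append(sum(recent) >= min_hits)
    return flags
```

With these defaults, a single reading above the threshold never produces a flag; the signal has to stay high for most of the window first. A production system would more likely check the excursion against related process signals, which this sketch does not attempt.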

Tuttle said Taber’s hybridized approach, combined with its personnel’s experience, requires the site only to retrieve some operating data (1 day), answer some questions about the different system processes it wants to focus on (2 to 3 hours), and be available to answer some follow-up questions. Taber builds out the PdM program within its platform, then there’s a validation period where the system provides notifications and insight while the site continues its existing program. The site can monitor and compare the findings of both approaches for as long as it takes to be satisfied that it’s seeing favorable results, and then the switchover can be finalized.

“We also very often work on an incremental scale, meaning we start with a small portion of the process or a few pieces of equipment. Building the PdM program out on this reduced scale only takes a few weeks total to be functional, then we move on to the next piece. Typically, the reduced scale feels more manageable to the site, and as these perform well, trust is developed in the system and the remaining steps move even faster,” Tuttle said.

Consistency Is Essential

At the heart of any successful PdM program lies a fundamental truth: the quality of your insights can never exceed the quality of your input data. Even the most sophisticated AI algorithms are limited by the data they process. This is why establishing rigorous data quality standards and validation processes isn’t just a technical detail; it’s the foundation upon which all reliable PdM capabilities are built.

“As far as data quality, the key is that the data is consistently represented. Even if it’s noisy data, if it’s a consistent amount of noise, and it reflects and follows the process reliably, it can be integrated within the system,” said Tuttle.

“Similar to our approaches in automation and controls, we utilize a hybridized approach to system development, combining real-world process knowledge and systems experience with machine learning and AI, dramatically reducing the bulk data needs upfront. Doing this, with just a month or two of process data representing a ‘healthy’ component, we can begin reliably informing predictive and condition-based maintenance programs,” added Tuttle.
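To make the idea of a “healthy” baseline concrete, the following Python sketch fits a plain statistical band to readings from a known-healthy period and scores new readings against it. It assumes a single signal and a simple mean-and-standard-deviation model, which is far cruder than the hybridized approach Tuttle describes; the function names are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def fit_healthy_baseline(healthy: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of known-healthy readings."""
    spread = stdev(healthy)
    if spread == 0:
        raise ValueError("healthy readings must not all be identical")
    return mean(healthy), spread


def deviation_scores(readings: Sequence[float],
                     baseline: Tuple[float, float]) -> List[float]:
    """Express each reading as a number of healthy standard deviations
    away from the healthy mean."""
    center, spread = baseline
    return [(reading - center) / spread for reading in readings]
```

Because the band is sized from the healthy data itself, a consistently noisy signal simply yields a wider band, which matches Tuttle’s point that consistent noise can be tolerated.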

Implementations Vary

How a system is deployed often changes based on the scale of installation. Sites that want only a local deployment at a specific plant site inside a firewall can avoid some of the cybersecurity concerns that others face when wanting corporate-wide visibility.

For standalone plant sites, the system is considered a “local, on-prem installation.” Installed in this arrangement, the system retrieves real-time process data from the plant’s distributed control system (DCS) using industry-standard communication protocols: either OPC (Open Platform Communications), which enables secure data exchange between industrial equipment from different manufacturers, or Modbus, one of the oldest and most widely used industrial protocols for connecting electronic devices. The system can then communicate notifications back to the DCS, displaying them either on a new monitoring graphic or in the form of alarms.
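As a small illustration of what reading process data over Modbus can involve: Modbus registers are 16 bits wide, so a 32-bit floating-point measurement is commonly spread across two registers. The Python sketch below shows only the decoding step, with no network communication. The function name is hypothetical, and the high-word-first order is an assumption, since word order varies by device.

```python
import struct


def registers_to_float(high_word: int, low_word: int) -> float:
    """Combine two 16-bit register values into one IEEE 754 float32.

    Assumes the high-order word comes first; some devices swap the
    words, in which case the arguments should be passed in reverse.
    """
    raw = struct.pack(">HH", high_word, low_word)  # two unsigned 16-bit words
    return struct.unpack(">f", raw)[0]             # reinterpret as float32
```

For example, `registers_to_float(0x42C8, 0x0000)` returns `100.0`.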

“Here the only absolutely required site changes are to support data communication setup,” explained Tuttle. “It’s great to have additional sensors and greater resolution, but we can commonly do quite a bit with existing signals. We also commonly build our own HMI [human-machine interface] available only within the same network level for more in-depth information display.”

For corporate-wide visibility, Taber prefers to avoid the direct DCS link and instead communicates with a historian system such as PI or something similar. “This way the established process data pathway for historical system information retrieval is used and no other pathway is needed that involves that critical system,” said Tuttle. “At this level, more information access across the organization is obviously supported, and a suite of HMIs and displays are generated for various levels of the organization to interact with. Sometimes this requires a few additional data points be added to the existing DCS-to-historian path, but that’s generally the extent of changes required.”

Tracking Performance

Quantifying the value of a PdM program presents a paradox: its success is measured by the absence of events, specifically equipment failures that never happened. While traditional maintenance metrics can count repairs made or man-hours worked, PdM’s true value lies in invisible victories: the bearing that didn’t fail during peak demand, the transformer that didn’t overheat during summer, or the turbine that didn’t require emergency maintenance (Figure 2). This makes building a business case both essential and difficult, but not impossible. Key performance indicators (KPIs) offer a good place to start.

2. If a PdM program can prevent a turbine from being taken offline for repairs during peak season, it can literally save tens, if not hundreds, of thousands of dollars for a power company. Yet, quantifying events that didn’t happen is difficult. Source: Envato

“The most important KPIs for our deployments are event identification and confirmed events,” explained Tuttle. “If or when an event occurs, did the PdM program identify it? And for each identified event, did the subsequent or recommended maintenance effort confirm it was accurately identified? The goal is, of course, that all events are accurately identified.”
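The two KPIs Tuttle describes correspond to what statisticians commonly call recall (the share of actual events that were flagged) and precision (the share of flagged events that were confirmed). The Python sketch below computes both from sets of event identifiers; the function name, the dictionary keys, and the use of string IDs are all hypothetical choices for illustration.

```python
from typing import Dict, Set


def pdm_kpis(actual_events: Set[str], flagged_events: Set[str]) -> Dict[str, float]:
    """Compute two rates from sets of event IDs.

    identification_rate: share of actual events that the program flagged.
    confirmation_rate:   share of flagged events that maintenance confirmed.
    An empty denominator is treated as a rate of 1.0 (an assumption).
    """
    confirmed = actual_events & flagged_events
    identification = len(confirmed) / len(actual_events) if actual_events else 1.0
    confirmation = len(confirmed) / len(flagged_events) if flagged_events else 1.0
    return {"identification_rate": identification,
            "confirmation_rate": confirmation}
```

The stated goal that all events are accurately identified corresponds to both rates equaling 1.0.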

In the end, this is really just confirmation that the system is reliable and effective. From there, when all the supporting information is provided, tracking cost and manpower savings is what provides users the confirmation that they’ve made a good choice in deploying the system. However, Tuttle agreed that it’s always difficult to characterize “what didn’t happen,” so this can take some time to accurately develop.

“If ever a customer receives a recommendation or an event is identified, but the customer doesn’t act on it for whatever reason and eventually a failure takes place, the potential cost savings had the event been acted upon is much more readily and precisely determined, and this is tracked,” Tuttle said. “Maintenance costs for an organization can be reduced 20% to 30%, basically shaving off the big maintenance costs due to failure, and stretching the preventative maintenance windows to lower the average. Equipment reliability increases, and unplanned downtime can be dramatically reduced, as much as 75%,” he added.

Taking the analysis a step further involves estimating a return on investment (ROI). “Many factors go into ROI on these PdM programs, things like scale of deployment, nature and age of the process, current maintenance program, and others. When the system is deployed at a site as the first real effort to establishing a good maintenance program, oftentimes this is a place that’s been experiencing several supporting equipment failures in a year, and the ROI lands in the neighborhood of one year for a large site, maybe two years for a smaller site simply due to scale,” Tuttle supposed. “However, if that site is 30- or 40-plus years old, we’ve seen instances that several long-developing problems are immediately identified, and the system has effectively paid for itself out of the gate in identifying these and avoiding a looming major failure.”

For a site that is very active with a PdM program, the benefits come from reducing disposables cost and labor time (Figure 3). “This takes longer to add up and may be several years,” Tuttle presumed. “That said, if that same site is preparing for an overhaul or major outage and would typically spend a great deal on preventative maintenance, again, our system can provide confidence in forgoing some of the more costly efforts that aren’t necessary, and again, this can result in rapid ROI.”

3. Part of the value of a successful PdM program comes from eliminating unnecessary time-based inspections, which frees up workers’ time to do those jobs that are truly important. Source: Envato
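The one- and two-year ROI figures Tuttle cites are payback periods. As a minimal sketch, assuming a simple undiscounted payback calculation (deployment cost divided by yearly savings), the arithmetic looks like this in Python. The function name is hypothetical, and any inputs would have to come from a site’s own records.

```python
def simple_payback_years(deployment_cost: float, annual_savings: float) -> float:
    """Return the number of years for cumulative savings to equal the cost.

    Ignores discounting, ramp-up time, and ongoing support costs.
    """
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return deployment_cost / annual_savings
```

With purely hypothetical inputs, a deployment costing 200,000 that avoids 100,000 a year in failure-related maintenance pays back in `simple_payback_years(200_000.0, 100_000.0)`, or 2.0 years. A single avoided major failure in the first year would shorten that considerably, which is the out-of-the-gate case Tuttle describes for older sites.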

Real-World Losses

To get an idea of how much money can potentially be lost through inaction, Tuttle gave an example without mentioning names. “The closest example is from a site where we were consistently indicating air heater performance issues,” he said. “Unfortunately, because the site was nearing end-of-life, for several years no maintenance was performed to address this, being considered by some levels of the organization to be an unwarranted cost. The unit was forced to derate at full load by an ever-increasing amount, at its worst, by more than 5% consistently.”

Ultimately, conditions changed and the maintenance was performed. “The air heater’s condition was atrocious, just as the system had indicated for those several years,” explained Tuttle. “Simply in direct lost generation, millions of dollars went unrealized. When this is coupled with greater fan power consumption, reduced heat transfer performance, and other efficiency and process effects, it is very likely that not performing this maintenance cost tens of millions of dollars.”
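To see how a derate of this size adds up, direct lost generation can be estimated as capacity times derate fraction times hours at full load times power price. The Python sketch below encodes that arithmetic. The function name is hypothetical, and the example inputs in the note that follows are illustrative assumptions, not figures from the site Tuttle describes.

```python
def lost_generation_revenue(capacity_mw: float, derate_fraction: float,
                            full_load_hours: float, price_per_mwh: float) -> float:
    """Estimate revenue lost to a derate during hours the unit ran at full load.

    Covers direct lost generation only; extra fan power and heat-rate
    penalties are not included.
    """
    lost_mwh = capacity_mw * derate_fraction * full_load_hours
    return lost_mwh * price_per_mwh
```

For a hypothetical 500-MW unit derated 5% for 1,000 full-load hours at $40/MWh, the estimate is 25,000 MWh of lost output, or $1 million. Sustained over several years, the total reaches the millions Tuttle cites for direct lost generation.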

Innovation Continues

Tuttle said one of the best things about Taber’s process and deployment platform, the Griffin AI Toolkit, is its inherent open system design. “This tool and our approach are, by design, fluid and adaptable to evolving industry demands and technology availability. We make a point to almost immediately integrate technological advancements as soon as they’re demonstrated as more reliable than simple theory, and to be familiar with and comfortable as a team with the industry and changing demands. So be assured that as advancements in approaches are available, they’ll be a component within our program, and that as the industry evolves, we’ll be there to support it,” he said.

Getting started with a usable PdM program is not an intensive effort. Even if a facility simply wants to “test the waters” and consider a single piece of equipment, that’s a good first step to evaluate the approach and performance of such a system and gain the experience that is often necessary to proceed further with system and scope expansions. “We all know how much maintenance costs, and since maintenance is a sure thing, any steps that can be taken to reduce those costs by simply adjusting procedures—effectively, no hardware or major system changes—are beneficial across the board,” Tuttle argued.

“At the end of the day, for us it’s about supporting our customers through fully customized systems. Whether this is through a simple single component system, or an enterprise-wide platform and program that integrates real-time data signals, personnel logging interfaces, maintenance and workorder system communication, and automated reporting and tuning. At whatever level a solution is needed, we have the capability and tools to turn it into reality,” he concluded.

—Aaron Larson is POWER’s executive editor.