Efficient liquid cooling supported by reliable instrumentation is essential for data centers to handle increased capacity demands in the age of artificial intelligence (AI) and other heavy compute applications.

The landscape of data processing has undergone a seismic shift recently, with today’s artificial intelligence (AI) and high-performance computing (HPC) applications requiring computational power at a scale that was once unimaginable. For example, modern graphics processing unit (GPU) chips require six to 10 times more power than their predecessors from just a decade ago. This surge in power consumption translates directly to additional heat generation, pushing traditional data center cooling methods to their limits.

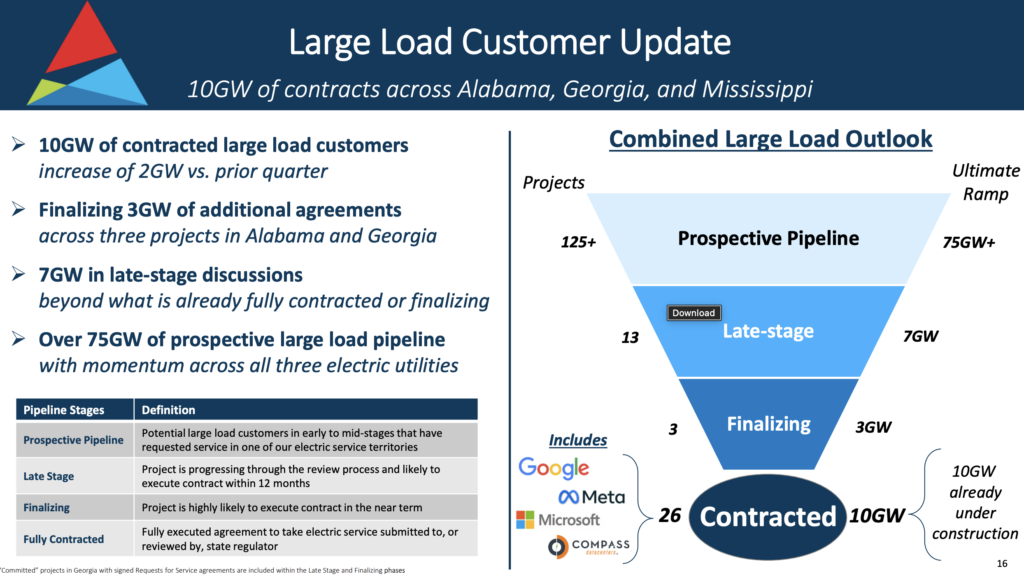

In response, the industry is increasingly implementing liquid cooling, a technology once confined to niche supercomputing, but now essential for mainstream hyperscale operations. Hyperscalers—the large-scale cloud service providers that form the backbone of the digital economy—are at the forefront of this transition. With massive, globally distributed data centers, typical hyperscaler facilities require between 100 MW and 300 MW of power and consume 1.5 million to 3 million gallons of water for cooling daily.

While air cooling was the default option in the recent past, modern liquid cooling is more efficient, providing a scalable solution to manage the intense heat loads of today’s enterprise-scale information technology (IT) infrastructure. However, liquid cooling systems are markedly more complex than traditional methods, requiring an array of reliable instruments, digital controls, and timely data to maintain optimal operation. This article describes the transition from air to liquid cooling, along with critical instrumentation components and design considerations to support successful scaling.

Traditional Air Cooling Yields to Efficient Liquid Cooling Methods

From the mainframe computing days to recent times, air cooling was the standard for dissipating heat in data centers, and it is still used broadly today. The approach is straightforward, consisting of cool air that is moved across heat-generating components, such as central processing units (CPUs) and GPUs, to transfer thermal energy away from the hardware.

Air is readily available, and the primary operational cost of air cooling is the electricity required to power large fans and air conditioning units. However, as computational density in data centers has skyrocketed—driven by AI accelerators and high-performance GPUs—traditional air-based cooling systems have become insufficient. These advanced chips generate far more heat in a compact footprint, surpassing the thermal limits of air. As a result, liquid cooling is increasingly necessary to efficiently dissipate heat and maintain performance capability.

These factors influenced the development of liquid cooling in data centers as a more efficient method for heat transfer. This approach uses a liquid medium, typically purified or treated water, which has roughly four times the specific heat capacity of air per unit mass and, because it is far denser, carries vastly more heat per unit volume. Heat generated by high-density computing components is absorbed into the liquid and circulated through a loop within the white space of the data center. The heat carried by this warmed liquid is then transferred to a primary chiller loop that serves as the facility-level cooling backbone (Figure 1).

The primary loop includes large industrial chillers and heat exchangers that transfer heat to the external environment, enabling a continuous cycle of thermal regulation. This dual-loop design isolates sensitive IT environments from facility-level fluctuations while maximizing thermal efficiency and operational control.

Liquid cooling is fundamentally more efficient than its air counterpart at the point of heat transfer, enabling management of higher heat loads in a smaller footprint. While liquid cooling introduces the complexity of pumping and fluid management, its superior thermal transfer capabilities result in significantly improved overall energy efficiency and the ability to support high-density server racks that are powering modern AI workloads.
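To make the heat transfer advantage concrete, the following minimal Python sketch applies the standard heat balance Q = ṁ · c_p · ΔT to estimate how much water or air must flow to carry away the same heat load. The 100 kW load and 10 K temperature rise are hypothetical values chosen for illustration, and the fluid properties are approximate textbook figures near room temperature, not data from any specific facility.

```python
# Approximate fluid properties near room temperature (textbook values, assumed).
CP_WATER_J_PER_KG_K = 4186.0
CP_AIR_J_PER_KG_K = 1005.0
RHO_WATER_KG_PER_M3 = 998.0
RHO_AIR_KG_PER_M3 = 1.2


def required_volume_flow_m3_s(heat_load_w, delta_t_k, cp, rho):
    """Volumetric flow needed to absorb heat_load_w with a delta_t_k rise.

    Uses the heat balance Q = m_dot * cp * delta_T, then divides the
    mass flow by density to get volumetric flow.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_load_w / (cp * delta_t_k)
    return mass_flow_kg_s / rho


# Hypothetical example: 100 kW of heat, 10 K rise in the cooling medium.
HEAT_LOAD_W = 100_000.0
DELTA_T_K = 10.0

water_flow = required_volume_flow_m3_s(
    HEAT_LOAD_W, DELTA_T_K, CP_WATER_J_PER_KG_K, RHO_WATER_KG_PER_M3
)
air_flow = required_volume_flow_m3_s(
    HEAT_LOAD_W, DELTA_T_K, CP_AIR_J_PER_KG_K, RHO_AIR_KG_PER_M3
)

print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Air needs about {air_flow / water_flow:.0f}x the volume flow of water")
```

Under these assumptions, water needs only a few liters per second while air needs several cubic meters per second, which is why liquid loops can serve much denser racks in a smaller footprint.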

Scaling Liquid Cooling to Meet Growing Compute Demands

As chipmakers continue to pack more transistors onto smaller microchips, the heat generated per unit area, known as heat flux density, rises dramatically. This trend is driving the development and large-scale deployment of increasingly efficient liquid cooling technologies to keep pace.

Standard liquid cooling setups employ a cold plate that contacts the integrated heat spreader (IHS), which is a protective lid over the silicon die of a CPU or GPU. The heat path traverses multiple layers: from the silicon die, through a thermal interface material (TIM), to the IHS, through a second TIM layer, and finally to the cold plate, where it is absorbed by the liquid coolant. While effective, each layer adds thermal resistance, slightly impeding heat transfer.

In response, some newer designs use direct-to-chip (DTC) cooling to eliminate some of these intermediary layers, further improving cooling efficiency. DTC cooling systems use a specially designed water block that makes direct contact with the silicon die itself on lidless chips without an IHS. This removes a layer of thermal resistance, enabling faster and more effective heat dissipation.

This approach is critical for managing the extreme thermal output of the latest AI accelerators. By dissipating heat directly from the chip, data centers can support higher rack densities, maximize energy efficiency, and better handle HPC demands.
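The layered heat path described above can be approximated as thermal resistances in series, where die temperature equals coolant temperature plus chip power multiplied by the sum of the layer resistances. The sketch below compares a lidded stack with a lidless one. All resistance values, the 1,000 W chip power, and the 30°C coolant temperature are hypothetical placeholders, and the lidless stack assumes that removing the IHS also removes the second TIM layer.

```python
def die_temperature_c(coolant_c, power_w, resistances_k_per_w):
    """Estimate die temperature for heat flowing through layers in series.

    Series thermal resistances add, so T_die = T_coolant + P * sum(R).
    """
    return coolant_c + power_w * sum(resistances_k_per_w.values())


# Hypothetical per-layer thermal resistances in K/W (illustrative only).
lidded_stack = {
    "TIM1": 0.010,        # between die and IHS
    "IHS": 0.005,         # integrated heat spreader
    "TIM2": 0.010,        # between IHS and cold plate
    "cold_plate": 0.020,  # cold plate to coolant
}

# Assumed lidless stack: the IHS and the second TIM layer are gone.
lidless_stack = {
    "TIM1": 0.010,
    "cold_plate": 0.020,
}

POWER_W = 1000.0    # hypothetical chip power
COOLANT_C = 30.0    # hypothetical coolant temperature

t_lidded = die_temperature_c(COOLANT_C, POWER_W, lidded_stack)
t_lidless = die_temperature_c(COOLANT_C, POWER_W, lidless_stack)

print(f"Lidded die temperature:  {t_lidded:.1f} C")
print(f"Lidless die temperature: {t_lidless:.1f} C")
print(f"Reduction from removing layers: {t_lidded - t_lidless:.1f} K")
```

Because the temperature rise scales with power, even a small resistance removed from the stack matters more as chip power climbs.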

Reliable Process Instrumentation Underpins Successful Liquid Cooling Growth

As noted earlier, the shift to large-scale liquid cooling introduces new operational complexities that require high precision and reliability. The success of these systems depends on robust process instrumentation—the collection of sensors and measurement devices—supplying information to digital systems that monitor and control cooling loops.

Accurate instrumentation is directly linked to energy efficiency. For example, high-accuracy temperature sensors allow data center operators to run cooling systems closer to their optimal thermal setpoints (Figure 2). A smaller error band means less over-cooling is required to create a safety buffer, which reduces pump and chiller workloads and, consequently, lowers energy consumption. Water is a more expensive utility than air, so optimizing efficiency is even more critical for liquid cooling than for air cooling systems.
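As a simple illustration of the error band argument, the sketch below computes the warmest setpoint an operator could choose while still guaranteeing that the true temperature stays under a limit in the worst case. The 45°C limit and the two sensor uncertainties are hypothetical, and real setpoint selection involves additional margins that this sketch ignores.

```python
def max_safe_setpoint_c(limit_c, sensor_uncertainty_c):
    """Warmest setpoint that keeps the true temperature at or below limit_c.

    If the sensor may read low by up to sensor_uncertainty_c, the setpoint
    must sit that far below the limit to cover the worst case.
    """
    if sensor_uncertainty_c < 0:
        raise ValueError("sensor_uncertainty_c must be non-negative")
    return limit_c - sensor_uncertainty_c


LIMIT_C = 45.0  # hypothetical maximum allowable coolant temperature

coarse_setpoint = max_safe_setpoint_c(LIMIT_C, 1.0)  # assumed +/-1.0 K sensor
fine_setpoint = max_safe_setpoint_c(LIMIT_C, 0.1)    # assumed +/-0.1 K sensor

print(f"Setpoint with +/-1.0 K sensor: {coarse_setpoint:.1f} C")
print(f"Setpoint with +/-0.1 K sensor: {fine_setpoint:.1f} C")
print(f"Over-cooling avoided: {fine_setpoint - coarse_setpoint:.1f} K")
```

The difference between the two setpoints is cooling the system no longer has to deliver purely to cover measurement uncertainty.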

Flow measurement is equally critical for reliable operation because the rate of heat removal depends directly on coolant flow. Water efficiency at the facility level is often tracked using the water usage effectiveness (WUE) key performance indicator (KPI), which relates the water a site consumes to the energy used by its IT equipment. Today’s leading electromagnetic flowmeters for data center cooling processes provide precise measurement without requiring straight pipe runs, alleviating a past pain point and providing installation flexibility in the dense, space-constrained environments of modern data centers (Figure 3).
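WUE is commonly expressed as liters of water consumed per kilowatt-hour of IT equipment energy. The sketch below shows the arithmetic using the low end of the hyperscale figures cited earlier in this article (1.5 million gallons per day and 100 MW). Treating the full 100 MW as IT load running constantly for 24 hours is a simplifying assumption made only for illustration, since actual IT energy is lower than total facility power, and the metric is normally calculated over a full year.

```python
LITERS_PER_US_GALLON = 3.785411784


def wue_l_per_kwh(water_liters, it_energy_kwh):
    """Water usage effectiveness: liters of water per kWh of IT energy."""
    if it_energy_kwh <= 0:
        raise ValueError("it_energy_kwh must be positive")
    return water_liters / it_energy_kwh


# Illustrative inputs (assumptions, see the text above).
daily_water_gal = 1_500_000   # low end of the daily cooling water range
assumed_it_load_kw = 100_000  # 100 MW, assumed to be entirely IT load

daily_water_l = daily_water_gal * LITERS_PER_US_GALLON
daily_it_energy_kwh = assumed_it_load_kw * 24  # constant load for 24 hours

wue = wue_l_per_kwh(daily_water_l, daily_it_energy_kwh)
print(f"Illustrative WUE: {wue:.2f} L/kWh")
```

Accurate flow and energy metering determine how trustworthy both inputs to this ratio are, which is one reason flow instrumentation matters for reporting as well as control.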

Furthermore, liquid analysis sensors are used to monitor water properties like turbidity, conductivity, and pH, which serve as proxies to verify effective water polishing and treatment operations (Figure 4). Operators use this information to ensure coolant remains free of contaminants that could cause corrosion or fouling.

It is best practice to install instrumentation provided with integrated diagnostics to further enhance reliability in data centers. When present, these smart components can proactively detect developing issues in a cooling system—such as pump cavitation or blockages—before they lead to failures that could cause catastrophic hardware damage. Enhanced diagnostics also facilitate differentiation between true and falsely generated process alarms by assessing instrument health from the real-time diagnostic data.

Hyperscalers require not only reliable measurement components, but also resilient supply chains, to keep up with rapid facility construction and increasing compute loads. For instance, it is not uncommon in this fast-paced industry for a hyperscaler to suddenly require thousands of turbidity sensors within a few months’ time to support a new deployment. Leading process instrumentation suppliers must be able to meet both reliability and volume requirements to effectively support data center operators. In short, hyperscalers benefit from manufacturing partners who are already hyper-scaled.

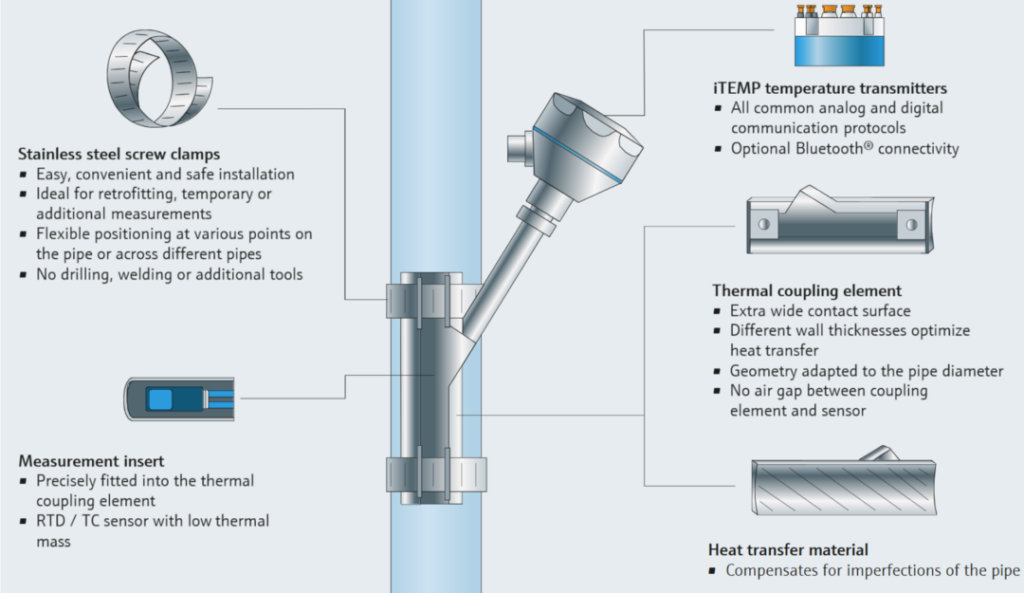

Use Case: A Non-Invasive Approach to Temperature Measurement

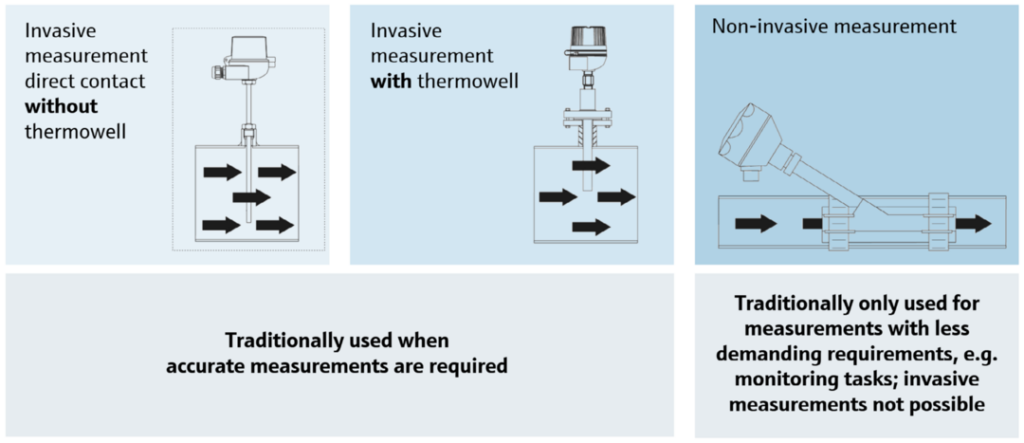

As data centers scale, so does the need for instrumentation that is simple to deploy, supporting increased production demands without introducing unnecessary risks. Many liquid cooling systems still use traditional thermometers installed in thermowells that penetrate process pipes. While effective, thermowells create flow disturbances that increase pump energy consumption, and they introduce potential leak points that risk contaminating high-purity liquid coolant. The Endress+Hauser TM611 eliminates these concerns with a mechanical clamp-on design that provides a direct, thermally conductive connection to the pipe. The result is accuracy and response time comparable to traditional invasive thermometers, without the risks associated with pipe penetration (Figure 5).

This removes the need to index insertion depths or perform pipe intrusion resonance calculations, accelerating front-end design. Because this method uses material science and mechanical design to measure heat directly in the line, it is much more accurate than conventional non-invasive temperature instruments, whose measurements are often degraded by ambient surroundings (Figure 6).

This non-invasive technology is ideal for data center cooling loops where cleanliness and installation simplicity are critical. The absence of a thermowell eliminates the need for drilling and welding, and it avoids the additional energy-wasting pressure drop that pumps would otherwise have to overcome. For hyperscalers deploying and upgrading systems at an aggressive pace, these installations offer a simple mechanical design that is reliable, low-maintenance, and efficient, helping ensure uptime and performance in high-stakes deployments.

Growth at the Crossroads of Instrument Reliability and Supply Chain Resiliency

The relentless advance of AI and other compute-heavy applications has dramatically reshaped the power and cooling requirements of modern data centers. Liquid cooling is no longer a niche trend, but a necessary evolution from traditional air cooling to manage the escalating heat densities of high-performance hardware. However, deploying liquid cooling at the hyperscale level is a complex undertaking that is entirely dependent on a foundation of precise and reliable process instrumentation.

Leading temperature, flow, and liquid analysis instruments with integrated diagnostics are key enablers for simplifying design and increasing efficiency and resiliency in data center cooling solutions. Advanced instrumentation empowers operators to optimize performance, conserve energy, and protect multi-billion-dollar infrastructure investments. Because the demand for AI shows no sign of slowing, it is imperative for data center operators to partner with instrument suppliers who can provide high-quality and innovative products at the same scale, in addition to the supply chain resilience that enables adjustment at a moment’s notice. These layers of stability are critical for constructing next-generation data centers to meet HPC demands.

—Liza Kelso is a national business development manager at Endress+Hauser, and Cory Marcon is marketing manager for the Power and Energy Industry with Endress+Hauser USA.