The story of artificial intelligence (AI) is often told in the language of speed and efficiency. Every quarter brings claims of models that run faster and cost less. Yet behind the glossy benchmarks lies a more sobering truth: the appetite for AI is growing so quickly that even the most impressive efficiency gains cannot keep up. What looks lean at the micro level is swelling into a massive new load on our grids, threatening to outpace clean energy progress.

COMMENTARY

The global build-out of AI data centers is accelerating so fast that we are witnessing a paradox in real time: an efficiency revolution that risks driving overall consumption beyond sustainable limits. The temptation is to assume that making AI “greener per transaction” solves the sustainability dilemma. But efficiency does not equal sufficiency. If the number of queries increases ten- or one-hundred-fold (or more!), efficiency improvements may only slow the growth curve, not bend it downward.
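The arithmetic behind this point can be sketched in a few lines. The figures below are illustrative assumptions, not measurements, and the model is deliberately simple: total energy is treated as query volume multiplied by energy per query.

```python
def total_energy_wh(queries: float, wh_per_query: float) -> float:
    """Total energy as query volume times per-query energy (a simplifying assumption)."""
    return queries * wh_per_query

# Hypothetical baseline: 1 billion queries at 1.0 Wh each.
baseline = total_energy_wh(1e9, 1.0)

# Hypothetical future: per-query energy falls fivefold, query volume rises hundredfold.
future = total_energy_wh(1e9 * 100, 1.0 / 5)

# Ratio of future to baseline consumption.
growth_factor = future / baseline
print(f"Total energy changes by a factor of {growth_factor:.0f}")
```

Under these assumed numbers, a fivefold efficiency gain set against hundredfold demand growth still leaves total consumption roughly twenty times higher.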

Consider that a single, five-acre data center optimized for AI can consume up to 50 MW, a tenfold jump from traditional facilities. And it’s not just one facility. There are hundreds going up around the world. OpenAI, Oracle, and SoftBank recently announced five new U.S. AI data center sites under the Stargate platform, expanding planned capacity to nearly 7 GW and $400 billion in investment, and putting the initiative on track to meet its full $500 billion, 10-GW commitment by the end of 2025. Put that together and the numbers are staggering. By 2026, global data center electricity use is projected to exceed 1,000 terawatt-hours annually, more than some G20 nations consume in total. Efficiency gains are drowned out by absolute demand growth.

A Grid at the Breaking Point

Some projections suggest that, within a few years, AI alone could account for a sizeable share of national electricity use, exceeding 10% of total demand in certain regions. If nothing changes, that growth could throw off clean energy commitment timelines, slow progress on industrial decarbonization, and even push utilities to lean on older fossil plants. Nuclear power is being reconsidered as part of the solution, but meeting demand will also require renewables, natural gas, and battery storage.

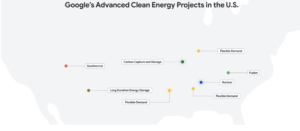

The implication is clear: efficiency is necessary but insufficient. To prevent AI from overwhelming the grid, companies must embrace demand management strategies that go beyond the server rack. That means siting workloads in regions with abundant renewable supply, shifting computing tasks to off-peak hours, and investing directly in clean power procurement to match digital expansion with green generation.
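As a rough illustration of what siting and time-shifting could look like in practice, here is a minimal sketch of a carbon-aware placement rule. The region names, hours, and carbon-intensity values are hypothetical, and a real scheduler would also have to weigh latency, cost, and data-residency constraints that are ignored here.

```python
from typing import NamedTuple

class Slot(NamedTuple):
    region: str
    hour: int                # hour of day, 0-23
    carbon_g_per_kwh: float  # assumed forecast of grid carbon intensity

def pick_greenest_slot(slots, allowed_hours=range(24)):
    """Return the slot with the lowest forecast carbon intensity among the allowed hours."""
    candidates = [s for s in slots if s.hour in allowed_hours]
    if not candidates:
        raise ValueError("no slot falls within the allowed hours")
    return min(candidates, key=lambda s: s.carbon_g_per_kwh)

# Hypothetical forecast for two regions at two times of day.
forecast = [
    Slot("region-a", 14, 420.0),
    Slot("region-a", 2, 310.0),
    Slot("region-b", 14, 90.0),
    Slot("region-b", 2, 120.0),
]

# Flexible batch job: any region, any hour.
best = pick_greenest_slot(forecast)

# Job restricted to an assumed off-peak window (midnight to 6 a.m.).
off_peak = pick_greenest_slot(forecast, allowed_hours=range(0, 6))
```

The same rule covers both levers named above: leaving the hours unrestricted lets the job move in space and time, while passing an off-peak window limits it to shifting within that window.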

Balancing Pressures

For companies diving into AI, the push and pull comes from every direction: the economic drive to innovate quickly, the environmental responsibility to keep emissions and water use in check, and the financial hurdle that can leave smaller players shut out altogether. Agentic AI delivers enormous capability, but the tradeoff is steep when every inference uses energy.

Frugal AI offers a way through these competing demands by helping organizations, large and small, use AI more efficiently and responsibly. By prioritizing high-impact use cases where AI is the best tool, it keeps energy use and costs in check while also ensuring sustainability is not sacrificed in the race for digital advantage.

What “Frugal AI” Looks Like

Frugal AI is not about abandoning innovation. It is about rethinking what progress means. Smaller, right-sized models often perform tasks just as effectively as their larger peers while using a fraction of the computing power. Traditional software, statistics, or simple regression may solve a problem that does not need a generative model at all. Asking “do we really need an agent here?” should be as routine as asking “is there a business case?”

Three rules can help organizations embed frugality into their AI strategies:

- Right-size every model: Not every task requires the latest frontier model. Choosing the smallest system that meets accuracy thresholds dramatically lowers carbon impact. Token efficiency and optimization of prompts can further trim energy waste, especially at scale.

- Deploy workloads where carbon intensity is lower: Location matters. Running models in data centers powered by wind, hydro, or solar can cut lifecycle emissions by orders of magnitude. By aligning AI expansion with regions rich in renewable capacity, enterprises help balance grid demand with clean supply.

- Match the method to the mission: Not every problem needs a large model, or even machine learning. Choosing the right approach, whether traditional software, retrieval-based systems, generative AI, or agentic AI, can reduce unnecessary compute and complexity. Retrieval-augmented generation (RAG), for instance, taps live data instead of retraining models, cutting energy use while boosting accuracy.
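The first and third rules can be combined into a simple selection step. The sketch below is one possible way to express it, not a prescribed method: the candidate names, accuracy scores, and per-request energy estimates are all hypothetical, and in practice they would come from an organization's own evaluation and metering.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float        # assumed score on your own evaluation set
    wh_per_request: float  # assumed energy estimate per request

def right_size(candidates, min_accuracy):
    """Pick the lowest-energy candidate that meets the accuracy threshold, or None."""
    eligible = [c for c in candidates if c.accuracy >= min_accuracy]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c.wh_per_request)

# Hypothetical options, from traditional software up to a frontier model.
options = [
    Candidate("rules-engine", 0.81, 0.001),
    Candidate("small-model", 0.92, 0.05),
    Candidate("frontier-model", 0.95, 1.5),
]

choice = right_size(options, min_accuracy=0.90)
print(choice.name if choice else "no candidate meets the threshold")
```

With these assumed figures, the small model is selected: it clears the threshold at a fraction of the frontier model's estimated energy. Lowering the threshold to 0.80 would select the rules engine instead, which is the "do we really need an agent here?" question in code form.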

The gains are significant. By designing AI to be frugal, companies shrink the emissions tied to every model, spend less on energy, and get more years out of the systems they build.

An Energy Reckoning

Greater efficiency is only part of the story. On its own, it cannot resolve the conflict between AI’s promise and its growing energy appetite. With demand surging, the responsibility now falls on companies, regulators, and technology leaders to work in concert so that the tools transforming our world don’t end up straining the very infrastructure that supports them.

Frugal AI is not about restraint for its own sake; it is about ambition with responsibility. It is about designing intelligence that is lighter on the grid, easier on the planet, and ultimately more impactful for people.

—Jeff Willert is director of Data Science at Schneider Electric.