When artificial intelligence (AI) manages a power grid, it cannot operate as a black box. A wrong decision will not just frustrate users; it can trigger a blackout. Those stakes are forcing AI to mature faster in energy than in any other sector.

When algorithmic decisions affect high-stakes systems such as grid stability, public safety, or national energy security, transparency is non-negotiable. A decision you can’t explain is a decision you can’t trust.

This imperative has driven what may be the technology’s most important evolutionary stage: explainable AI (XAI) systems that provide clear reasoning for every decision while maintaining exceptional performance. XAI systems offer a way out of the traditional algorithmic “black box” approach.

Grid operators must understand why an AI system recommends shifting power loads, energy traders need visibility into forecasting models, and maintenance teams require clear reasoning behind predictive recommendations. As regulators worldwide implement frameworks like the US NIST AI Risk Management Framework and the EU AI Act, energy companies’ existing experience with strict operational and safety standards positions them well to adapt to AI transparency requirements.

Proven Performance Through Accountability

The results of explainable AI deployment already demonstrate that transparency enhances rather than constrains performance. Explainable weather forecasting could increase wind energy’s economic value by 20% because operators could validate and refine recommendations. That is due in part to the fact that when humans understand AI reasoning, they make better decisions; studies show that explainability improves performance by nearly 10%.

The reliability gains are equally compelling. Explainable AI in predictive maintenance for nuclear power plants reduces expected costs by 15%. McKinsey’s research on hydrocarbon operations confirms that auditable AI unlocks material production gains where opacity creates risk.

Four Pillars of Mature AI

Four foundational principles have emerged from the energy sector’s approach, creating a blueprint for responsible AI deployment across regulated industries.

The first pillar, explainable decision-making, ensures every critical recommendation comes with a clear reasoning trail. Companies are deploying AI for energy operations that can deliver millions in annual maintenance savings while providing visibility into every optimization decision. This transparency enhances trust and enables continuous improvement by allowing human expertise to validate and refine AI recommendations. Yet explainability proves insufficient when data flows through third-party systems, which drives the second pillar: AI sovereignty.

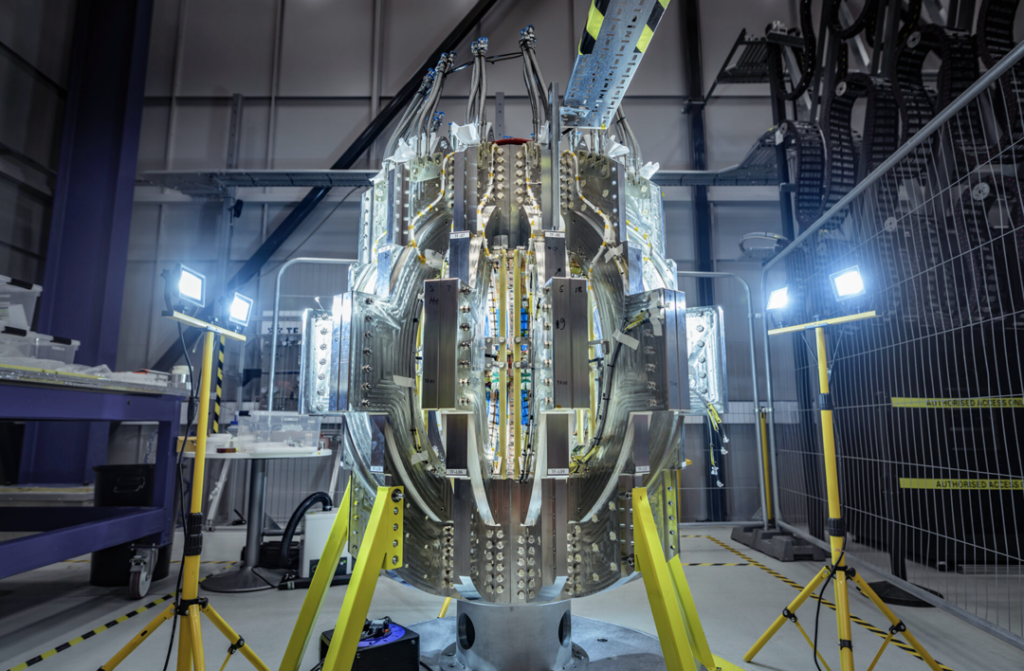

Complete AI sovereignty relies on integrated “chip-to-front end” systems. This means owning and controlling every layer of the technology, from the physical computing hardware up through the software and user interfaces, so that sensitive data never leaves organizational boundaries. Domyn’s own platform enables organizations to truly own their AI systems through infrastructure that supports air-gapped deployment for the most sensitive pieces of energy infrastructure, such as nuclear facilities or the electricity grid.

Sovereignty alone cannot guarantee outcomes. The third pillar, human-AI collaboration, connects domain expertise with AI augmentation, empowering rather than replacing professionals. Domyn’s collaboration with NVIDIA demonstrates this approach in practice. Through specialist AI infrastructure deployment, the platform enhances engineers’ grid optimization and predictive maintenance capabilities, resulting in improved safety and reliability metrics.

The fourth pillar, transparent governance, creates comprehensive audit trails linking every AI decision to measurable outcomes and regulatory requirements. Advanced monitoring systems track all platform interactions, while sophisticated permission management ensures appropriate access controls. This is a competitive advantage in an era where regulatory compliance and public trust determine technological adoption.

These principles now shape AI deployment across critical sectors. Defense organizations are adopting energy-inspired explainable AI standards for critical operations. Healthcare organizations are implementing sovereignty models for sensitive patient data. The rigorous standards developed for energy infrastructure are becoming the gold standard for AI deployment wherever reliability matters most.

The competitive advantages are becoming clear. Organizations following energy’s accountability-first approach achieve superior operational outcomes while building durable market positions. The sector’s emphasis on transparent, sovereign AI systems has the potential to deliver 5-10% reductions in global greenhouse gas emissions while creating technology that scales efficiently and maintains trust.

Setting the Standard for AI’s Future

As AI becomes foundational to global infrastructure, from financial systems to healthcare to defense, the question is no longer whether AI will transform critical sectors, but whether it will do so responsibly. Energy has already answered: transparency enhances performance, sovereignty strengthens resilience, and collaboration delivers superior outcomes.

At ADIPEC 2025 (3-6 November), the world’s largest energy event, this framework will move from sector-specific practice to cross-sector imperative. As policymakers, company leaders and innovators convene, they will face a defining question: will AI transform critical infrastructure responsibly or recklessly?

Energy has proven the answer. Grid stability lessons now inform diagnostic AI in hospitals, algorithmic trading in finance, and autonomous systems in defense. The sector that forced AI to grow up will teach the economy what maturity requires and what failure risks.

—Uljan Sharka is founder and CEO of Domyn, and an ADIPEC executive committee member.