Across the U.S., new AI-driven data centers are causing a significant increase in power demand. Carbon Direct projects that data center capacity in the U.S. will grow from roughly 25 GW in 2024 to 120 GW in 2030, a nearly five-fold increase that could make data centers a double-digit percentage of national electricity demand. In its recent Long-Term Reliability Assessment Report, the North American Electric Reliability Corporation (NERC), which oversees grid reliability in the U.S., flagged the expanding data center load as a major challenge.
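To put the Carbon Direct projection in annual terms, here is a back-of-the-envelope calculation. It uses only the 2024 and 2030 capacity figures quoted above and assumes smooth compound growth between them. That is a simplifying assumption: real build-out will be lumpier.

```python
# Capacity figures from the Carbon Direct projection cited in the text.
capacity_2024_gw = 25.0
capacity_2030_gw = 120.0
years = 2030 - 2024

# Overall multiple between the two years.
growth_factor = capacity_2030_gw / capacity_2024_gw

# Implied compound annual growth rate, assuming smooth year-over-year growth.
cagr = growth_factor ** (1 / years) - 1

print(f"growth factor: {growth_factor:.1f}x")
print(f"implied annual growth: {cagr:.1%}")
```

The ratio works out to 4.8x, consistent with the nearly five-fold increase cited, and the implied compound rate is roughly 30% per year.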

While estimates of the exact amount of power needed to meet this demand vary, one thing is clear: the U.S. power grid will need to add significant generation capacity to keep up. Building more generation and transmission capacity is the obvious solution, but an expensive and time-consuming one. As the race for AI dominance accelerates, a sober approach that balances speed to grid connection with grid reliability is imperative.

Balancing Speed to Grid Connection & Grid Reliability

To ensure power continuity during periods of intense demand, jurisdictions are starting to adopt protections that let grid operators prioritize overall grid operations over serving data center loads. Texas, for example, recently passed SB6, which allows the grid operator to cut data center power during peak or emergency periods if needed to protect the grid. These types of grid protection policies are expected to spread as AI data center power consumption grows.

Power consumption on a grid fluctuates daily and seasonally, with peaks typically occurring on the hottest days of summer and the darkest, coldest days of winter. For the rest of the year, the grid generally has spare generation capacity that could serve new AI data centers. Grid operators limit new data center connections because they cannot reliably serve those loads during a few constrained peak hours each year. A recent study found that if data centers could reduce their power consumption during these peak times (approximately 1% of the year), about 126 GW of slack capacity (unused generation capacity that can be tapped outside peak periods) would be available on the grid now.
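To give a sense of scale for "approximately 1% of the year," the arithmetic below converts that fraction into hours. It takes the article's figure at face value and assumes a non-leap year of 8,760 hours; the function name is illustrative only.

```python
# Hours in a non-leap year: 365 days x 24 hours.
HOURS_PER_YEAR = 365 * 24


def constrained_hours(fraction_of_year: float) -> float:
    """Convert a fraction of the year into a number of hours."""
    return HOURS_PER_YEAR * fraction_of_year


# The text's "approximately 1% of the year" of reduced consumption.
hours = constrained_hours(0.01)
print(f"{hours:.1f} hours per year")
```

That is under 90 hours a year, which is why limiting flexibility to these peak windows could unlock connections for the remaining 99% of hours.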

An emerging concept, flex interconnection, would allow AI data centers to connect to the grid immediately, provided they reduce their grid draw during the few most strained hours. This approach enables faster grid connection without risking overall grid reliability. The twin needs for fast grid connections and sustained grid reliability are driving innovation in data center load flexibility.

Challenges with Conventional Load Flexibility: Demand Response & Self-Generation

Load flexibility, the act of decreasing power draw from the grid, falls into two categories. Demand response is when a large power consumer curtails on-site operations to reduce the power it draws from the grid. Self-generation, also known as behind-the-meter (BTM) generation (generating power on-site), is when a large power consumer keeps its operations at a constant level but turns on on-site generation to serve some or all of its load, which has the net effect of reducing the power it draws from the grid.
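The two categories can be compared with one simple relationship: the power a site draws from the grid equals its load minus its on-site generation. The sketch below uses a hypothetical 100-MW facility asked to cut 25 MW of grid draw. The function name and figures are illustrative, and it assumes the site never exports power back to the grid.

```python
def grid_draw_mw(site_load_mw: float, onsite_generation_mw: float = 0.0) -> float:
    """Net power drawn from the grid, in MW.

    Assumes the site never exports to the grid, so on-site generation
    beyond the site's own load cannot push the draw below zero.
    """
    return max(site_load_mw - onsite_generation_mw, 0.0)


SITE_LOAD_MW = 100.0  # hypothetical facility size
REDUCTION_MW = 25.0   # hypothetical reduction requested by the grid operator

baseline = grid_draw_mw(SITE_LOAD_MW)

# Demand response: the site curtails its own operations.
with_demand_response = grid_draw_mw(SITE_LOAD_MW - REDUCTION_MW)

# Self-generation: operations stay at full load; on-site generation covers part of it.
with_self_generation = grid_draw_mw(SITE_LOAD_MW, onsite_generation_mw=REDUCTION_MW)

print(baseline, with_demand_response, with_self_generation)
```

From the grid's side both approaches look the same (75 MW of draw); the difference is that under self-generation the site keeps running at its full 100 MW load.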

To date, conventional demand response programs have seen limited participation from the data center industry for two reasons.

- The data center industry has historically (pre-AI) required uninterrupted, “24/7” power.

- Data centers often house multiple customers and have contractual and operational constraints that limit their ability to reduce usage at their discretion.

Self-generation, on the other hand, is actively used by the AI data center industry today, typically in the form of backup diesel generators, which ensure around-the-clock power by turning on when grid power fails. The challenge is that companies are pushing these generators in ways that neither the equipment nor the regulations were designed for, causing significant local air pollution. These issues have escalated into conflicts between AI data center operators and local communities.

Data Center Load Flexibility: Computational & Self-Generation Innovations

Grid connection delays and reliability concerns are among the major challenges pushing the AI data center industry to develop the next generation of data center load flexibility. In the summer of 2024, two major initiatives were launched to advance integration between data centers and the power grid. First, the Electric Power Research Institute (EPRI) introduced its DCFlex program, which brings together data centers, utilities, and grid operators to collaboratively design data centers and utility programs that align with grid needs while accelerating interconnection timelines. Second, the Department of Energy’s (DOE) Grid Resilience and Innovation Partnerships (GRIP) program awarded funding to eight data center flexibility projects through its Grid Forward initiative, supporting advancements that enhance grid reliability and flexibility. These initiatives are already starting to show promising results.

Computational Flexibility

Traditional data centers, which support conventional IT services such as web hosting, enterprise applications, and cloud computing, rely primarily on central processing units (CPUs) and tend to have relatively stable, flat power demand. In contrast, AI data centers, which are designed specifically for artificial intelligence and machine learning workloads, rely heavily on graphics processing units (GPUs) and consume significantly more power than traditional data centers. AI data centers also have more variable power consumption because their workloads switch between ‘training’ mode, which is highly power-intensive and prone to significant swings, and ‘inference’ mode, which generally requires less power and presents a flatter load.

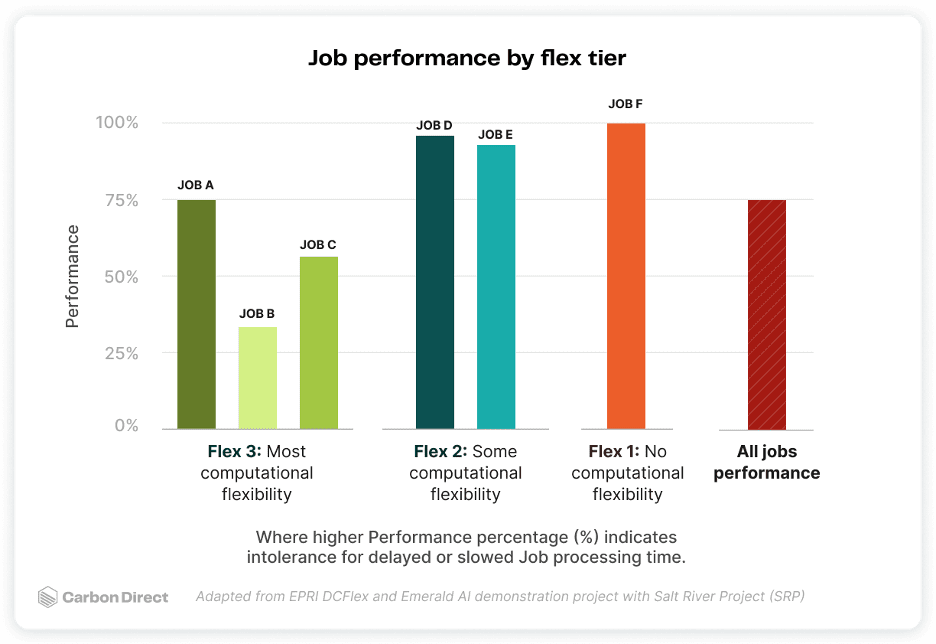

Earlier this year, Emerald AI, an NVIDIA-backed startup, released results from a computing flexibility demonstration project conducted through EPRI’s DCFlex program. This project was a collaboration among a data center operator (Oracle), technology providers (Emerald AI and NVIDIA), and an Arizona utility, the Salt River Project (SRP). The demonstration sorted computing workloads into priority tiers. According to Emerald AI, “Often, a small percentage of jobs are truly non-preemptible, whereas many jobs such as training, batch inference, or fine-tuning have varying priority levels depending on the user.” The tiers were defined as follows:

- Flex 1 — Critical jobs with no ability to slow or delay processing

- Flex 2 — Jobs with some flexibility to delay or adjust processing

- Flex 3 — Jobs with the most flexibility to shift processing times

On May 3, 2025, during a peak load period, the project kept all Flex 1 jobs running while shifting Flex 2 and Flex 3 workloads, reducing the data center’s power consumption by 25% for three grid-constrained peak hours.
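The article does not describe how the project’s software decided which jobs to adjust. As a purely hypothetical illustration of tier-based curtailment, a simple greedy policy might work like this: Flex 1 jobs are never touched, Flex 3 jobs are reduced before Flex 2, and the last job selected is throttled only partially so the site sheds just the target amount. The `Job` class, `plan_curtailment` function, job names, and power figures are all invented for this sketch, and it assumes any flexible job’s draw can be reduced by any amount up to its full draw.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    tier: int        # 1 = critical (never curtailed), 2 = some flexibility, 3 = most flexible
    power_mw: float  # power the job draws at full speed


def plan_curtailment(jobs: list[Job], target_fraction: float) -> tuple[dict[str, float], bool]:
    """Return MW of reduction per job and whether the target reduction was reached."""
    target_mw = sum(j.power_mw for j in jobs) * target_fraction
    # Exclude Flex 1 entirely; take the most flexible tier first,
    # and larger jobs first within a tier so fewer jobs are disturbed.
    candidates = sorted(
        (j for j in jobs if j.tier > 1),
        key=lambda j: (-j.tier, -j.power_mw),
    )
    plan: dict[str, float] = {}
    remaining = target_mw
    for job in candidates:
        if remaining <= 0:
            break
        cut = min(job.power_mw, remaining)  # pause the job fully, or throttle it partly
        plan[job.name] = cut
        remaining -= cut
    return plan, remaining <= 0


jobs = [
    Job("inference-api", 1, 40.0),
    Job("fine-tune-a", 2, 20.0),
    Job("batch-inference", 3, 15.0),
    Job("training-run", 2, 25.0),
]
plan, met = plan_curtailment(jobs, target_fraction=0.25)
print(plan, met)
```

With these made-up numbers, the 25% target (25 MW of a 100-MW total) is met by pausing the 15-MW Flex 3 batch job and throttling the larger Flex 2 training run by 10 MW, while the Flex 1 inference service runs untouched. A production scheduler would also have to weigh job deadlines, checkpointing costs, and how quickly power actually drops when work is slowed.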

Self-Generation Flexibility

In addition to computational flexibility, a key area of innovation in data center load flexibility is self-generation. The industry must move quickly beyond relying on diesel backup generators as primary power sources and instead adopt cleaner, more sustainable on-site power solutions such as renewable generation, conventional battery storage, microgrids, low-emissions generator fuels, and long-duration energy storage technologies.

In 2024, GRIP awarded funding to the Virginia Department of Energy and data center operator Iron Mountain to develop a microgrid, powered by battery storage systems, at Iron Mountain’s Prince William County site. If successful, the project will help eliminate the need to use backup diesel generators as primary power sources.

Protecting the Grid

Verrus, a spinout from Alphabet’s Sidewalk Infrastructure Partners, began testing its data center energy platform at the National Renewable Energy Laboratory’s (NREL) 70-MW data center. The Verrus system can shift 100% of the data center’s power demand from the grid to on-site resources within one minute of receiving a utility request. Beyond this integration of grid supply and self-generation, Verrus is developing technology to optimize power use across circuits, improving power efficiency behind the meter.

The accelerating growth of AI data centers is both a pressing challenge and a catalyst for innovation in the power sector. As grid constraints and connection delays push the limits of current infrastructure, collaborative initiatives like EPRI’s DCFlex and the DOE’s GRIP are demonstrating that data centers need not remain inflexible, always-on loads. Instead, through advanced workload management and cleaner on-site generation, these facilities are beginning to offer adaptable demand that supports rather than strains grid reliability. The industry’s next chapter will depend on how quickly utilities, regulators, and technology providers can work together to scale these advances, positioning data centers as partners in building a resilient and sustainable power grid for the future.

—Patti Smith is Electricity Decarbonization Lead at Carbon Direct.