Risk Management: Using Resilience Engineering to Develop a More Reliable Workforce

All power generating companies and plant operators value reliability, but they may be paying too little attention to a critical variable: people.

One million. That’s the approximate number of people in the sky over the U.S. as you read this article. Remarkably, all will land safely. Aviation achieves some of the highest reliability of any industry. How did that happen?

The first significant step came with the launch of the modern jet airplane. However, the number of accidents leveled out to a still-unacceptable rate, despite dramatic improvements in mechanical reliability. Something else was occurring that prevented a further decrease in the accident rate, so attention turned to other sources of failure, namely flight crew actions. About 95% of aviation incidents are related to breakdowns in human interactions and understanding. The implementation of Crew Resource Management began in the 1970s after a series of deadly accidents and was a global standard by the late 1990s; Highly Reliable Organizing and Resilience Engineering followed five to 10 years later, increasing reliability to its current levels.

The interesting part about this is that reliability is about people—their knowledge, decision-making, and interaction. That means reliability gains can be made at relatively low cost.

Now picture a power plant control room in California, right across the bay from the San Francisco airport and in the landing approach to Oakland airport. In the middle of the room sits an operator staring at a bank of screens. Every so often he moves to check another screen or get a cup of coffee. It’s quiet… on the verge of boring… then all hell breaks loose! Alarms blare, screens fill up, the operator’s heart races. Everything he didn’t want to happen just happened: The plant tripped.

Plant trips result in tangible costs to power companies that are easy to calculate, such as lost generation revenue and penalties. The intangible costs can be staggering and include wear and tear on equipment due to a severe thermal transient. Likelihood of equipment failure is high, posing safety risks as equipment fails around you. Under certain conditions, a plant trip can bring down a portion of the grid. So how do you prepare a team to handle the unexpected with minimal damage?

The operations manager from the plant in California said, “We know we’re going to make mistakes; it’s what we do to prevent them from happening again. In the event that we trip, the key is making sure we don’t wreck a $30 million turbine.”

What he described is called “failing safely,” a concept from Highly Reliable Organizing and Resilience Engineering. Highly reliable and resilient organizations are typically found where high risk and high effectiveness coexist, such as wildland firefighting and aviation.

Highly Reliable Organizing and Resilience Engineering Basics

Most quality and safety systems are designed around factory work, where “[v]ariability is the enemy of quality,” as quality management guru W. Edwards Deming famously pronounced. But power plant work is not like factory work, where employees assemble the same components, over and over. Instead, it is complex and inherently variable (Figure 1). When work is variable, controlling and constraining actions—through highly detailed procedures, for example—can do more harm than good. An approach known as Highly Reliable Organizing (HRO), which is achieved through resilience engineering (RE), can offer a more effective alternative.

HRO and RE are actually two different philosophies from two different groups of people that began about the same time (roughly 10 years ago) and developed in parallel. There are some overlaps and some differences. In working with them at Calpine, we’ve created a blend.

First, a couple of definitions:

■ Reliability is effective operations over a period of time. Reliability is focused on reducing failures.

■ Resilience is the ability to succeed under varying conditions. Resilience is focused on spotting things going wrong in time to correct course and recover or minimize damage. Resilience engineering pays attention to both failure and success. Resilience is a system property.

The first book on HRO, Managing the Unexpected, was written by Karl Weick and Kathleen Sutcliffe in 2001 after studying safe operations on aircraft carrier flight decks, in nuclear power plants, and in air traffic control centers. According to Weick and Sutcliffe, “[t]he common problem faced by all three was a million accidents waiting to happen that didn’t.” They found these common characteristics:

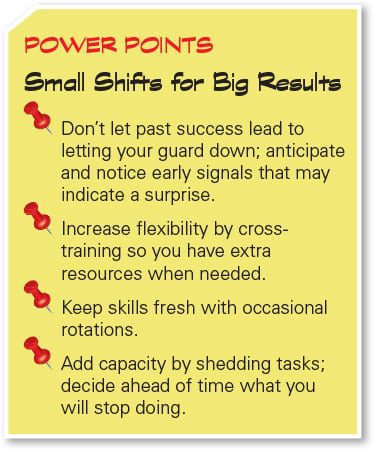

■ Preoccupation with failure. Small signals are seen as possible signs of bigger trouble, we talk about mistakes we don’t want to make, and we are operationally vigilant.

■ Reluctance to simplify. We deliberately create a complete and nuanced understanding of situations. For example, when facing a high-risk situation that’s similar to something we’ve experienced before, we probe the differences.

■ Sensitivity to operations. Leadership is close to the front lines with eyes and ears wide open. We want to know what is actually happening where people could be at risk. We interpret close calls as signs of danger rather than as a success we can move on from.

■ Commitment to resilience. We know that no matter how well we do, there will be surprises. We prepare to handle these events in a way to return to normal operations with minimal harm.

■ Deference to expertise. Decisions migrate to experts. Expert knowledge is tapped at point and time of need. Rigid hierarchies are viewed as slow and vulnerable to errors.

The field of resilience engineering was invented by a group of safety experts who noticed that some things about traditional safety didn’t make sense considering what we know about cognition, complex systems, and work.

For example, studies have shown that we are emotional rather than rational in decision-making and are blind to much of what happens around us. “Being complacent” is a natural state of mind as conscious awareness is fleeting (our minds are prone to daydreaming, mental replay, perceptual tunneling, and so on). This is why admonishments to “be careful” and “pay attention” don’t always work. It didn’t make sense that expert operators became temporarily incompetent. There was a whole lot of work going on that went exceptionally well because of, not despite, the good efforts of people doing the work.

Safety expert Erik Hollnagel noted that resilient organizations have four abilities:

■ Ability to respond to regular and irregular variability, disturbances, and opportunities.

■ Ability to monitor what happens and recognize if something changes so much that it may affect the organization’s ability to carry out its current operations.

■ Ability to learn the right lessons from the right experiences.

■ Ability to anticipate developments that lie further into the future, beyond the range of current operations.

To “engineer” resilient systems, we design according to these principles:

■ Principle 1: Variability and uncertainty are inherent in complex work.

■ Principle 2: Expert operators are sources of reliability.

■ Principle 3: A system view is necessary to understand and manage complex work.

■ Principle 4: It is necessary to understand “normal work.”

■ Principle 5: Focus on what we want: to create safety.

This introduction to the approach continues with details concerning the first two principles. The other principles will be covered in a future article.

Principle 1: Design for Variability

Don’t worry. There is no need to ditch existing systems and practices—they are our starting point. Here are a few examples.

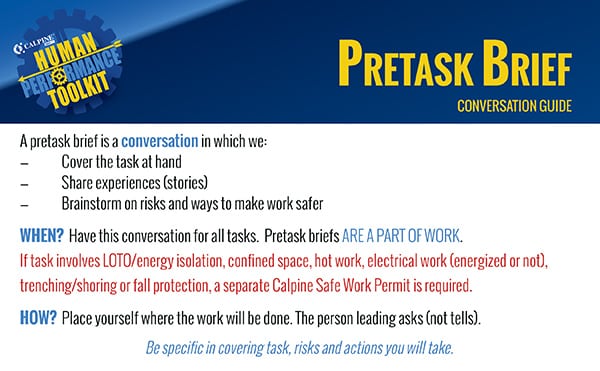

Planning Work. Is your Pretask Brief / Job Safety Analysis / Job Hazard Analysis / Safe Work Permit a “check the box” process filled out by one person and then put away before starting the “real” work? If so, stop. You are missing the boat. It’s not that checklists themselves are somehow bad. They’re not. Aviation, for example, also uses checklists in a rigorous way. But checklists alone don’t go far enough in preparing for the unexpected.

Let’s apply the theories and principles outlined above to preparing to work safely (Figure 2). We want to collaborate and include people who bring different points of view and probe risks with open-ended questions. Think about how responses to the open-ended question, “What are your concerns?” differ from responses to the closed question, “Do you have any concerns?”

2. Meaningful briefs. Consider using pretask briefs that require more engagement from more people than the standard checklist. Courtesy: Calpine Corp.

We learn from success and failure and avoid assuming everything is just like when we did it before; we ask, “What’s different?” We talk about what we will do if we notice the situation changing.

The concept of “sunk costs” heavily influences our decisions, in sometimes negative ways. If we are part of the way down the road, most of us keep going, because it’s not our nature to stop or turn back if we have invested time and effort toward reaching a goal—unless we make the decision of where we will stop before we start. Given this bias, it is important to build “off ramps” where it is okay to turn back. In aviation, this is a go-around for a different approach on landing. Ask, “How could we fail?”

Managing Risk. Resilience engineering departs from traditional risk management in three key ways:

■ Planning for risk. We prepare for the general shape of risk and discuss in advance how we will change our strategy if the risk level changes.

■ Noticing risk. We approach work with intelligent wariness, paying attention to general signs that a situation is risky or that the risk profile of a system, such as an outage, is changing.

■ Responding to risk. We identify critical steps (points of no return where something really bad can happen) and we manage those steps more rigorously, for example, getting peer checks and being more deliberate with our actions.

We can teach ourselves to notice risk through language: “We’ll be really careful” or “I’ve never seen…” or “This will just take a minute” or language that indicates uncertainty (such as “maybe,” “not sure,” or “should be OK”). If we hear (or think) any of these phrases, we should stop and regroup. Escalate, do another pretask brief, and take the time to think through the risks before proceeding.
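For teams that keep written notes from pretask briefs or radio logs, the listening habit described above can be loosely illustrated in code. The following is only a sketch: the phrase list is copied from the examples in this article, while the function name `flag_risk_language` and the idea of scanning text automatically are assumptions for illustration, not a tool used at any plant.

```python
import re
from typing import List

# Phrases taken from the article's examples; the list is illustrative, not complete.
RISK_PHRASES = [
    "we'll be really careful",
    "i've never seen",
    "this will just take a minute",
    "maybe",
    "not sure",
    "should be ok",
]


def flag_risk_language(statement: str) -> List[str]:
    """Return the risk phrases (hypothetical helper) found in a statement."""
    # Normalize case and curly apostrophes so typed and typeset text both match.
    text = statement.lower().replace("\u2019", "'")
    found = []
    for phrase in RISK_PHRASES:
        # Word boundaries keep short phrases from matching inside longer words.
        if re.search(r"\b" + re.escape(phrase) + r"\b", text):
            found.append(phrase)
    return found


if __name__ == "__main__":
    hits = flag_risk_language("It should be OK, this will just take a minute.")
    if hits:
        print("Stop and regroup. Heard:", ", ".join(hits))
```

A match is only a prompt to stop and regroup; the judgment about what to do next stays with the crew.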

We can learn subtle, critical cues that experts notice when a situation isn’t right. Part of this is surfacing what these cues are through interviews because expert users sometimes don’t even realize what they are detecting. A good place to mine critical cues is by sharing near-miss stories (“I heard the other machine start but didn’t think anything of it until it was too late,” “I felt air blowing past my ear and I knew hot oil was not far behind”). What do you tell others to watch out for?

Planning for the general shape of risk is contrasted with traditional, detailed risk assessments such as Failure Mode and Effects Analysis (FMEA), where specific risks are defined, categorized, ranked, and matched with corresponding risk management actions. Planning for the general shape of risk accepts that the future is not completely knowable and that things we never expect to happen happen all the time. We don’t need to predict exactly what could happen to determine what could help us. Help generally comes in two forms: extra capacity and flexibility. The key is to prepare before we need it.

When we treat work as variable, we do a lot of cross-checking and collaboration to make sure we get it right. Shift turnovers are a perfect example, because the way information is communicated can be as important as the information itself.

For example, in healthcare, shift changes used to review patient care in alphabetical order by the patient’s last name. If your name was Arthur Zimmerman and you had a mystery disease, you were out of luck. Hospitals changed the process so that the patient with the most complex situation went first.

Shift change serves to help people understand and make sense of what’s going on with the system (beyond the components they are individually responsible for). Deviations are explicitly mentioned and discussed; no assumptions are made that anything is “probably OK.” The role of the incoming operator is as important as that of the outgoing operator, with the expectation that both question, ask for clarification, and cross-check.
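The hospital change described above, reviewing the most complex case first instead of going alphabetically, amounts to a different sort order for the turnover list. A minimal sketch follows, assuming a hypothetical `HandoffItem` record with a made-up 1-to-5 `complexity` score; neither the record nor the scale comes from the hospitals or from Calpine.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HandoffItem:
    name: str        # patient, unit, or system being handed over
    complexity: int  # hypothetical score: 1 (routine) to 5 (most complex)


def handoff_order(items: List[HandoffItem]) -> List[HandoffItem]:
    """Order turnover items so the most complex situation is discussed first."""
    # Highest complexity first; ties fall back to name for a stable, predictable order.
    return sorted(items, key=lambda item: (-item.complexity, item.name))


if __name__ == "__main__":
    shift_list = [
        HandoffItem("Adams", 1),
        HandoffItem("Zimmerman", 5),
        HandoffItem("Baker", 3),
    ]
    for item in handoff_order(shift_list):
        print(item.name, item.complexity)
```

The same ordering idea could apply to a plant turnover sheet, with deviations and abnormal lineups scored highest so they are covered while attention is freshest.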

Principle 2: Develop Expert Operators

Expert operators are sources of resilience. When you believe this, then everything and everybody is focused on learning. The way that we learn is through having experiences and telling or hearing stories about each other’s experiences.

Humans remember stories better than other forms of information. Stories change behavior, especially if we get caught up in them such that we can imagine being there. Studies show that our brain activity lines up with the storyteller’s, and the memories that are made are almost the same as if we had been there. The Wildland Fire Lessons Learned Center (wildfirelessons.net/home) has examples of different ways to use storytelling for learning.

Gather Stories. Collect stories about incidents where serious injury or damage could have occurred, and group them into common themes for a newsletter. Share these short narratives, including details, so people can imagine themselves in the situation (Figure 3). What did they think was happening? What did they see, hear, smell, or feel? These hints that something was amiss are likely critical cues that can help us notice risk. The Aviation Safety Reporting System offers a great example of how to gather and use short narratives in its Callback newsletter: http://asrs.arc.nasa.gov/.

To surface these stories, ask:

■ What experience do you always share when you’re working with someone new?

■ Could you tell us about a time you or someone else almost got hurt or we almost wrecked something to the point that it scared you?

■ What is one experience where you learned an important lesson—one that keeps you or someone else from getting hurt?

Take Every Opportunity to Learn. Make After Action Reviews a way of life. Do them all the time, but especially if people were surprised, something bad or really good happened, or when you have new people. In an After Action Review, ask:

■ What was expected to happen?

■ What actually happened?

■ What surprised us?

■ What went well and why? (What should we do the same?)

■ What can be improved and how? (What should we do differently?)

■ What did we learn that would help others?

Gain Experience with Surprise. Pay attention to interrelationships in the system. Simulate, walk through, and drill. We are talking about low-tech tabletop exercises and scenario games here, preferably led by those who have actually done the work. A plant trip, which has the potential to expand into a failure cascade, is a great scenario to practice. What would happen if multiple units or pieces of equipment went down at once? What patterns have we noticed in past failure cascades? How will we deal with alarm floods?

Simulation is fundamental to development of air traffic controllers and pilots. They train to deal with the unexpected because the unexpected happens all the time. They go through refresher training every six months, where they handle weather events, hijacking, planes in distress, and system failures. They practice reacting (not discussing) because they have to make decisions quickly—there’s no time for others to weigh in. Going to plan B is normal; when they get to plans D, E, or F, they know they have a real problem. Does this sound familiar?

Shift to Better Manage Risk

Significant gains in human performance can be made through slightly altering existing tools and practices. By shifting perspective, including what we pay attention to, we manage risk better. All of this leads to high reliability and profitability. The best part is that these philosophies make sense. We breathe a sigh of relief and embrace our humanness—with all of our flaws and, most of all, our strengths. ■

Beth Lay is director of human performance at Calpine Corp.