EPRI Bridges Industry R&D Gaps

The technologies used to generate and distribute electricity will be radically transformed during the coming decade. Amid that change, the power industry must continue to meet customer reliability, safety, and cost-of-service expectations. Achieving the right balance among these often-conflicting goals is the primary focus of every utility. The Electric Power Research Institute is helping utilities achieve that balance with R&D programs for many new and emerging technologies.

The Electric Power Research Institute (EPRI) is an independent research and development (R&D) organization focused on solving the power industry’s most challenging technology, system reliability, environmental, and safety problems. In addition, EPRI provides direction and research support for emerging technologies of interest to the industry. EPRI members generate more than 90% of the electricity produced in the U.S., and international membership extends to 40 countries.

EPRI’s goal is to develop a comprehensive vision, broadly shared within the electricity sector and by its stakeholders, of the innovations needed to realize the industry’s opportunities, together with a plan to overcome the associated challenges.

EPRI is working closely with more than 1,400 industry and public advisors and its Board of Directors to bring that vision to life. It has identified six key innovation and research paths that address both the opportunities for and the challenges to the continued delivery of reliable, affordable, and environmentally responsible electricity: Long-Term Operations, Near-Zero Emissions, Renewable Resources and Integration, Water Resource Management, Energy Efficiency (End-to-End), and the Smart Grid. The discussion of each path below describes several notable projects and their current status.

Long-Term Operations

Long-term operations (LTO) research seeks to maximize the substantial value of existing generation, transmission, and distribution (T&D) assets. Obtaining the maximum operational lifetime from current assets will provide an essential economic buffer in the utility planning process. For example, high capacity factors and low operating costs make nuclear power plants some of the most economical, reliable, and environmentally responsible power generators available. Similarly, reliable, long-term performance of current fossil generation and T&D assets is essential to achieving a stable transition to a future electricity supply system.

Protect Nuclear Plants from Cyber Attack. In 2010, various media outlets reported that a computer worm called “Stuxnet”—designed to hijack control systems—had infected an Iranian nuclear fuel enrichment facility. This potential vulnerability highlights the need for more safeguards as nuclear plants replace old analog electronics-based instrumentation and control (I&C) systems with programmable digital systems. In October 2010, EPRI released a cyber security guideline that will help nuclear plant managers ensure that new digital systems comply with federal regulations designed to keep nuclear reactors safe from cyber attacks.

Cyber security is not a new concern. In the wake of the 2001 terrorist attacks, the U.S. Nuclear Regulatory Commission (NRC) mandated that nuclear power plants consider cyber security threats that could expose the public to radiation. The NRC also requires nuclear plants to maintain grid reliability. Therefore, the EPRI guideline also addresses systems that, if hacked, don’t directly pose a radiological risk, such as balance-of-plant control systems.

EPRI’s cyber security guideline provides procedures for implementing cyber security controls in 138 different areas, from passwords and wireless connections to encryption and intrusion detection. The document also provides four increasingly complex examples of how to apply the procedures in an operating nuclear plant. The examples range from a simple, firmware-based programmable relay without digital connections to other systems to a main turbine generator control system with digital assets both in the control room and on the turbine floor. Although demonstrating compliance with all controls requires extensive documentation, many controls can be addressed with existing plant programs such as the design change process and configuration management program. Plant owners and operators who incorporate cyber security into the design process early can make the final assessment of digital systems a much simpler process.

Because a variety of cyber security recommendations already exist, EPRI researchers built on existing guidance instead of developing controls and procedures from scratch. The guideline can help plant managers prepare for the final assessment of new digital systems and ensure a reliable transition to digital I&C. This year, the team will release a training module that provides a brief, multimedia overview of how to use the guideline and its procedures.

Monitor Equipment Health. EPRI has established proof of concept on the use of transient analysis methods for detecting indicators of performance degradation and incipient failure in electric motors and pumps before traditional online monitoring techniques can detect such degradation. Ongoing research is creating a generic methodology for health monitoring during startup and other transients to enhance prognostics, condition-based maintenance, and failure prevention for power plant equipment. Initial development is focused on electric motors, with field testing scheduled for 2012.

Current equipment-monitoring techniques, including advanced pattern recognition, continuously process steady-state sensor data to look for anomalies that may represent precursors of degradation, aging, and failure. However, components often don’t fail during steady-state operation, but during startup, load change, and shutdown cycles, when they are under more stress. Traditional monitoring techniques cannot easily detect anomalies during transient operation. In 2010, EPRI initiated exploratory research to determine whether certain failure precursors may be stronger—and more easily detected—during transients as opposed to during normal conditions.

EPRI assessed existing mathematical methods and algorithms that could be adapted to transient analysis for a variety of high-value components, such as electric motors, electric pumps, and steam turbine generators. Then, in proof-of-concept work at the University of Tennessee, data collected during previous run-to-failure tests of electric motors were analyzed using transient feature extraction techniques.

The EPRI study identified key transient data, including electrical, speed, and vibration signals, and developed prototype prognostic models. Notably, aging-related changes and performance degradation not detectable in high-speed motors under steady-state conditions were shown to be clearly evident in startup data. As a result, pertinent insights on remaining useful life and time to failure were available sooner than is possible using conventional methods.
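The idea of reading degradation from startup data can be illustrated with a minimal Python sketch. It assumes one simple transient feature, the time a motor needs to reach 95% of rated speed, and scores a new startup against a baseline of healthy startups. The synthetic first-order speed curves, the 95% threshold, and the function names are hypothetical; the article does not describe the features or prognostic models EPRI actually used.

```python
import numpy as np

def time_to_fraction(t, speed, rated_speed, fraction=0.95):
    """Return the first time at which speed reaches fraction * rated_speed (NaN if never)."""
    idx = np.flatnonzero(speed >= fraction * rated_speed)
    return float(t[idx[0]]) if idx.size else float("nan")

def startup_z_score(feature, baseline):
    """Standardized deviation of a new startup feature from healthy-baseline startups."""
    baseline = np.asarray(baseline, dtype=float)
    return (feature - baseline.mean()) / baseline.std(ddof=1)

def synthetic_startup(tau, rated=1800.0, duration=5.0, n=5001):
    """Hypothetical first-order run-up: speed = rated * (1 - exp(-t / tau))."""
    t = np.linspace(0.0, duration, n)
    return t, rated * (1.0 - np.exp(-t / tau))

# Healthy starts with a small spread in time constant, then a degraded (slower) start.
healthy = [time_to_fraction(*synthetic_startup(tau), 1800.0)
           for tau in (0.50, 0.51, 0.49, 0.50, 0.52)]
t, degraded_speed = synthetic_startup(0.60)
z = startup_z_score(time_to_fraction(t, degraded_speed, 1800.0), healthy)
print(f"z = {z:.1f}")  # a large positive z flags a slower-than-normal startup
```

A slower-than-normal run-up produces a large positive score; a practical toolkit would combine several electrical, speed, and vibration features rather than rely on a single one.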

Current EPRI research is creating transient analysis methods and models for online monitoring using real-time operating data from laboratory-scale motors and pumps. Once the methods are developed and host plants are identified, field testing of the transient analysis toolkit will begin in 2012 on full-scale, in-service components. Commercial vendors are expected to apply this novel prognostic approach to improve anomaly detection and remaining life assessment for a range of power generation and delivery system components.

Laser Welding for Nuclear Repairs. As nuclear reactors age, the reactor internals and reactor pressure vessel accumulate neutron exposure, and transmutation of elements occurs in these materials. This growing neutron exposure (fluence) gradually raises the helium content of reactor internal components and reduces the material’s weldability by promoting helium-induced cracking. The extent of cracking depends heavily on the heat input used for welding.

One area susceptible to such cracking is the riser brace attachment in boiling water reactors. Conventional arc welding techniques typically are not viable for repairing such components because their high heat input (millions of watts) would overheat the base metal. In contrast, laser welding, which operates at power levels of only about 800 watts, provides the precise heat input control needed to avoid helium-induced cracking and excessive weld metal dilution. In addition, solid-state laser technology is particularly promising for repairing reactor internals because the beam can be delivered to remote locations via optical fiber.
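Welding heat input is commonly expressed as energy delivered per unit length of weld, that is, power multiplied by a transfer efficiency and divided by travel speed. The sketch below applies that relationship to the roughly 800-W laser power cited above; the travel speeds and the 0.8 efficiency are assumptions chosen only for illustration.

```python
def heat_input_j_per_mm(power_w: float, travel_speed_mm_s: float, efficiency: float = 1.0) -> float:
    """Heat input per unit weld length: efficiency * power / travel speed, in J/mm."""
    if travel_speed_mm_s <= 0:
        raise ValueError("travel speed must be positive")
    return efficiency * power_w / travel_speed_mm_s

# ~800 W laser power is from the article; travel speeds and the 0.8 efficiency are assumptions.
for speed in (5.0, 10.0, 20.0):
    print(f"{speed:>5.1f} mm/s -> {heat_input_j_per_mm(800.0, speed, efficiency=0.8):.0f} J/mm")
```

Because heat input scales directly with power at a given travel speed, a low-power process gives fine control over the heat delivered to sensitive base metal.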

EPRI has been evaluating laser welding for several years, and for a variety of applications. Initial research focused on weld overlay of cracked components. Utility interest ultimately led to the first known application of laser technology in a nuclear setting: Eskom’s Koeberg plant in South Africa used laser welding in 2010 to mitigate through-wall cracking in an outdoor stainless steel storage tank. In early 2011, EPRI’s Welding and Repair Technology Center acquired and installed a 2-kW fiber laser system. A fiber laser is a compact, high-powered device that delivers a laser beam through an optical fiber, providing focused energy control.

An essential element of the research program will be demonstrating that laser welding can successfully repair irradiated material samples. Although EPRI can do much of the research needed to assess the viability of the laser welding process, full commercial viability can be demonstrated only with irradiated materials from a test reactor. EPRI is collaborating with the U.S. Department of Energy (DOE) to evaluate a hybrid laser welding process for repairing highly irradiated materials under EPRI’s Long-Term Operations Program and the DOE’s Light Water Reactor Sustainability Program. In addition, EPRI and the DOE are constructing a hot cell at Oak Ridge National Laboratory for welding experiments on irradiated materials used in reactor internals and vessels.

Assess Concrete Integrity. Concrete is a key element in the reliable generation and delivery of electricity, and is found in everything from cooling towers to used fuel storage facilities and transmission infrastructure. The material is generally resistant to aging, becoming stronger over time as it cures. But high temperatures, freeze-and-thaw cycles, and exposure to radiation and chemicals can damage even the sturdiest concrete structures. Not every sign of wear and tear is visible, so EPRI is working to develop tools that can help see flaws deep inside a structure without damaging it—so-called nondestructive evaluation (NDE) methods.

NDE tools exist for other materials, such as metals. However, seeing inside concrete without damaging it is especially difficult because the material is a heterogeneous mix that varies with the composition of the local aggregate used. As plants apply to extend their operating licenses beyond 40 years, the need for new tools to test the integrity of dry cask storage containers, used fuel pools, cooling towers, containment buildings, and other structures will grow. Several NDE techniques have already been developed, and EPRI is working to design new ways of detecting and characterizing potential damage.

In 2011, EPRI installed fiber-optic strain gauges on several concrete structures at Ginna Nuclear Power Plant in upstate New York, including the concrete containment building, to measure real-time strain on the steel cables running through the pre-stressed concrete. Engineers at Ginna periodically pressurize and depressurize the containment building to confirm that it does not leak. During the most recent such test, EPRI researchers also measured the strain the test induced, using digital image correlation.
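The article does not say which fiber-optic sensing principle was used at Ginna. Assuming fiber Bragg grating (FBG) sensors, a common type of fiber-optic strain gauge, strain can be recovered from the shift in reflected wavelength using Δλ/λ0 = (1 - p_e)ε, where p_e is about 0.22 for silica fiber. The sketch ignores temperature effects, and the readings are hypothetical.

```python
def fbg_microstrain(lambda_nm: float, lambda0_nm: float, photoelastic: float = 0.22) -> float:
    """Strain (microstrain) from an FBG wavelength shift, assuming temperature-compensated data.

    Uses d_lambda / lambda0 = (1 - p_e) * strain, with p_e ~ 0.22 for silica fiber.
    """
    return (lambda_nm - lambda0_nm) / (lambda0_nm * (1.0 - photoelastic)) * 1e6

# Hypothetical readings from a 1550-nm grating around a containment pressure test.
baseline = 1550.000
for label, reading in (("pressurized", 1550.120), ("depressurized", 1550.004)):
    print(f"{label}: {fbg_microstrain(reading, baseline):.0f} microstrain")
```

At 1550 nm this works out to roughly 1.2 pm of wavelength shift per microstrain, which is why interrogators with picometer resolution are needed.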

In a separate project, EPRI engineers are examining how radiation can damage concrete in the reactor cavity’s walls and vessel supports, which may lead to the development of new NDE techniques to characterize such damage.

EPRI will be investigating how corrosive materials such as chlorides and boric acid affect concrete. Boric acid is found in used fuel pools at pressurized water reactors, and chloride damage can come from seawater or other sources. Researchers at the Materials Aging Institute in France—a collaborative R&D institute funded by EPRI, the French utility EDF, and the Japanese utilities Tokyo Electric Power Co. and Kansai Electric Power Co.—have already begun looking at the effects of boric acid on concrete. At the same time, the commercial sector is searching for new NDE methods to image voids, cracks, and other internal defects. The most promising techniques will likely be tested in the field in the next five years.

1. New light shines. Advanced laser welding techniques are being evaluated by EPRI for repair of cracked nuclear components. Courtesy: EPRI

Near-Zero Emissions

The industry must develop technologies capable of achieving near-zero emissions (NZE) levels for all current and anticipated pollutants, including CO2. Those technologies must be affordable, have minimal impact on unit operations, and be achievable during flexible operations (plant cycling). The R&D challenges to achieving NZE fall into three categories: energy conversion (minimizing emissions in the energy conversion process), environmental controls (capturing emissions during the conversion process), and advanced generation (developing highly efficient, low-emitting power production technologies).

Improve Mercury Capture. Working closely with the DOE and the power industry, EPRI has conducted extensive mercury control research. One leading mercury control option is the coincidental capture of mercury by selective catalytic reduction (SCR) and flue gas desulfurization (FGD) devices, which are designed to reduce emissions of nitrogen oxides (NOx) and sulfur dioxide (SO2), respectively. The SCR converts much of the mercury to a soluble oxidized form that is then captured by the FGD.

For plants without those emissions controls, injection of fine-powdered activated carbon into the plant’s flue gas can reduce mercury emissions. The activated carbon captures the mercury and is then collected downstream in the particulate control device along with the plant’s fly ash. EPRI’s field tests and survey of activated carbon injection installations at various plant sites aided understanding of this technique’s capabilities, identified potential issues with its implementation and operation, and documented successful mitigation options.

The use of commercial activated carbon can cost a power plant millions of dollars each year. EPRI and the Illinois State Geological Survey developed the “sorbent activation process” (SAP), which enables power plants to produce activated carbon on site from the coal they are firing. Power plants that produce the activated carbon they need on site can eliminate storage and inventory costs. EPRI’s laboratory and field prototype testing showed that the process produced activated carbon comparable to commercial products. Preliminary economic analyses based on these tests predict that SAP can cut the cost of activated carbon by more than half.

But cost is just one of the challenges to successful implementation of activated carbon injection. Activated carbon can contaminate fly ash, rendering it unsuitable for use in concrete. In addition, the technology can increase emissions of particulate matter in plants with small electrostatic precipitators. EPRI has developed a technology called TOXECON that addresses these issues. In the TOXECON system, the activated carbon is injected into the flue gas after it passes through the particulate control device. An additional baghouse downstream captures the activated carbon and mercury as well as any fly ash that escapes the primary particulate control. This configuration segregates the ash collected in the primary particulate control device from the carbon collected in the downstream control device. About a dozen power plants have so far adopted this technology.

Develop New High-Temperature Materials. Advanced ultra-supercritical plants have the potential to reduce fuel consumption, carbon dioxide emissions, and other flue gas emissions while saving utilities money. But the steel alloys typically used to construct steam turbines and boilers aren’t designed to withstand ultra-supercritical temperatures and pressures.

A decade ago, the DOE and the Ohio Coal Development Office, together with Energy Industries of Ohio, selected EPRI to be the technical leader of a consortium of U.S. steam turbine and boiler suppliers and national laboratories. The goal was to identify and test alloys that would enable steam turbines and boilers for advanced ultra-supercritical coal-fired power plants to operate at 1,400°F. Since then, this consortium has tested a number of different alloys that can withstand these harsh conditions.

For the steam boiler portion of the project, components of concern are the boiler headers and piping, superheater/reheater tubes, and waterwall panels. The first step was to identify new alloys.

The crucial limiting factor for these materials is their inherent creep strength. “Creep” is the tendency of solid materials to deform slowly under sustained stress at high temperatures. Materials in an advanced ultra-supercritical plant must have a 100,000-hour creep-rupture strength of approximately 14,500 psi (about 100 MPa) or higher. The boiler components must also withstand the corrosive conditions produced by high-sulfur U.S. coals and resist steam-side oxidation and exfoliation. The EPRI-led team of government and industry researchers has identified several nickel-based alloys as promising candidates and evaluated them in seven areas: mechanical properties, steam-side oxidation, fireside corrosion, welding, fabrication ability, coatings, and changes to current design codes.
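One widely used way to relate short-term creep tests to a 100,000-hour requirement is the Larson-Miller parameter, LMP = T(C + log10 t_r), with T in kelvin, rupture time t_r in hours, and C often taken as about 20. The article does not say which extrapolation method the consortium used, so treat this as an illustrative sketch: it converts the 14,500-psi target to MPa, computes the LMP for 100,000 hours at 1,400°F, and finds the rupture time at a hypothetical 850°C test temperature that gives the same LMP.

```python
import math

PSI_TO_MPA = 0.006894757  # 1 psi = 6.894757 kPa

def fahrenheit_to_kelvin(t_f: float) -> float:
    return (t_f - 32.0) * 5.0 / 9.0 + 273.15

def larson_miller(t_kelvin: float, rupture_hours: float, c: float = 20.0) -> float:
    """Larson-Miller parameter LMP = T * (C + log10(t_r)), T in kelvin, t_r in hours."""
    return t_kelvin * (c + math.log10(rupture_hours))

def equivalent_hours(lmp: float, t_kelvin: float, c: float = 20.0) -> float:
    """Rupture time at another temperature that yields the same LMP (same stress assumed)."""
    return 10 ** (lmp / t_kelvin - c)

target_mpa = 14_500 * PSI_TO_MPA            # ~100 MPa design stress
service_k = fahrenheit_to_kelvin(1400.0)    # 1,400F service temperature (~1033 K)
lmp = larson_miller(service_k, 100_000.0)   # 100,000-hour requirement
test_k = fahrenheit_to_kelvin(1562.0)       # hypothetical accelerated test at 850C
print(f"{target_mpa:.1f} MPa, LMP = {lmp:.0f}, "
      f"equivalent test ~{equivalent_hours(lmp, test_k):.0f} h")
```

Under these assumptions, a specimen that survives roughly 1,000 hours at 850°C at the target stress would be expected to last about 100,000 hours at 1,400°F; actual qualification relies on far more data and code-accepted methods.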

For steam turbines, the project focused on the highest temperature components in four areas: oxidation and erosion resistance of turbine blades, nonwelded rotor materials, welded rotor materials, and castings. Materials and design philosophy for steam turbines are unique to each manufacturer. Alloys are not subject to code approval and thus may or may not have internationally recognized material standards.

The boiler materials development project is scheduled to end in September 2012, and the steam turbine materials development project will end in 2014. As a next step, components made from the most promising alloys will be tested in an operating plant before a commercial-scale 600-MW demonstration plant is constructed.

Develop Laser Sensors to Monitor Emissions. Coal-fired boilers currently rely on a limited number of point measurements of oxygen at the economizer outlet and of NOx at the SCR inlet and outlet. These point measurements do not always represent the bulk flue gas concentration of the monitored species, which limits the uniformity that process control optimization can achieve.

Improved paired measurements of carbon monoxide (CO) and oxygen (O2) and paired measurements of nitric oxide (NO) and ammonia (NH3) in the flue gas of coal-fired boilers have the potential for several benefits. For example, they could enable optimization of the air/fuel distribution to individual burners, thereby enabling lower excess oxygen operation, reduced NOx emissions, and improved unit heat rate. Combined NH3 and NOx measurements could similarly enable optimization of NH3/NOx distribution at the inlet of an SCR reactor, enabling increased NOx reduction performance while maintaining ammonia slip targets.

A recent EPRI-sponsored study designed, lab-tested, and field-tested prototype laser-based sensors for measurement of CO and NO at typical coal-fired boiler flue gas conditions at the economizer outlet. This technology could allow line-of-sight average measurements that are more representative of the bulk flue gas concentration of the species being monitored and improve the degree of uniformity that could be achieved through process control optimization.
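Laser absorption sensors of this kind rest on the Beer-Lambert law: the measured absorbance, -ln(I/I0), equals an absorption cross-section times the number density of the absorbing species integrated along the beam. Inverting it therefore yields a path-averaged concentration rather than a point value. The sketch below assumes a uniform cross-section, an ideal-gas number density, and a hypothetical 10-m path through a duct with a non-uniform CO profile; none of the numbers come from the EPRI study.

```python
import numpy as np

K_B = 1.380649e-23  # Boltzmann constant (J/K)

def number_density_cm3(pressure_pa, temp_k):
    """Total gas number density (molecules/cm^3) from the ideal gas law."""
    return pressure_pa / (K_B * temp_k) * 1e-6

def path_average_mole_fraction(transmission, sigma_cm2, path_cm, pressure_pa, temp_k):
    """Invert Beer-Lambert, -ln(I/I0) = sigma * N_avg * L, for the path-averaged mole fraction."""
    absorbance = -np.log(transmission)
    return absorbance / (sigma_cm2 * path_cm * number_density_cm3(pressure_pa, temp_k))

# Hypothetical duct: 10-m (1,000-cm) path, atmospheric pressure, 600 K, assumed cross-section.
sigma, L, p, T = 1e-20, 1000.0, 101_325.0, 600.0
x = np.linspace(0.0, L, 201)
profile = 200e-6 + 150e-6 * np.sin(np.pi * x / L) ** 2            # non-uniform CO mole fraction
column = np.sum(0.5 * (profile[1:] + profile[:-1]) * np.diff(x))  # trapezoid integral along beam
measured_T = np.exp(-sigma * number_density_cm3(p, T) * column)   # transmission seen by detector

avg = path_average_mole_fraction(measured_T, sigma, L, p, T)
print(f"path average {avg * 1e6:.0f} ppm vs. point sample at the wall {profile[0] * 1e6:.0f} ppm")
```

The line-of-sight result (275 ppm) falls between the 200-ppm wall value and the 350-ppm core value, which is why such measurements better represent the bulk flue gas than a single point probe.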

Prototype absorption sensors for CO and NO in the combustion flue gas at the economizer exit of a coal-fired boiler were designed using the results of fundamental spectroscopy and lessons learned from laboratory experiments. First-generation prototype sensors for CO and NO were constructed, tested in a laboratory, and evaluated in a field measurement campaign on a 300-MW tangential design boiler. The results of this initial proof-of-concept field test campaign were used to design improved, second-generation sensors, which were evaluated in the laboratory and then used during a second field measurement campaign at the same facility.

Practical sensors must perform over longer path lengths, which entail greater transmission loss and more absorption, and they must be more robust and better optimized. For the CO sensor, higher-power lasers will be essential to ensure sufficient transmission. For remote monitoring in temperature-controlled environments, such as monitoring with near-infrared laser systems that use ammonia refrigeration, a more stable, low-loss fiber delivery capability over long distances is desired at the mid-infrared 2.3-µm wavelength. For the NO sensor, for which high-powered lasers are already available, a more economical and robust laser at one of the selected wavelengths is needed to reduce sensor cost.

Measure Low Concentrations of Mercury. Many coal-fired utilities are expected to be required to continuously measure mercury concentrations below 1.0 μg/m3. However, few if any data are available on whether monitors can measure validly at such low levels.

A recent EPRI study evaluated, at pilot scale, the variability of two continuous mercury monitors (CMMs) when measuring mercury at low concentrations (<1.0 µg/Nm3). The study was funded by EPRI, the Illinois Clean Coal Institute, the DOE, and affiliates of the Energy & Environmental Research Center’s Center for Air Toxic Metals.

Tests were conducted with CMMs manufactured by Tekran and ThermoScientific, which are the two CMMs most widely used by the utility industry. The variability of the instruments was determined and compared to a reference method (Environmental Protection Agency Method 30B—sorbent traps).

For the first two weeks, the combustor fired natural gas, and mercury-spiking systems added various levels of elemental mercury and mercury(II) chloride to the combustor. The tests were then repeated with hydrogen chloride and SO2 also added. For each test condition, at least one set of four sorbent trap samples was taken simultaneously.

In the third week, an Illinois eastern bituminous coal was fired in the pilot-scale combustor. To reduce the mercury concentration to the sub-µg/Nm3 range of interest, the flue gas was passed through an electrostatic precipitator, then a high-efficiency fabric filter, and finally a wet lime-based scrubber. Again, at least one set of four sorbent trap samples was taken each day.

At the completion of testing, the data were statistically analyzed to determine the variability associated with each part of the process and the true lower limit of quantification for each CMM.
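The statistical analysis is not described in detail, but its basic ingredients can be sketched: the scatter among simultaneous sorbent-trap samples, the bias of a CMM relative to the Method 30B reference, and a lower limit of quantification. The sketch uses hypothetical readings and one common analytical convention (LOQ taken as ten times the standard deviation of replicate low-level results); the study may have used different methods.

```python
import statistics

def relative_std(values):
    """Relative standard deviation (%) of replicate measurements."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

def relative_bias(cmm_values, reference_values):
    """Percent difference of the CMM mean from the sorbent-trap (reference) mean."""
    ref = statistics.mean(reference_values)
    return 100.0 * (statistics.mean(cmm_values) - ref) / ref

def loq_estimate(low_level_replicates, k=10.0):
    """One common convention: limit of quantification = k * SD of low-level replicates."""
    return k * statistics.stdev(low_level_replicates)

# Hypothetical readings in ug/Nm3 for one test condition (not EPRI data).
quad_traps = [0.52, 0.55, 0.50, 0.53]
cmm_readings = [0.58, 0.57, 0.60, 0.59]
print(f"trap RSD {relative_std(quad_traps):.1f}%, "
      f"CMM bias {relative_bias(cmm_readings, quad_traps):+.1f}%")
print(f"LOQ ~{loq_estimate(cmm_readings):.3f} ug/Nm3")
```

Repeating this per test condition, and comparing how the scatter grows as concentration falls, is one way to locate the level below which a monitor's readings stop being quantitative.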

Renewable Resources and Integration

Renewable energy is fundamentally changing the electricity industry’s strategic landscape. Some projections indicate that by 2030 renewables could account for more than 20% of the electricity generated and delivered globally. To affordably and reliably generate and integrate renewable resources, the electricity industry will need innovative solutions to address critical challenges, including these:

- Enable renewable generation technology options—wind, water, solar photovoltaic, solar thermal, biomass, and geothermal energy—that are cost-competitive over the long term with other low-carbon forms of power generation.

- Maintain electric grid reliability with high penetrations of variable wind and solar energy.

- Understand and minimize environmental impacts of renewable energy resources on a large scale.

Develop Single-Well Geothermal Systems. The vast majority of existing geothermal power plants draw energy from reservoirs of steam or high-temperature liquid water in permeable rock. Production wells bring the fluids to the surface, where their heat is converted to electricity through a steam turbine generator. These systems typically are risky and expensive because they require exploration and characterization of the geothermal resource to determine its viability. This often necessitates the drilling of deep wells.

In 2009, EPRI began exploring a novel, closed-loop approach for mining heat from dry rock formations while avoiding the drilling of injection and production wells. A single-well engineered geothermal system technology could accelerate energy capture from regions where there is heat but no liquid.

EPRI evaluated one such system. The concept relies on existing wells, such as abandoned oil or gas recovery wells or commercially failed (crippled) geothermal wells. Power producers would pump their own fluid into the well in a closed loop, bring the heated fluid back to the surface, and convert the heat into electricity using either commercial binary-cycle or reverse air conditioning technology. The single-well system employs a down-hole heat exchanger with a specialized heat transfer fluid to maximize heat exchange.

Using some of the vast inventory of abandoned or underused wells to capture underground heat could eliminate some of the risk associated with geothermal energy. Power companies can measure temperatures at the well bottom without having to invest several million dollars to drill the well first. Adapting single-well systems could also expand the geothermal resource. Existing geothermal power plants must be located above moderate- to high-temperature geothermal resources where there are reservoirs of steam or liquid water in permeable rock. These conditions exist in a few relatively small geographic areas of the world. Recoverable geothermal resources that rely on hot dry rock, on the other hand, are estimated to exceed 500,000 MW of generating capacity and offer deployment potential of 100,000 MW by 2050.

In a recently published report, EPRI researchers examined the amount of energy that could be extracted using single-well geothermal systems and how much such systems would cost. They modeled down-hole components and analyzed the potential of drilling, conduction, heat transfer fluid, and fluid enhancements for maximizing heat extraction. Their work suggests that a single-well system will be optimal if it is installed in a fractured or porous medium in a highly saturated condition. Single-well geothermal technology could be ready for commercial application in new, depleted, or abandoned oil and gas fields in just a few years. Individual wells could have a potential yield of 0.5 MW to 1 MW.
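A first-order estimate of what a single well must deliver follows from an energy balance on the circulating fluid: thermal power equals mass flow times specific heat times temperature gain, and electric output is that thermal power times the conversion efficiency. The sketch below estimates the flow needed for the 0.5-MW to 1-MW range cited above; the water-like specific heat, 60-K temperature gain, and 10% cycle efficiency are assumptions, not values from the EPRI report.

```python
def thermal_power_w(mass_flow_kg_s, cp_j_kg_k, delta_t_k):
    """Heat carried to the surface by the circulating fluid: Q = m_dot * cp * dT."""
    return mass_flow_kg_s * cp_j_kg_k * delta_t_k

def required_flow_kg_s(electric_w, efficiency, cp_j_kg_k, delta_t_k):
    """Mass flow needed for a target electric output at a given thermal-to-electric efficiency."""
    return electric_w / (efficiency * cp_j_kg_k * delta_t_k)

# Assumptions (not from the EPRI report): water-like fluid, 60 K gain, 10% cycle efficiency.
CP, DT, ETA = 4186.0, 60.0, 0.10
for target_mw in (0.5, 1.0):
    flow = required_flow_kg_s(target_mw * 1e6, ETA, CP, DT)
    print(f"{target_mw} MWe needs ~{flow:.0f} kg/s ({thermal_power_w(flow, CP, DT) / 1e6:.1f} MWt)")
```

Under these assumptions each well would need to return roughly 20 to 40 kg/s of fluid, which illustrates why heat transfer from the surrounding rock into the down-hole heat exchanger matters so much.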

Detect Wind Turbine Blade Flaws. Wind turbine blades are expected to last millions of operating cycles. However, flaws in turbine blades—either production defects or defects that arise during operation—can cause cracks or other damage, leading to expensive repairs. In severe cases, flaws can lead to catastrophic failure of the blade and damage to the wind turbine.

The primary method for examining wind turbine components is visual inspection. However, this method cannot detect flaws that lie beneath the blade’s surface. To address this challenge, EPRI is working to develop NDE techniques for wind turbine components, including blades. One method capable of examining large surfaces is laser shearography, a technique used in the aerospace industry. In 2010, EPRI researchers demonstrated in the laboratory the feasibility of using laser shearography to assess the health of wind turbine blades.

Defects in wind turbine blades that lie beneath the surface produce slight inconsistencies in the continuity of the blade’s surface. These changes are not visible to the naked eye. Laser shearography relies on a shearography camera with a built-in laser. The laser illuminates the blade’s surface, and the light reflected off the blade travels into the camera, which relies on “interference patterns” to detect flaws. The technology produces a three-dimensional image of the defect.

EPRI researchers recently tested laser shearography on a wind turbine blade during fatigue testing at the National Renewable Energy Laboratory’s (NREL’s) test facility in Golden, Colo. Using this technology, the team found a large, previously undetected delamination on the high-pressure side of the blade. After 25,000 cycles, a crack appeared along the edge of the delamination, and it grew during subsequent fatigue cycles until the test was stopped at 2.4 million cycles.

Laser shearography has advantages over other NDE techniques. First, it is one of the few techniques that can be employed once a blade has been installed. Second, EPRI research indicates that the device may work even when mounted on the ground, eliminating the need for a crane or inspector. Third, the laser can scan a relatively large area—on the order of 43 square feet—enabling operators to conduct inspections quickly.

As part of this three-year project, EPRI researchers plan to test laser shearography in the field. Improved shearography techniques will result in more thorough structural assessments of blades by making it easier to detect flaws. In addition, EPRI plans to collaborate with Sandia National Laboratories’ Blade Reliability Collaborative in the development of flaw and degradation analysis models. These models will help wind turbine owners and operators determine whether blades with defects must be repaired or replaced. Some flaws may not pose a threat to the structural integrity of the blade (Figure 2).

2. Detecting blade faults. Using laser technology, structural faults in wind turbine blades can be detected before failure occurs. Courtesy: EPRI

Detect Bats Near Wind Turbines. The spinning blades of wind turbines can cause significant bat kills, especially when migratory species are on the move. A recent paper projected that turbines in the Mid-Atlantic region could be killing 33,000 to 111,000 bats each year by 2020. Concerns about bat mortality have put some wind power projects on hold, while the economic viability of others is threatened by mitigation strategies such as seasonal curtailment of operations.

EPRI is developing an automated bat protection system that will use an array of ultrasonic microphones mounted on the turbine to detect the calls bats use to navigate and find prey. When the “bat protector” detects bats, it will automatically slow or stop the turbine blades.
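A simple way to flag possible bat calls is to measure how much of each microphone frame's spectral energy falls in an ultrasonic band and to request curtailment when several frames cross a threshold. This is a minimal sketch, not EPRI's detection software: the 250-kHz sample rate, the 20-kHz to 60-kHz band, the threshold, the two-frame rule, and the synthetic 40-kHz tone standing in for a call are all assumptions.

```python
import numpy as np

def band_energy_fraction(frame, sample_rate_hz, f_lo_hz, f_hi_hz):
    """Fraction of a frame's spectral energy between f_lo and f_hi (Hann-windowed FFT)."""
    spectrum = np.abs(np.fft.rfft(frame * np.hanning(len(frame)))) ** 2
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate_hz)
    in_band = (freqs >= f_lo_hz) & (freqs <= f_hi_hz)
    total = spectrum.sum()
    return float(spectrum[in_band].sum() / total) if total > 0 else 0.0

def should_curtail(frames, sample_rate_hz, band=(20e3, 60e3), threshold=0.1, min_hits=2):
    """Hypothetical rule: request a slowdown when enough frames look like ultrasonic calls."""
    hits = sum(band_energy_fraction(f, sample_rate_hz, *band) > threshold for f in frames)
    return hits >= min_hits

fs = 250_000                                  # assumed microphone sample rate (Hz)
t = np.arange(1024) / fs
rng = np.random.default_rng(0)
rotor_noise = np.sin(2 * np.pi * 500 * t)     # low-frequency stand-in for rotor noise
call = 0.5 * np.sin(2 * np.pi * 40_000 * t)   # 40-kHz tone standing in for an echolocation call
quiet = [rotor_noise + 0.01 * rng.standard_normal(t.size) for _ in range(3)]
active = [rotor_noise + call + 0.01 * rng.standard_normal(t.size) for _ in range(3)]
print(should_curtail(quiet, fs), should_curtail(active, fs))
```

Low-frequency rotor noise leaves the band nearly empty, so the quiet frames do not trigger; separating real calls from broadband mechanical noise in the field is what turbine-mounted testing is meant to probe.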

The project’s first objectives were to select the best commercially available ultrasonic microphones and identify the optimum number and configuration of microphones around the turbine. EPRI used recorded calls from big brown bats and small-footed bats—species found throughout North America—to evaluate existing echolocation detection hardware and software under turbines at the Cedar Creek Wind Resource Area, a 200-MW wind farm in Colorado. EPRI researchers used ground-mounted transmitters and receivers to test the system’s capabilities at various distances and in different configurations. The receivers could detect the calls 90% of the time when the transmitter was less than 15 feet away. The angle of the transmitter also influenced the receiver’s ability to detect calls.

As a next step, EPRI researchers will test the microphones by mounting them on a functioning GE wind turbine at NREL. Mounting the system on an operating turbine will test the ability of the hardware and software components to distinguish bat calls from rotor noise. Bats aren’t common near Golden, so the team will use a remote-controlled device to broadcast the calls of silver-haired bats, hoary bats, and eastern red bats (the species most at risk) to test the microphones’ capabilities.

If all goes well, the team will collaborate with a host utility to conduct further field testing. Assuming that the tests prove successful, EPRI plans to collaborate with a turbine manufacturer to incorporate bat detection as a control system input (Figure 3).

3. Bat detective. New technology will be able to distinguish bat calls from rotor noise in order to slow or stop blades when bats are present. Courtesy: EPRI

Combine Energy Storage and Wind Generation. Because of the rapid expansion of wind generation and transmission constraints in certain regions, utilities are experiencing substantial curtailment of wind power. One study found that, because of transmission issues and other factors, the mean realized capacity factor for wind farms in Europe over the past five years was below 21%, while preconstruction expectations were in the 30% to 35% range.
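Capacity factor is the ratio of the energy a plant actually delivers to the energy it would deliver running at rated output for the whole period. The sketch below uses a hypothetical 100-MW wind farm, not data from the cited study, to show how the gap between a 30% preconstruction estimate and a roughly 21% realized value translates into lost energy.

```python
HOURS_PER_YEAR = 8760


def capacity_factor(energy_mwh: float, rated_mw: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Delivered energy as a fraction of energy at full rated output."""
    return energy_mwh / (rated_mw * hours)


# Hypothetical 100-MW wind farm (illustrative numbers only)
rated_mw = 100.0
expected = capacity_factor(262_800, rated_mw)   # preconstruction estimate
realized = capacity_factor(184_000, rated_mw)   # after curtailment and other losses
lost_mwh = (expected - realized) * rated_mw * HOURS_PER_YEAR

print(f"expected {expected:.1%}, realized {realized:.1%}, lost {lost_mwh:,.0f} MWh")
```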

The ability to store wind energy could help utilities maximize wind farm capacity factors and reduce or eliminate curtailment, provide dispatchable capability for wind generation, increase the operational flexibility of isolated grids, facilitate higher wind penetration, and reduce generation portfolio fuel burn.

A recent EPRI study explored the potential benefits of directly integrating energy storage (ES) with wind generation. The study compared several ES technologies and calculated the general profitability of the preferred option for a particular location, providing a quantitative analysis of the potential for integrating onsite ES with wind generation.

In the first phase of the two-phase project, the project team identified the most promising ES options currently available. Technologies investigated included several battery systems (for example, sodium-sulfur batteries), pumped hydro, compressed air energy storage (CAES), and hydrogen or ammonia production for end use. CAES technologies were found to be the preferred options.

In the second phase, the team conducted a high-level engineering and economic analysis of the profitability of the preferred ES option for a particular application and location: a specific existing wind farm in the Midwest, over a one-year study period from May 1, 2010, through April 30, 2011. The analysis included capital costs, operating costs, and the determination of CAES plant dispatch and revenues from participation in the energy and ancillary services markets of the Midwest Independent Transmission System Operator.
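The article does not say how the CAES dispatch was determined. Purely as an illustration of what "dispatch and revenues" means, the sketch below simulates a simple threshold rule for a generic storage plant in an hourly energy market: buy when prices are low, sell when they are high, with round-trip losses applied on charging. The function name, thresholds, and prices are hypothetical. The sketch ignores the natural gas a conventional CAES plant burns during expansion, ignores ancillary services revenue, and is a heuristic rather than the optimization a real study would use.

```python
from typing import List, Tuple


def simulate_threshold_dispatch(prices: List[float], power_mw: float,
                                capacity_mwh: float, efficiency: float,
                                charge_below: float,
                                discharge_above: float) -> Tuple[float, List[float]]:
    """Hour-by-hour threshold dispatch of a generic storage plant.

    Charges (up to power_mw) when the price is at or below charge_below and
    discharges (up to power_mw) when it is at or above discharge_above.
    Round-trip losses are lumped into charging. Returns net energy-market
    revenue in dollars and the stored energy (MWh) at the end of each hour.
    """
    stored = 0.0
    revenue = 0.0
    trace = []
    for price in prices:  # $/MWh, one value per hour
        if price <= charge_below and stored < capacity_mwh:
            bought = min(power_mw, (capacity_mwh - stored) / efficiency)
            stored += bought * efficiency   # only part of the purchase is recoverable
            revenue -= bought * price
        elif price >= discharge_above and stored > 0.0:
            sold = min(power_mw, stored)
            stored -= sold
            revenue += sold * price
        trace.append(stored)
    return revenue, trace


revenue, trace = simulate_threshold_dispatch(
    prices=[20.0, 20.0, 80.0, 80.0], power_mw=10.0, capacity_mwh=15.0,
    efficiency=0.75, charge_below=30.0, discharge_above=60.0)
print(revenue, trace)
```

Here the plant buys 20 MWh at $20/MWh and sells the recoverable 15 MWh at $80/MWh, which shows how efficiency losses and price spreads together set arbitrage revenue.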

Additional value that was not attributed to the CAES plant in this financial analysis includes potential investment tax credits for the CAES plant capital costs, benefits to the wind farm owner such as increased production tax credits, higher nodal locational marginal prices during congestion or high-wind periods, transmission asset benefits and enhanced Independent System Operator (ISO) system flexibility (such as operation as a controllable load), reduced cycling of ISO fossil plants that have poor heat rates and higher maintenance costs, and facilitation of increased renewable energy penetration.

Water Resource Management

Water presents three strategic challenges and opportunities for the electric sector:

- Using less water for power production conserves a scarce resource for other necessary uses.

- Minimizing the environmental impacts of water use for power production preserves environmental resources and protects human health.

- Using efficient electric technologies for water treatment, transport, desalinization, and industrial processes both reduces water demand and conserves electricity.

EPRI has research projects under way in all three categories.

Develop New Utility Water Policies. Because power plants rely on large quantities of water for cooling and other normal operations, they often face pressure to reduce freshwater use. EPRI researchers are developing a prototype decision support system called Water Prism that can be used to analyze current and projected water demand and supply in a geographical area such as a watershed. This software will help power generators compare different water management strategies and determine whether these strategies will lead to sustainable water use.

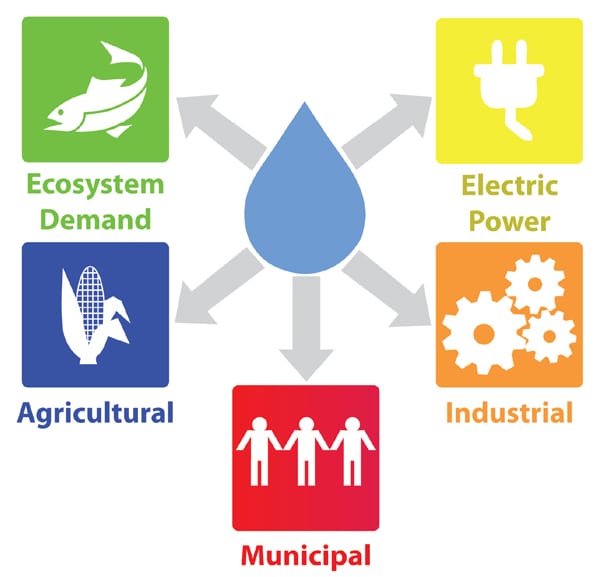

Water Prism compares total water demand from all sectors—including electric power, agricultural, municipal, industrial, and the ecosystem—to total available freshwater supply. The program takes into account not only current demand and supply but also projections for the next 50 years. Power companies and other sectors can then explore the impact of different water-saving strategies, such as using effluent from a wastewater treatment plant or agricultural runoff, on total freshwater demand. By comparing those reductions with total supply, they can determine whether a given management strategy will lead to sustainable water use. The data required for Water Prism include climate, land use, current water withdrawal and discharge records, and projected water demands.
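The core comparison described above (total demand across sectors versus available supply, year by year, with and without a water-saving strategy) can be sketched as follows. The function, sector names, and numbers (in hypothetical million gallons per day) are illustrative assumptions, not Water Prism's data model or interface.

```python
from typing import Dict, List, Optional


def shortfall_years(demand: Dict[str, List[float]], supply: List[float],
                    savings: Optional[Dict[str, List[float]]] = None) -> List[int]:
    """Return the indices of years in which net demand exceeds available supply."""
    savings = savings or {}
    short = []
    for year, available in enumerate(supply):
        total = sum(series[year] for series in demand.values())
        total -= sum(series[year] for series in savings.values())  # strategy offsets
        if total > available:
            short.append(year)
    return short


# Hypothetical three-year projection for one watershed
demand = {
    "electric_power": [400.0, 420.0, 440.0],
    "agricultural":   [300.0, 310.0, 320.0],
    "municipal":      [150.0, 160.0, 170.0],
    "industrial":     [100.0, 100.0, 100.0],
    "ecosystem":      [200.0, 200.0, 200.0],
}
supply = [1180.0, 1180.0, 1180.0]

baseline = shortfall_years(demand, supply)
# Hypothetical strategy: power plants switch part of their intake to treated effluent
with_reuse = shortfall_years(demand, supply, {"effluent_reuse": [0.0, 30.0, 60.0]})
print(baseline, with_reuse)
```

An empty result for the strategy case is the sketch's stand-in for "sustainable water use" over the projection horizon.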

Other models exist to assess various water management strategies, but Water Prism’s graphics, which are similar to the graphics used in EPRI’s Prism analysis for greenhouse gas emissions, make it easy for stakeholders and the general public to grasp the results. The software represents water use in each sector as colored bands, which combine to form a prism equal to the total water use by all sectors, allowing analyses of different water management strategies.

EPRI researchers plan to complete a working prototype of Water Prism by the end of 2011 and are testing and refining the software using data from the Muskingum River watershed in Ohio, an 8,000-square-mile area with five electric power plants (Figure 4).

4. Prism vision. Power companies can use EPRI’s Water Prism in-house to help develop their own water use strategies or to site new plants. Alternatively, they can use the analysis and resulting strategies to lead broad efforts with other stakeholders to develop a unified water use plan for an entire region. Source: EPRI

Reduce Cooling Tower Evaporative Loss. In the U.S., thermoelectric power accounts for approximately 40% of freshwater withdrawals and approximately 3% of freshwater consumption. In specific regions and during drought years, water may not be available at this rate of withdrawal. Looking ahead, growth pressures and tightening regulations are expected to further increase the industry’s need to reduce water use and consumption. Innovative water reduction technologies are therefore needed to enhance long-term operational performance and new plant siting options, particularly in water-constrained regions.

For typical Rankine-cycle steam plants with closed-cycle wet cooling, the majority of freshwater use (~90%) is for cooling. Reducing water requirements for thermal power plant cooling represents the best opportunity for in-plant water conservation. As existing water-saving cooling technologies have high capital costs, energy penalties, and operations and maintenance impacts, the development of new, cost-effective cooling technologies has been the focus of EPRI’s Water Use and Availability Technology Innovation Program.
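To see why evaporation drives cooling water demand, a common engineering rule of thumb estimates cooling tower evaporation as about 0.00085 × circulating flow × range (°F), and a dissolved-solids mass balance gives blowdown plus drift as evaporation ÷ (cycles of concentration − 1). The sketch below applies both. The factor is an approximation (not an EPRI figure) and the tower parameters are hypothetical.

```python
from typing import Tuple

# Rule-of-thumb evaporation factor: fraction of circulating flow evaporated
# per °F of cooling range. A common approximation, not an EPRI value.
EVAP_FACTOR_PER_F = 0.00085


def cooling_tower_water(circulating_gpm: float, range_f: float,
                        cycles: float) -> Tuple[float, float, float]:
    """Return (evaporation, blowdown + drift, makeup), all in gallons per minute."""
    if cycles <= 1:
        raise ValueError("cycles of concentration must be greater than 1")
    evaporation = EVAP_FACTOR_PER_F * circulating_gpm * range_f
    # Dissolved-solids balance: liquid water leaving = evaporation / (cycles - 1)
    blowdown_and_drift = evaporation / (cycles - 1)
    makeup = evaporation + blowdown_and_drift
    return evaporation, blowdown_and_drift, makeup


# Hypothetical tower: 100,000 gpm circulating, 20°F range, 4 cycles of concentration
evap, bd, makeup = cooling_tower_water(100_000, 20, 4)
print(f"evaporation {evap:,.0f} gpm, blowdown+drift {bd:,.0f} gpm, makeup {makeup:,.0f} gpm")
```

Under these assumptions evaporation is three quarters of the makeup water, and every gallon of evaporation avoided also reduces the blowdown needed to control dissolved solids.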

Based upon responses to an initial request for information in 2011, EPRI is launching research on several technologies that have the potential for significant water efficiency gains at both fossil and nuclear units, including the development of heat absorption nanoparticles for reducing cooling tower evaporative loss.

This novel cooling technology consists of nanoparticles, each with an outer ceramic/metal shell and an internal phase-change material core, that, when added to a coolant fluid, significantly increase both the fluid’s rate of heat transfer and its heat capacity. This “nanofluid” with enhanced thermal properties would result in reduced flow rates, reduced evaporation and drift water loss, and overall power plant water consumption reductions of up to 20%. The technology has the added appeal of being broadly applicable to new and existing cooling systems and, in concept, it could be relatively cost-effective to retrofit to existing cooling towers.
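The link between higher heat capacity and lower flow follows from the heat balance ṁ = Q / (c_p ΔT): for a fixed heat duty and temperature range, raising the coolant's effective heat capacity lowers the required mass flow in proportion, and drift losses scale with circulating flow. The sketch assumes a hypothetical 600-MW heat duty, a 10 K range, and a 15% effective heat capacity gain. Treating the phase-change core as an effective c_p is a simplification, and none of these values come from the ANL or EPRI work.

```python
CP_WATER_KJ_PER_KG_K = 4.186  # specific heat of liquid water near room temperature


def required_mass_flow_kg_s(heat_duty_kw: float, cp_kj_per_kg_k: float,
                            delta_t_k: float) -> float:
    """Coolant mass flow needed to absorb a heat load: m_dot = Q / (cp * dT)."""
    return heat_duty_kw / (cp_kj_per_kg_k * delta_t_k)


heat_duty_kw = 600_000.0   # hypothetical condenser heat rejection, kW
delta_t_k = 10.0           # hypothetical cooling range, K
cp_gain = 1.15             # hypothetical effective heat capacity gain from the nanofluid

water_flow = required_mass_flow_kg_s(heat_duty_kw, CP_WATER_KJ_PER_KG_K, delta_t_k)
nanofluid_flow = required_mass_flow_kg_s(heat_duty_kw,
                                         CP_WATER_KJ_PER_KG_K * cp_gain, delta_t_k)
flow_reduction = 1 - nanofluid_flow / water_flow   # equals 1 - 1/cp_gain

print(f"water {water_flow:,.0f} kg/s, nanofluid {nanofluid_flow:,.0f} kg/s, "
      f"reduction {flow_reduction:.1%}")
```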

This unique heat absorption nanoparticle concept and the underlying technology were developed at Argonne National Laboratory (ANL). EPRI is launching a project with ANL to further develop and demonstrate a working nanofluid using these particles that can be utilized specifically for power plant cooling needs. Computer models will optimize the thermal properties of nanoparticles needed for such applications, and then laboratory synthesis and pilot testing will further optimize the candidate nanoparticles.

The process also will be designed to minimize any potential release of the nanoparticles to the environment, as well as any material degradation caused by the nanofluid itself.

Finally, prototype testing of an optimized nanofluid will be performed with a scaled-down cooling tower and condenser system, and an economic feasibility study for a 500-MW power plant will be conducted.

If these breakthrough project goals are met, larger-scale demonstrations of the heat absorption nanoparticles technology could be conducted through specific utility application–focused programs at EPRI, such as the Advanced Cooling Technology or Advanced Nuclear Technology programs. EPRI plans to develop a suite of such water-conserving power plant cooling advancements over the next several years to expand regional- and utility-specific options for long-term operations and new plant construction.

Form the Water Research Center. Water management restrictions for all types of electric generating units continue to increase due to rising water costs, stakeholder pressure, and/or new regulations. Regulatory changes may include the following:

- Water withdrawal taxes to force conservation and reuse.

- Mandated limitations on water withdrawals, including prohibition against once-through cooling.

- Permit changes and/or revisions to the Steam Electric Effluent Guidelines.

- Zero liquid discharge (ZLD) mandates.

EPRI has formed a research collaborative with Georgia Power Corp. and Southern Company Services to support the transition to a future that limits traditional water intake volumes by developing a Water Research Center (WRC) that is holistically focused on these issues. The WRC is located at Plant Bowen in Cartersville, Ga. Research will focus on meeting future water and wastewater restrictions and enabling sustainable water use practices in utility operations.

The WRC also will serve as a facility for evaluating the performance of new technologies and technical approaches to ensure that they are properly vetted. This project is the first step toward these goals: planning and developing the WRC as an industrywide resource at which potential end users, vendors, and resource agencies can test new technologies cost-effectively, with the help of specialists in the area and standardized protocols.

The WRC will provide an infrastructure and specialist staff for testing tools and technologies aimed at reducing water consumption and developing/demonstrating cost-effective treatment technologies for potential wastewater contaminants. Projects could include:

- Wastewater treatment (FGD discharge and low-volume wastewaters)

- Zero liquid discharge

- Advanced cooling

- Moisture recapture and other techniques to reduce water needs

- Degraded water use

- Disposal management for water treatment/ZLD solids

- Water balance and monitoring tools

A project kickoff meeting was held in November 2011. Some projects already are under way, and construction of the new WRC facilities is expected to be completed in late 2012.

Energy Efficiency (End-to-End)

Energy efficiency is widely acknowledged as a resource that helps maintain reliable and affordable electric service, reduce emissions, and save resources. Efficiency goals are mandated in 24 states and are under consideration in others. The continued development and adoption of energy-efficient technologies and best practices are essential to realizing these goals. Utility energy efficiency activities have focused on incentives for end-use customers to adopt relatively mature technologies, most notably compact fluorescent lamps. However, realizing the full resource potential of energy efficiency requires the development and availability of a wider variety of efficient end-use technologies in homes, buildings, and industrial facilities.

Realizing the full potential of energy efficiency also requires a more holistic view that extends upstream of the end-use realm to power generation and delivery. Improvements in the efficiency of auxiliary loads in power plants and in techniques to reduce T&D energy losses can yield significant energy savings at acceptable cost, but they also require validation through extensive assessment, testing, and demonstration.

EPRI is conducting a multi-year energy efficiency demonstration project focused on six “hyper-efficient” electricity utilization technologies. These technologies may be able to reduce electric energy consumption in residential and commercial applications by up to 40% in each application. If fully deployed, they could reduce the demand for electric energy by 10% to 20%. The six “hyper-efficient” technologies being demonstrated are:

- Variable refrigerant flow air conditioning (with and without ice storage)

- Heat pump water heating

- Ductless residential heat pumps and air conditioners

- Hyper-efficient residential appliances

- Data center energy efficiency

- Light-emitting diode street and area lighting

Improve Efficiency in Generation and Delivery. Energy efficiency can help meet the challenges of maintaining reliable and affordable electric service, managing energy resources, and reducing carbon emissions. While many utilities are encouraged by their regulators to engage in end-use energy efficiency programs, few consider options to reduce energy losses along the electricity value chain. In many cases, the efficiency gains that could be realized through measures to reduce T&D losses or reduce electricity consumption at power plants can be significant.

Recent EPRI analyses indicate that approximately 11% of the electricity produced is consumed in producing and delivering electricity itself, both by energizing auxiliary devices such as pumps, material handlers, and environmental controls and through T&D losses. Based on 2010 estimates of U.S. electricity generation, this represents 450.7 billion kilowatt-hours, making the electric sector the second-largest electricity-consuming industry.

The application of new technologies may have the potential to reduce electricity use in electric utilities by 10% to 15%. Even a 10% reduction is enough electricity to power 3.9 million homes. EPRI has identified technology options and changes in operating methods that can improve overall efficiency.
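The figures in the preceding two paragraphs can be cross-checked with simple arithmetic. The quantities below are back-calculated from the article's own numbers (450.7 billion kWh, about 11%, a 10% reduction, 3.9 million homes), not from independent data: they imply total 2010 generation of roughly 4,100 billion kWh and about 11,560 kWh per home per year.

```python
own_use_bkwh = 450.7    # billion kWh used in production and delivery (2010)
own_use_share = 0.11    # approximately 11% of generation
homes = 3.9e6           # homes that a 10% reduction could power

implied_generation_bkwh = own_use_bkwh / own_use_share   # total generation implied
savings_bkwh = 0.10 * own_use_bkwh                       # a 10% cut in own use
implied_kwh_per_home = savings_bkwh * 1e9 / homes        # annual use per home implied

print(f"implied generation: {implied_generation_bkwh:,.0f} billion kWh")
print(f"10% savings: {savings_bkwh:.1f} billion kWh")
print(f"implied use per home: {implied_kwh_per_home:,.0f} kWh/yr")
```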

In power production, duty-cycle or capacity factor is the key driver that influences internal power use relative to unit output. In coal-fired power plants, the average internal power use across the sample used in EPRI’s analysis was 7.6%. In nuclear power plants, the average was 4.1%. Opportunities to reduce electricity use in power production may include advances in control systems for auxiliary power devices and the use of adjustable-speed drive (ASD) mechanisms. In addition, ASD installations often reduce CO2 emissions.

Electricity losses in power delivery total approximately 6.3%. In the distribution system, the use of efficient transformers, improved voltage control, phase balancing, and balancing of reactive power needs could substantially reduce electricity use. In the transmission system, opportunities include extra-high-voltage overlays, and transformer and line efficiency. In addition, there are a couple of other “discoveries” worth highlighting:

- Newer power plants are not necessarily more efficient than older plants, due principally to environmental requirements.

- Non-baseload operating plants have a particularly high potential for improvement by the application of ASDs on motors.
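Why ASDs pay off at part load is captured by the fan and pump affinity laws: flow scales with speed and shaft power with roughly the cube of speed, so slowing a motor rather than throttling its output saves disproportionate energy. The sketch below uses the ideal cube law, which ignores static head and motor and drive losses and therefore overstates real-world savings.

```python
def affinity_power_fraction(flow_fraction: float) -> float:
    """Ideal affinity law: shaft power scales with the cube of speed (and flow)."""
    if not 0.0 <= flow_fraction <= 1.0:
        raise ValueError("flow_fraction must be between 0 and 1")
    return flow_fraction ** 3


for flow in (1.0, 0.8, 0.6):
    print(f"{flow:.0%} flow -> {affinity_power_fraction(flow):.1%} of rated power")
```

A unit that spends many hours at reduced output, as non-baseload plants do, spends those hours in the region where the cube law gives the largest relative savings.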

Other EPRI research shows that shifting loads from peak to off-peak hours significantly improves efficiency by reducing load flows on the T&D system during peak periods, when losses are greatest, while also reducing cycling of selected generation units. Using alternative energy sources close to load centers to meet energy requirements during peak periods also can significantly reduce T&D losses during the most challenging periods of operation.
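The benefit of flattening peaks follows from resistive losses scaling with the square of current. Holding voltage, power factor, and line resistance constant (a simplifying assumption), losses are proportional to the sum of squared hourly loads, so moving energy from peak to off-peak hours cuts losses even though total energy delivered is unchanged. The loads below are hypothetical.

```python
from typing import List


def relative_line_losses(hourly_loads_mw: List[float]) -> float:
    """Sum of squared hourly loads; proportional to I^2*R losses when voltage,
    power factor, and resistance are held constant (simplifying assumption)."""
    return sum(p * p for p in hourly_loads_mw)


peaky = [150.0, 50.0]     # hypothetical two-hour load profile, MW (200 MWh total)
shifted = [100.0, 100.0]  # same 200 MWh with 50 MW moved off peak
reduction = 1 - relative_line_losses(shifted) / relative_line_losses(peaky)
print(f"loss reduction from load shifting: {reduction:.0%}")
```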

Given the intensity of energy consumption in the industry’s own physical infrastructure, efficiency measures undertaken at a finite number of power plants or in the power delivery grid can potentially yield energy savings and carbon emission reductions more cost-effectively than traditional end-use programs targeted at buildings, residential users, and other industries.

Improve Data Center Energy Efficiency. Typical data center power delivery designs use alternating current (AC) power, usually distributed within the facility at 480V AC. This power goes through several conversions from AC to DC and back again. Losses in inefficient power conversion devices, both upstream of and within the equipment, waste a large share of the useful electrical power. They also directly increase the energy required to remove the heat produced. Although estimates and actual measurements vary, information technology (IT) loads can sometimes account for 50% or less of total input power consumption.

Duke Energy and EPRI are working together on a demonstration project that focuses on DC conversion at the data center (or facility) level. The approach will convert the facility’s 480V AC into 380V DC and deliver it to the equipment racks via a 380V DC bus. Deploying the very best AC equipment can improve power distribution efficiency, but that approach only squeezes some of the losses out of each component. The DC approach eliminates the losses of the redundant AC conversion stages entirely by removing those less-efficient components.

DC power distribution is an alternative to the conventional data center AC power scheme. Most data center server racks are not currently powered with DC, but the servers and storage arrays can operate on either AC or DC. Typical servers and storage arrays inherently convert AC power to DC within each power supply, which adds another conversion loss. With DC powering, these extra conversion steps are eliminated, lowering losses, increasing reliability, reducing cooling needs and floor space requirements for data centers, and simplifying power supplies.
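Because conversion stages operate in series, overall efficiency is the product of the stage efficiencies, which is why removing stages matters. The stage names and efficiencies below are hypothetical round numbers chosen for illustration, not Duke Energy or EPRI measurements, and the comparison ignores the cooling savings described above.

```python
from math import prod
from typing import Iterable


def chain_efficiency(stage_efficiencies: Iterable[float]) -> float:
    """Overall efficiency of conversion stages in series is their product."""
    return prod(stage_efficiencies)


# Hypothetical stage efficiencies (illustrative only)
ac_chain = {"double-conversion UPS": 0.90, "PDU transformer": 0.97,
            "server AC-DC supply": 0.90}
dc_chain = {"380V DC rectifier": 0.96, "server DC-DC supply": 0.92}

ac_eff = chain_efficiency(ac_chain.values())
dc_eff = chain_efficiency(dc_chain.values())

it_load_kw = 1000.0
ac_input_kw = it_load_kw / ac_eff   # facility input needed to serve the IT load
dc_input_kw = it_load_kw / dc_eff
saving = 1 - dc_input_kw / ac_input_kw
print(f"AC chain {ac_eff:.1%}, DC chain {dc_eff:.1%}, input saving {saving:.1%}")
```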

Preliminary results from testing of a DC power system at a Duke Energy data center in Charlotte, N.C., indicate that the system uses 15% less energy than a typical double-conversion uninterruptible power supply (UPS) AC power system.

Smart Grid

The “smart grid” concept combines information and communications technologies with the electricity grid to increase performance and provide new capabilities.

Increasing use of variable generation and controllable loads, combined with aging infrastructure, creates a scenario in which conveying actionable information to and from interactive markets, or monitoring asset health, will require greater use of information and communication technologies. Each utility will create its own smart grid through investments in back-office systems, communications networks, and intelligent electronic devices.

Smart grid functional requirements, interoperability, and cyber security standards are still evolving, and premature technology obsolescence could strand some investments if transitional technologies must be replaced before the end of their expected life.

This strategic issue requires a holistic vision with end-to-end system considerations including transmission, distribution, and end use.

Launch “Protect the Grid” Initiative. The increasing interconnectedness, automation, and communication capabilities of the power grid pose several significant cyber security, resiliency, and privacy challenges. Security threats to the grid could come from deliberate attacks by terrorists and hackers as well as inadvertent user errors and equipment failures. Additionally, the dramatic increase in the granularity of data about end-user behavior raises several new privacy concerns. To achieve a secure and resilient grid, advances must be made in assessing and monitoring risk, architectures to support end-to-end security, legacy systems security, approaches for managing incidents, and technology to support privacy.

EPRI has launched the Security and Privacy Initiative, a collaborative effort to investigate cyber security standards, business processes, and technologies that can address these issues. This project, which will expand to become the Cyber Security and Privacy Program in 2012, also will develop technologies, best practices, and controls on data privacy.

EPRI also has partnered with the DOE to conduct cyber security research and analysis to support the National Electric Sector Cyber Security Organization. As part of this three-year public/private partnership, EPRI will determine how to mitigate risks from impending threats, harmonize cyber security requirements, and assess cyber security standards and technologies.

Assessing and monitoring the cyber security posture for energy delivery systems is vital to understanding and managing cyber security risk. As part of its R&D, EPRI is working with advanced metering infrastructure (AMI) vendors and utilities to identify a set of alerts and alarms that can be standardized to enhance AMI security event monitoring. The EPRI program in 2012 will continue this effort and also focus on network security management architectures for T&D systems. These activities will support the long-term objective of enhanced situational awareness across the domains of the power delivery system.
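Standardized alerts let one monitoring rule work across meters from different vendors. A minimal sketch of the idea maps vendor-specific alarm codes onto a common event set and counts the results. The vendor names, codes, and event categories are all hypothetical placeholders, not the set that EPRI, AMI vendors, and utilities are defining.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical mapping of (vendor, vendor-specific code) to a common event name.
COMMON_EVENT_MAP = {
    ("vendor_a", "E101"): "meter_cover_removed",
    ("vendor_b", "TAMPER_1"): "meter_cover_removed",
    ("vendor_a", "E207"): "authentication_failure",
    ("vendor_b", "AUTH_FAIL"): "authentication_failure",
    ("vendor_a", "E300"): "firmware_changed",
    ("vendor_b", "FW_UPD"): "firmware_changed",
}


def normalize(events: Iterable[Tuple[str, str]]) -> List[str]:
    """Translate (vendor, code) pairs to common event names; unknown codes -> 'unmapped'."""
    return [COMMON_EVENT_MAP.get((vendor, code), "unmapped") for vendor, code in events]


def summarize(events: Iterable[Tuple[str, str]]) -> Counter:
    """Count normalized events so one alerting rule can apply to every vendor."""
    return Counter(normalize(events))


feed = [("vendor_a", "E101"), ("vendor_b", "TAMPER_1"),
        ("vendor_b", "AUTH_FAIL"), ("vendor_c", "X9")]
print(summarize(feed))
```

Keeping an explicit "unmapped" bucket makes gaps in the common event set visible instead of silently dropping alarms.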

Increasing the security of next-generation energy delivery systems will require a combination of new security architectures, tools, and procedures that provide end-to-end security and support defense-in-depth features. The EPRI program will address this need by developing protective measures, such as key management, high-assurance architectures, and security testing tools to validate the level of protection. The program also will focus on reducing the security risk of legacy systems through the development of risk mitigation strategies, transition strategies, and the assessment of substation security solutions.

5. Low exposure. An EPRI researcher takes data on radio-frequency exposure from smart meters. These meters are typically part of a wireless mesh network of approximately 500 to 750 home meters connected through a “cell relay” meter to the local utility via a cellular wireless wide area network. The cell relay meter operates at a nominal power level of 1 watt. Courtesy: EPRI

Although efforts to prevent and detect cyber incidents are important for the protection of control systems, they do not prepare for the eventuality of a cyber incident. Energy delivery systems also must be resilient to cyber incidents and continue to perform critical functions while under duress and during the recovery process. EPRI’s R&D is addressing part of this issue by developing guidelines and best practices for responding to cyber incidents on AMI systems. In the future, the EPRI program will support resiliency for the grid by focusing on decision support tools for responding to cyber incidents, as well as tools and techniques to support cyber security forensics.

Make the Smart Grid Interoperable. Interoperability is the ability of two devices or systems to exchange information and use that information to perform their functions. The vision of the smart grid is that millions of devices in different domains and with different owners will be able to exchange information. This exchange of information needs to happen with minimal integration cost and difficulty.

The lack of mature, uniform standards to enable interoperability among systems has been cited by utilities and regulators as a reason for not moving forward with smart grid applications such as AMI. Investments in proprietary systems risk long-term vendor lock-in and costly “fork-lift” upgrades to fully take advantage of new smart grid applications.

The key to interoperability is standards. The use of standards to integrate complex systems and components dramatically reduces both implementation and operational costs. But for devices and systems to communicate easily, they must speak the same language. Standards abound in the utility space, but they have different, sometimes overlapping domains. Some standards are mature and others are emerging.

Both technical and implementation gaps need to be addressed simultaneously. Existing technical standards for smart grid applications are incomplete and have not been broadly adopted by utilities, vendors, and third-party device manufacturers. Some existing standards need to be harmonized, and reference architectures and interface standards that will enable interoperability between equipment from different vendors will need to be developed. In addition, many of the existing standards do not address cyber security or device management uniformly.

Achieving interoperability is a huge undertaking that requires the active participation of all stakeholders, including the federal government, standards development organizations, and user groups. All of these activities need to be coordinated and harmonized so that data and information can be shared with the people who need it.

—Arshad Mansoor is senior vice president, research & development for the Electric Power Research Institute.